Research page: https://meta.wikimedia.org/wiki/Research:Study_of_performance_perception

Introduction

The metrics we currently use to measure page load performance rest on assumptions about what users prefer. The assumption that faster is always better is built into nearly every metric used in this field, yet academic research suggests that speed might not be the universal main criterion for assessing quality of experience (performance stability might be preferred), and that the criterion is likely to depend on the subject and the context.

We usually deal with two classes of metrics. The first is real user metrics (RUM), which we collect passively from users by leveraging the performance information we can capture client-side. These are usually very low level, highly granular and quite disconnected from the experience as the user perceives it. The second is synthetic metrics, where automated tools simulate the user experience and take measurements. These get closer to the user experience by allowing us to measure visual characteristics of a page load. But both are far from capturing what the page load feels like to users, because neither involves any human input at the time of measurement. Even their modeling is often just a best guess by engineers, and only recently have studies looked at the correlation between those metrics and user sentiment. That correlation wasn't part of the metrics' design.

In this study, we would like to bridge the gap between user sentiment and the passive RUM performance metrics that are easy to collect unobtrusively.

Collecting user-perceived page load performance

Many studies draw users' attention to the mechanics of the page load in order to ask them about it, for example by showing them 2 videos side by side of the same page loading differently. This is a very artificial exercise, disconnected from the real-world experience of loading a page in the middle of a user flow. In this study we want to avoid interfering with the page load experience, which is why we plan to ask real users, on the production wikis, about their experience after a real page load has finished, in the middle of their browsing session. After a randomly selected wiki page load, the user viewing the page is asked via an in-page popup to score how fast or pleasant that page load was.

Users will have an option to opt out of this surveying permanently (the preference is stored in local storage). It might be useful to give them different options to dismiss the survey (e.g. "I don't want to participate", "I don't understand the question") so that we can tweak the UI if necessary.

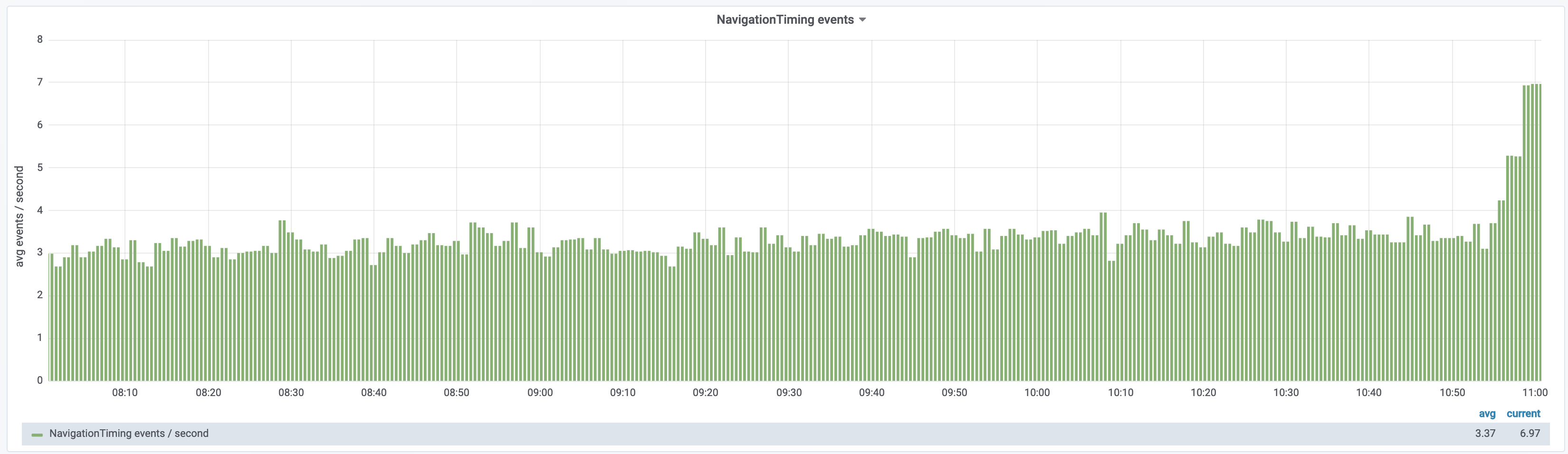

Collecting RUM metrics alongside

This is very straightforward, as we already collect such metrics. These need to be bundled with the survey response, in order to later look for correlations. In addition to performance metrics, we should bundle anonymized information about things that could be relevant to performance (user agent, device, connection type, location, page type, etc.). Most of this information is already being collected by the NavigationTiming extension and we could simply build the study on top of that.
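The bundling step can be pictured as a join between survey responses and the RUM measurements of the same page view. The following is only a minimal sketch under stated assumptions: the `page_view_id` join key, the field names and the answer values are all hypothetical and do not reflect the actual NavigationTiming or survey schemas.

```python
from dataclasses import dataclass, asdict
from typing import Optional


@dataclass(frozen=True)
class RumSnapshot:
    # Hypothetical fields; the real ones would follow the existing schema.
    page_view_id: str           # assumed identifier shared with the survey
    load_event_end_ms: float
    first_paint_ms: Optional[float]
    connection_type: Optional[str]
    wiki: str


@dataclass(frozen=True)
class SurveyResponse:
    page_view_id: str
    answer: str                 # assumed answer set, e.g. "yes" / "no"


def bundle(responses, snapshots):
    """Attach each survey answer to the RUM snapshot of the same page view.

    Responses without a matching snapshot are dropped, since they cannot
    be used to look for correlations later.
    """
    by_id = {s.page_view_id: s for s in snapshots}
    rows = []
    for response in responses:
        snapshot = by_id.get(response.page_view_id)
        if snapshot is None:
            continue
        row = asdict(snapshot)
        row["answer"] = response.answer
        rows.append(row)
    return rows
```

Keeping one flat row per surveyed page view makes it simple to slice the data later by any of the contextual fields.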

Attempting to build a model that lets us derive user-perceived performance scores from RUM data only

Once we have user-perceived performance scores with RUM data attached to them, we will attempt to build a model that reproduces the user-perception scores from the underlying RUM metrics.

We can try building a universal model at first, applying to all users and all pages on the wiki, and then attempt to build context-specific models (by wiki, page type, connection type, user agent, location, etc.) to see if we can get better correlation.
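As a rough illustration of why the context-specific step matters, the sketch below computes a Pearson correlation between one RUM metric and a numeric survey score, first over all rows and then per context value. The row layout and the key names passed in are assumptions made for the example, and a real analysis would likely use a more suitable statistic and far more features.

```python
from collections import defaultdict
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation, or None when it is undefined."""
    n = len(xs)
    if n < 2:
        return None
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return None
    return cov / sqrt(var_x * var_y)


def correlation_by_context(rows, metric, score, context):
    """Correlate `metric` with `score` overall and per `context` value.

    `rows` is a list of dicts (hypothetical layout); the overall result
    is stored under the "__all__" key.
    """
    groups = defaultdict(list)
    for row in rows:
        groups[row[context]].append(row)

    def corr(members):
        return pearson([m[metric] for m in members],
                       [m[score] for m in members])

    result = {"__all__": corr(rows)}
    for key, members in groups.items():
        result[key] = corr(members)
    return result
```

If two contexts show opposite trends, the overall correlation can be close to zero even though each context-specific correlation is clear, which is the situation the context-specific models are meant to detect.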

Ideally, given the large amount of RUM data we can collect (we could actually collect more than we currently do), we would try the most exhaustive set of features possible. We should try both expert models and machine learning, as prior work has shown that both can give satisfying results in similar contexts.

Scope

While it would be nice to have the user-perceived performance scores collected on all wikis, some have communities that are less likely to welcome such experiments by the WMF. We could focus the initial study on wikis that are usually more friendly to cutting-edge features, such as frwiki or cawiki. Doing at least 2 wikis would be good, in order to see if the same model could work for different wikis, or if we're already finding significant differences between wikis.

This study will focus only on the desktop website. It can easily be extended to the mobile site or even the mobile apps later, but for the sake of validating the idea, focusing on a single platform should be enough. There is no point making the study multi-platform if we don't get the results we hope for on a single one.

Challenges

- Picking when to ask. How soon in the page load lifecycle is too soon to ask? (the user might not consider the page load to be finished yet) How late is too late? (the user might have forgotten how the page load felt)

- Does the survey pop-up interfere with the page load perception itself? We have to display a piece of UI on screen to ask the question, and it's part of the page. We need to limit the effect of this measurement as much as possible. One of the main design criteria should be that the survey UI's appearance doesn't feel like part of the initial page load.

- How should the question be asked? Phrasing matters. If we ask a question that is too broad (e.g. "Are you having a pleasant experience?"), people might answer thinking about a broader context, like their entire browsing session, the contents of the page, or whether or not they found what they wanted to find on the wiki. If the question is too narrow, it might make them think too much about page loading mechanics they normally don't think about.

- What grading system should we use? There are a number of psychological effects at play when picking a score for something, and we should be careful to pick the model that's the most appropriate for this task.

Limitations

This study won't look at performance stability. For example, if the page loads before the one being surveyed were unusually fast or slow, this will likely affect the perception of the current one. We could explore that topic more easily in a follow-up study if this initial study, which is limited to page loads considered in isolation, identifies meaningful RUM metrics.

Expected outcomes

- We don't find any satisfying correlation between any RUM-based model and the user-perceived performance scores, even when sliced by page type/wiki/user profile. This informs us, and the greater performance community, that RUM metrics are a poor measurement of user-perceived performance. It would be a driving factor to implement new browser APIs that measure performance metrics closer to what users really experience. In the short term it would put a bigger emphasis on synthetic metrics as a better reference for user-perceived performance (as there has been academic work showing a decent correlation there already). It could also drive work into improving synthetic metrics further. Also, from an operational perspective, if we keep the surveys running indefinitely, we would still get to measure user-perceived performance globally as a metric we can follow directly. It will be harder to make it actionable, but we would know globally if user sentiment is getting better or worse over time and could slice it by different criteria.

- We find a satisfying RUM-based universal model. Depending on its characteristics, we can assess whether it's a wiki-specific one, or whether we have potentially uncovered a universal understanding that could be verified in follow-up studies done by others on other websites.

- We find a satisfying RUM-based model adapted to some context. This would change the way performance optimization is done, by showing that context matters, meaning that improving performance might not take the form of a one-size-fits-all solution.

In the last 2 cases, this would allow us to have a universal performance metric that we can easily measure passively at scale and that we know is a good representation of user perception. This would be a small revolution in the performance field, where currently the user experience and the passive measurements are completely disconnected.

See also

The following URL shows the survey unconditionally (Note: submissions are real!):

https://ca.wikipedia.org/wiki/Plantes?quicksurvey=internal-survey-perceived-performance-survey

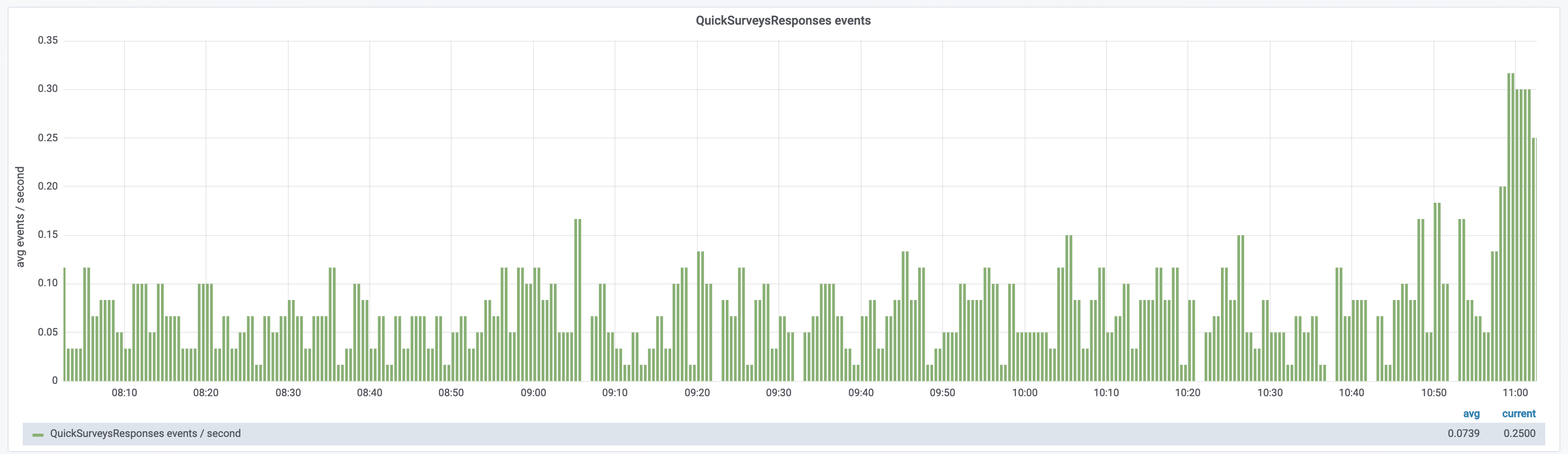

The following dashboard shows ingestion of responses (Note: This can include other surveys in the future, although as of writing, no other ones are enabled).

https://grafana.wikimedia.org/dashboard/db/eventlogging-schema?var-schema=QuickSurveysResponses