In T124874 we tested different ways of making mobile pages faster on 2G, and we wanted a baseline for how fast the page loads, so we also tested on HTTP/1. And guess what happened? According to WebPageTest, HTTP/1 started to render the Obama page much faster than SPDY! We tested the page using a throttled connection (2GFast), and the outcome was that the render-blocking CSS was delivered much later on SPDY.

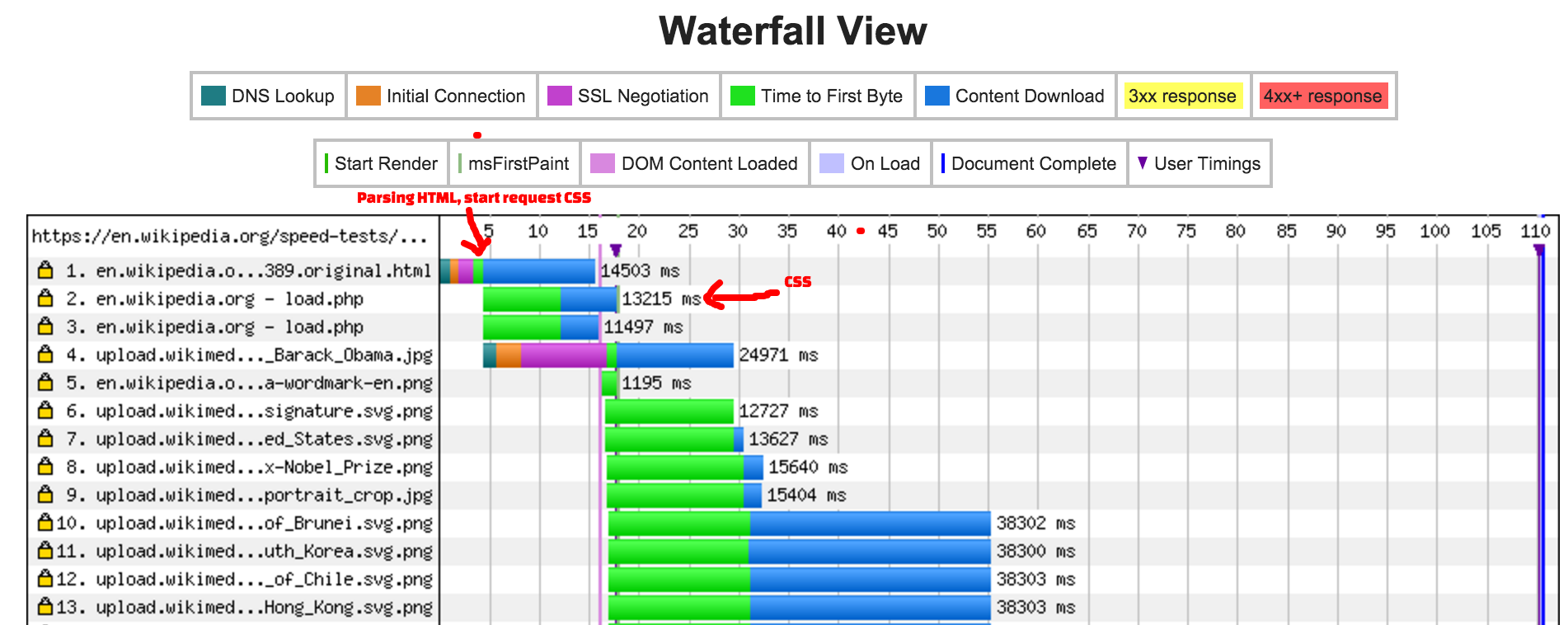

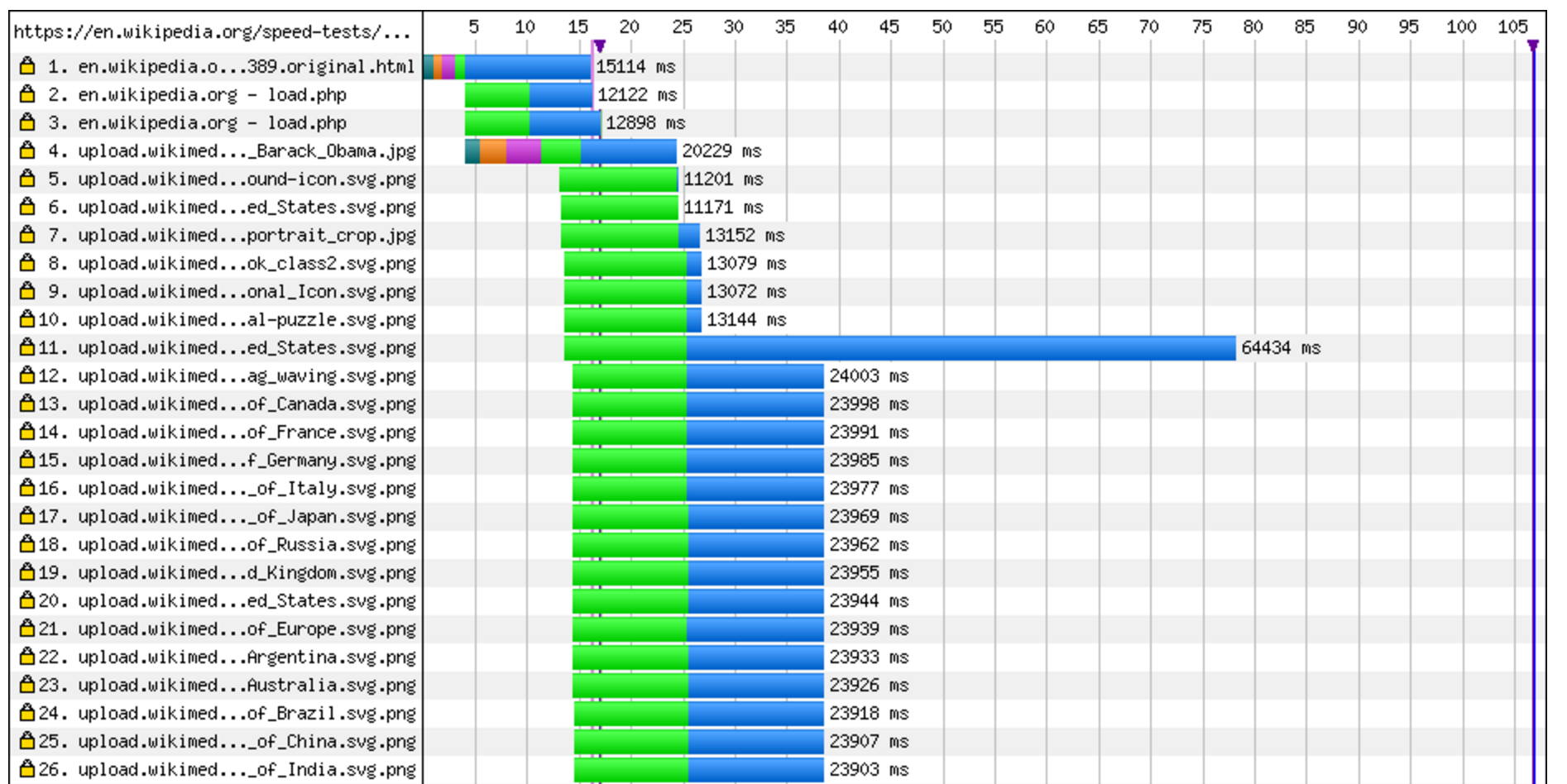

Using SPDY it looked like this:

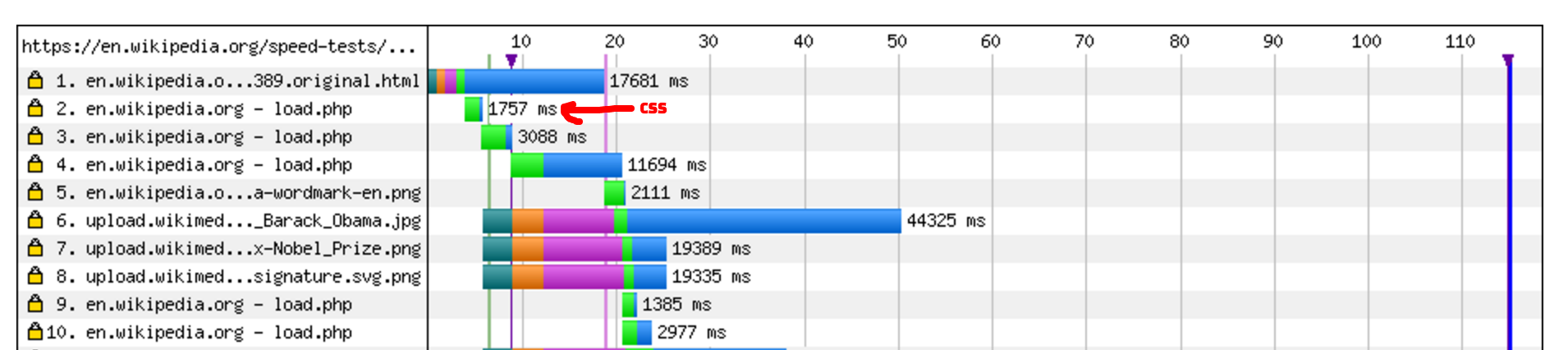

And HTTP/1 like this:

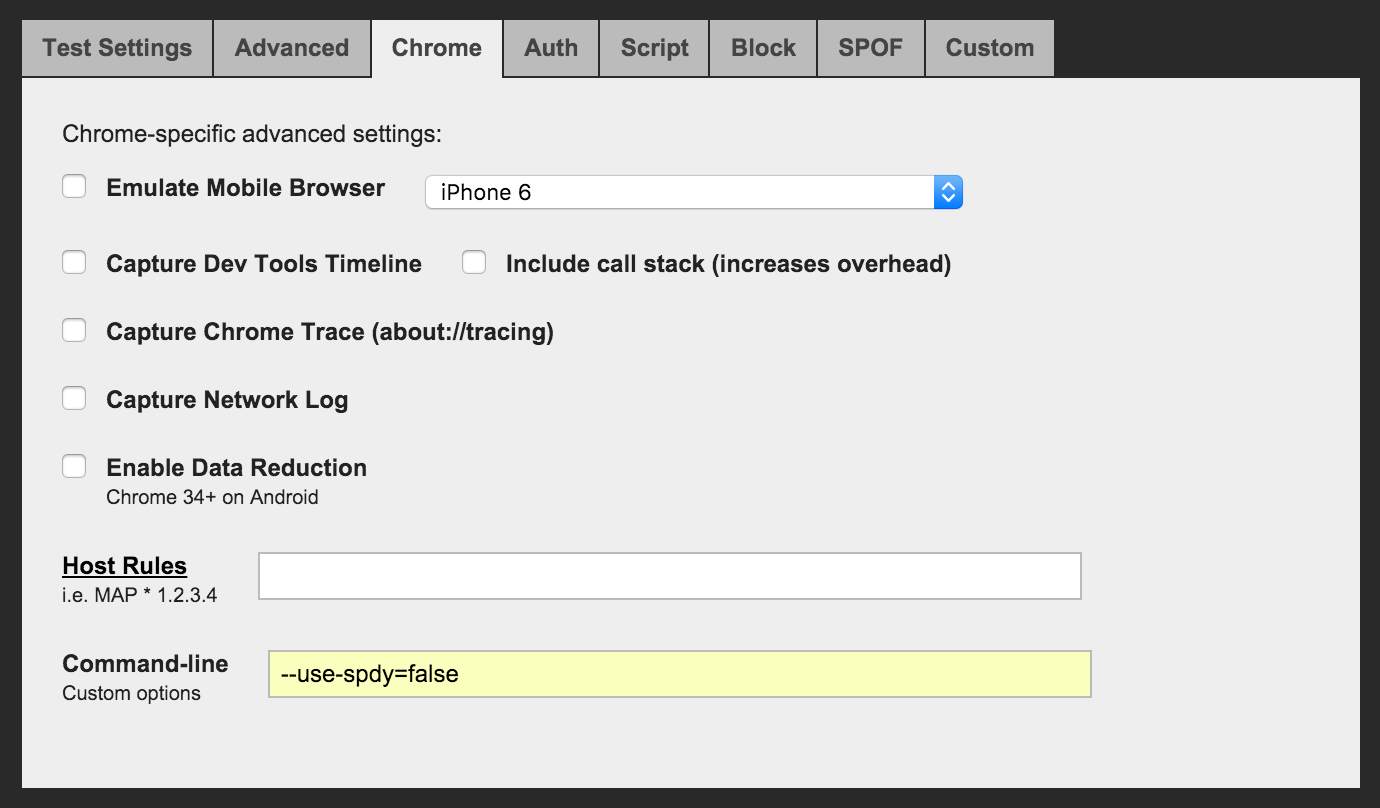

Could something be wrong in WebPageTest? When we tested, we used the --use-spdy=false switch on Chrome to get HTTP/1 connections. We also tested Firefox with SPDY and saw a late start render there too. Using IE 11 (IE 11 on WPT only supports HTTP/1.1), we could see that rendering started much faster. It really looks like SPDY is slower on slow connections. Still, there could be some general problem with SPDY on WPT.

Let's test it locally.

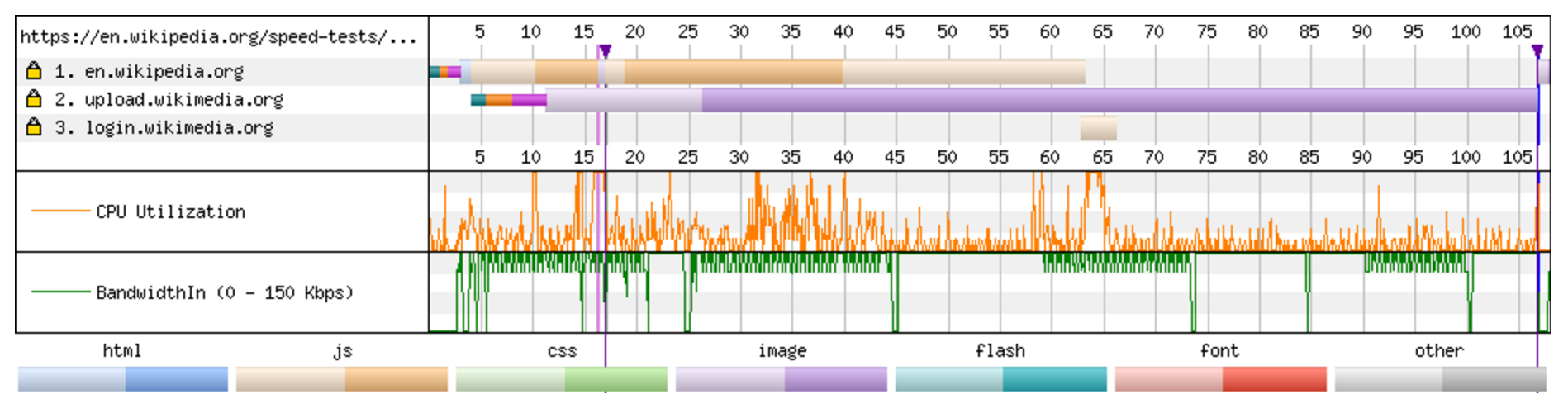

Part 1 - SPDY

I'm using Chrome Canary because the network throttler is more configurable there. However, setting the same values as WebPageTest's 2GFast didn't work for me: I never got a first paint. I start Canary, open Developer Tools and choose Regular 2G (that is much faster than 2GFast on WebPageTest, but let's use it for now). I make sure the cache is disabled, set the device mode to mobile, and in the network panel choose to show the protocol column (to make sure it uses SPDY/3.1). Then I access the Obama page, https://en.m.wikipedia.org/wiki/Barack_Obama.

At the same moment I press Enter, I start the stopwatch on my phone (yep, I'm old school). When the page starts to render, I stop the watch. Then I check the first paint from Chrome:

console.log((window.chrome.loadTimes().firstPaintTime * 1000) - (window.chrome.loadTimes().startLoadTime*1000));
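The one-liner above can be wrapped in a small helper so it is easier to reuse between runs. This is only a sketch: `firstPaintMs` is a name made up for this post, and it assumes Chrome's non-standard `window.chrome.loadTimes()`, which reports timestamps in seconds and is not available in other browsers.

```javascript
// Sketch: first paint in milliseconds, relative to the start of the page load.
// `loadTimes` is assumed to look like the object returned by Chrome's
// non-standard window.chrome.loadTimes(), with timestamps in seconds.
function firstPaintMs(loadTimes) {
  // Guard against a missing object or a missing/zero first paint value.
  if (!loadTimes || !loadTimes.firstPaintTime) {
    return null;
  }
  return Math.round((loadTimes.firstPaintTime - loadTimes.startLoadTime) * 1000);
}

// In the Chrome console (skipped when window.chrome.loadTimes is not there):
if (typeof window !== 'undefined' && window.chrome && window.chrome.loadTimes) {
  console.log(firstPaintMs(window.chrome.loadTimes()));
}
```

Returning `null` instead of a negative number makes it obvious when the page has not painted yet, which matters on a connection as slow as the ones tested here.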

I also do the same thing with the connection throttled as GPRS (yep, that is really slow, I know, but I want to see what happens on a really slow connection).

Part 2 - HTTP 1.1

I use the exact same setup, except that I close Chrome Canary and reopen it from the command line with the switch that disables SPDY. On my machine Canary is located in /Applications/Google Chrome Canary.app/Contents/MacOS, and I start it like this:

$ ./Google\ Chrome\ Canary --use-spdy=off

I follow the same procedure and verify the protocol in the dev tools panel. Yep, it is 1.1.

The result

Testing it locally gave me this result.

| Protocol | Connection type | Stopwatch | Chrome first paint |
| --- | --- | --- | --- |
| SPDY | Regular 2G | 6.0 s | 5.2 s |
| SPDY | GPRS | 27 s | 26.4 s |
| HTTP/1.1 | Regular 2G | 3.6 s | 2.9 s |
| HTTP/1.1 | GPRS | 16.4 s | 15.9 s |

With our current setup, HTTP/1.1 starts rendering the page faster than SPDY on slow connections. How can we make SPDY faster?
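To put a number on the gap, here is a small sketch that computes how much later SPDY painted for each connection type. The values are the Chrome first paint numbers from the table above; the `compare` helper and the object layout are just illustrative names.

```javascript
// Chrome first paint values (seconds) copied from the results table.
const firstPaint = {
  'Regular 2G': { spdy: 5.2, http1: 2.9 },
  'GPRS': { spdy: 26.4, http1: 15.9 },
};

// Returns how much later SPDY painted, as a difference and as a ratio.
function compare(row) {
  return {
    deltaSeconds: Number((row.spdy - row.http1).toFixed(1)),
    ratio: Number((row.spdy / row.http1).toFixed(2)),
  };
}

for (const [connection, row] of Object.entries(firstPaint)) {
  const { deltaSeconds, ratio } = compare(row);
  console.log(`${connection}: SPDY first paint ${deltaSeconds} s later (${ratio}x)`);
}
```

In this single local run, SPDY's first paint came 2.3 s later on Regular 2G (about 1.79x) and 10.5 s later on GPRS (about 1.66x). These are one-off measurements, so treat the ratios as rough indications rather than stable numbers.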

Questions

Things we should find out:

- Is the way I test wrong in any way? Should we try something else?

- Could we tweak SPDY so that the CSS is delivered earlier? Is there an easy win out there?

- Will this also exist when we switch to HTTP/2?

- Do the timings in any way reflect the numbers we collect with the Navigation Timing metrics from real users (i.e. is this a real problem)?