Over 1.5h to merge this patch -- https://gerrit.wikimedia.org/r/#/c/269083/

mediawiki-extensions-php53 SUCCESS in 20m 55s
mediawiki-extensions-hhvm SUCCESS in 13m 17s

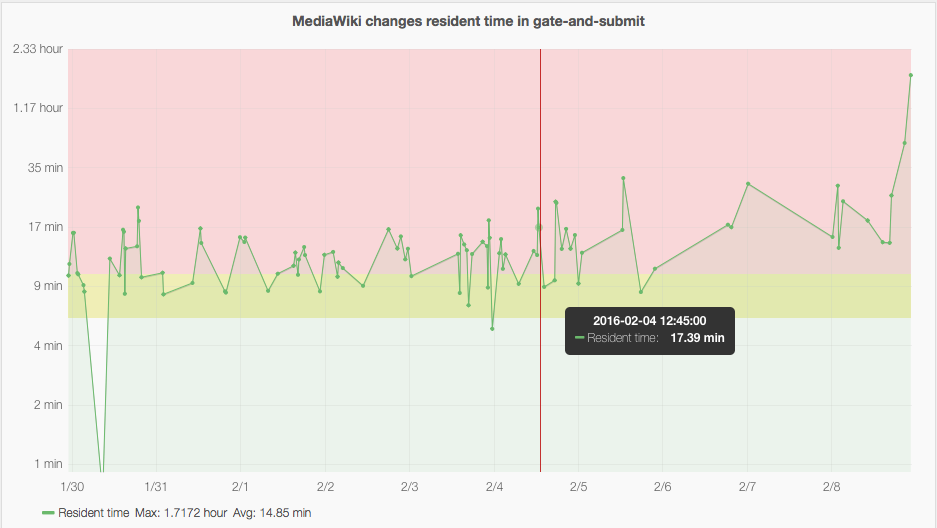

From our graph that tracks the amount of time a change spends in gate-and-submit ( https://grafana.wikimedia.org/dashboard/db/releng-kpis?panelId=2&fullscreen ):