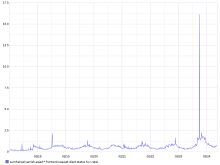

Since February 17 around 20:00Z, we're seeing a steep increase in the number of 5xx responses served from all datacenters.

Here is the graph for eqiad, where network/connectivity issues can't be the cause of such errors:

Looking at the SAL, I just see a couple of deployments around that time.

This needs investigation as some users are reporting more frequent timeouts when navigating our sites. Note that there was a second step up on February 23rd around 16:00Z, which made things even more worrying.

It's important to note that this does /not/ come from upload caches, so it might be MediaWiki-related.

Findings so far

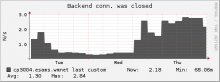

- The surge comes from text caches

- The surge is not tied to a specific datacenter or varnish backend, so we can exclude a hardware issue on any single machine.

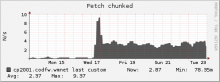

- The first surge correlates with the timing of enabling keepalive on text caches; the progressive rise in 503s over about one hour also suggests a puppet change propagating across the cluster.

- This still would not explain the second surge. We might be seeing the superposition of two independent effects here.
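
To illustrate why a gradual rise over about an hour is consistent with a puppet change rolling out, here is a minimal sketch. It is not based on measured data: the function name `rollout_fraction`, the host count, the 60-minute window, and the assumption that each host applies the change at a uniformly random time within that window are all hypothetical.

```python
import random


def rollout_fraction(num_hosts, window_minutes, step_minutes, seed=0):
    """Return [(minute, fraction_of_hosts_updated)] for a staggered rollout."""
    rng = random.Random(seed)
    # Assumption: each host picks up the change at a uniformly random
    # offset within the window (e.g. at its next scheduled agent run).
    apply_times = [rng.uniform(0, window_minutes) for _ in range(num_hosts)]
    timeline = []
    for minute in range(0, window_minutes + 1, step_minutes):
        updated = sum(1 for t in apply_times if t <= minute)
        timeline.append((minute, updated / num_hosts))
    return timeline


if __name__ == "__main__":
    # Hypothetical numbers: 100 cache hosts, change fully applied within 60 minutes.
    for minute, fraction in rollout_fraction(100, 60, 10):
        print(f"t+{minute:3d} min: {fraction:.0%} of hosts updated")
```

If the 503 rate scales with the share of hosts running the new configuration, the error graph would ramp up roughly linearly over the window instead of jumping at once, which matches the shape of the first surge. A single step, like the one on February 23rd, would point to a different kind of change.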