Labs Project Tested: phabricator

Site/Location:CODFW

Number of systems: 1

Service: phabricator

Networking Requirements: external IP, LVS

Processor Requirements: ?

Memory: 64

Disks: 500gb

NIC(s):

Partitioning Scheme: one large volume for /srv on raid1

Other Requirements:

Our current phabricator setup lacks redundancy which causes problems during deployments, kernel upgrades and any hardware related issues.

Details

It's not currently trivial to recreate phabricator from backups + puppet, so any issues will cause significant downtime.

Given how much we rely on phabricator, it's fairly critical to maintain nearly 100% uptime.

Here are the components involved with phabricator:

| host | domain/service name | Description |

| iridium.eqiad.wmnet | phabricator.wikimedia.org | Apache + PHP |

| git-ssh.wikimedia.org | sshd + phabricator git integration | |

| phd | phabricator's worker queue | |

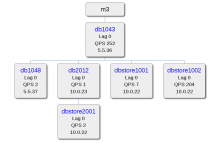

| m3-master.eqiad.wmnet | MySQL | Phabricator's DB Cluster |

The database is already redundant, however, all the other critical pieces are running on iridium with no fallback.

Iridium specs

The specs for iridium are fairly generous. It's a 16 core Intel(R) Xeon(R) CPU E5-2450 v2 @ 2.50GHz with 64G ram and 2x500G (raid1 mirrored) storage.

Iridium's repository storage looks like this:

Filesystem Size Used Avail Use% Mounted on /dev/md0 9.1G 6.2G 2.4G 73% / /dev/md2 456G 138G 318G 31% /srv

What's needed

In order to have some redundancy, I'd like to start with a backup web server. If we have a machine available with a decent amount of storage then we could also have a repository mirror on the same machine. The repositories will become more important soon as we are migrating from gerrit to differential real soon now.

Even without the repository storage it would still be useful to have a backup web server to handle requests for maniphest. So at minimum all that we need is a small apache+php machine with no other special dependencies.

For a good backup (as apposed to a bare minimum) I think the following specs would be appropriate: 4-8 core CPU, 16-32G ram and 500g of non-mirrored storage.

Load Balancing

Once we have a second phabricator webserver, we could experiment with load balancing as well, though I am primarily interested in redundancy not performance.

Geographical Diversity

It might also make sense to allocate the backup in codfw for the sake of keeping a live mirror of repositories in dallas. This could be used as a primary host when machines in codfw need to clone repositories and I believe it might even be possible to do 2-way mirroring of git, however, that requires more planning and configuration.