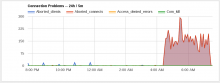

There is a huge number of connections to the master, so many that there are 5000 connection errors per minute.

While I cannot yet explain it, most of the failing connections seem to have started at 12:30-12:31 UTC. Most of the failures come from wikibase-addUsagesForPage job execution. Whether that is a symptom (because there are a lot of those jobs) or a cause, I cannot say yet:

https://logstash.wikimedia.org/#dashboard/temp/AVUF9DIZivsygkP3Y44Z
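
To tell symptom from cause, one option is to bucket the errors per minute and per job type and check which job type crosses a threshold first. This is only a minimal sketch: it assumes the log entries can be exported as dicts with a `timestamp` (ISO 8601) and a `job_type` field. Those field names and both function names are hypothetical, not the actual logstash schema.

```python
from collections import Counter
from datetime import datetime


def errors_per_minute(records):
    """Count error records per (minute, job type).

    Each record is assumed to be a dict with hypothetical keys
    'timestamp' (ISO 8601 string) and 'job_type'.
    """
    counts = Counter()
    for rec in records:
        ts = datetime.fromisoformat(rec["timestamp"])
        # Truncate to the start of the minute so records bucket together.
        minute = ts.replace(second=0, microsecond=0)
        counts[(minute, rec["job_type"])] += 1
    return counts


def onset_minute(counts, job_type, threshold):
    """Return the first minute in which job_type reaches threshold errors, or None."""
    minutes = sorted(
        minute
        for (minute, job), n in counts.items()
        if job == job_type and n >= threshold
    )
    return minutes[0] if minutes else None
```

If one job type reaches the threshold before any other, that points toward a cause rather than a symptom, although it would not prove it.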

HHVM was restarted yesterday, but much earlier (10:25-10:35), so I am ruling that out as a cause.

My best guesses are a config/code deploy or a change in traffic pattern, which we should identify and then either support or drop.