Today at around 13:50 some Cassandra instances experienced shutdown / restarts, all instances recovered on their own but further investigation is needed. The instances in question: 1007-b, 1008-b, 1010-b, and 1013-c

Findings

1007-b

The troubles begin at around 2016-10-18T13:51:05 with a number of tombstone warnings.

WARN [SharedPool-Worker-8] 2016-10-18 13:51:05,403 SliceQueryFilter.java:308 - Read 1 live and 23613 tombstone cells in local_group_default_T_summary.data for key: www.wikidata.org:Q27335792 (see tombstone_warn_threshold). 3 columns were requested, slices=[ea00744c-9539-11e6-b0d3-e8874bd2c097:!-] WARN [SharedPool-Worker-28] 2016-10-18 13:51:05,477 SliceQueryFilter.java:308 - Read 1 live and 15624 tombstone cells in local_group_default_T_mobileapps_remaining.data for key: www.wikidata.org:Q27335792 (see tombstone_warn_threshold). 1 columns were requested, slices=[-] WARN [SharedPool-Worker-20] 2016-10-18 13:51:05,766 SliceQueryFilter.java:308 - Read 1 live and 15624 tombstone cells in local_group_default_T_mobileapps_remaining.data for key: www.wikidata.org:Q27335792 (see tombstone_warn_threshold). 1 columns were requested, slices=[-] WARN [SharedPool-Worker-8] 2016-10-18 13:51:06,438 SliceQueryFilter.java:308 - Read 1 live and 15624 tombstone cells in local_group_default_T_mobileapps_remaining.data for key: www.wikidata.org:Q27335792 (see tombstone_warn_threshold). 3 columns were requested, slices=[ea7f27ba-9539-11e6-88e5-ee03422d6bf0:!-] WARN [SharedPool-Worker-3] 2016-10-18 13:51:11,059 SliceQueryFilter.java:308 - Read 2 live and 23614 tombstone cells in local_group_default_T_summary.data for key: www.wikidata.org:Q27335792 (see tombstone_warn_threshold). 3 columns were requested, slices=[ed62279d-9539-11e6-9aa0-7acf40f3ba78:!-] WARN [SharedPool-Worker-31] 2016-10-18 13:51:11,309 SliceQueryFilter.java:308 - Read 2 live and 15628 tombstone cells in local_group_default_T_mobileapps_remaining.data for key: www.wikidata.org:Q27335792 (see tombstone_warn_threshold). 3 columns were requested, slices=[ed6c46a2-9539-11e6-8e21-c9f934dea3db:!-]

After which the node entered StatusLogger Hell (an indication that load had become high). As the StatusLogger output progresses, you can see the read stage steadily backing up. At 2016-10-18T13:51:43, a number of read requests fail while fetching a quorum of role permissions from other nodes, and by 2016-10-18T13:53:10 the node OOMed.

ERROR [MessagingService-Incoming-/10.192.48.50] 2016-10-18 13:53:10,258 CassandraDaemon.java:185 - Exception in thread Thread[MessagingService-Incoming-/10.192.48.50,5,main]

java.lang.OutOfMemoryError: Java heap space

at java.nio.ByteBuffer.wrap(ByteBuffer.java:373) ~[na:1.8.0_102]

at sun.security.ssl.CipherBox.applyExplicitNonce(CipherBox.java:981) ~[na:1.8.0_102]

at sun.security.ssl.InputRecord.decrypt(InputRecord.java:157) ~[na:1.8.0_102]

at sun.security.ssl.SSLSocketImpl.readRecord(SSLSocketImpl.java:1015) ~[na:1.8.0_102]

at sun.security.ssl.SSLSocketImpl.readDataRecord(SSLSocketImpl.java:930) ~[na:1.8.0_102]

at sun.security.ssl.AppInputStream.read(AppInputStream.java:105) ~[na:1.8.0_102]

at net.jpountz.lz4.LZ4BlockInputStream.readFully(LZ4BlockInputStream.java:216) ~[lz4-1.3.0.jar:na]

at net.jpountz.lz4.LZ4BlockInputStream.refill(LZ4BlockInputStream.java:150) ~[lz4-1.3.0.jar:na]

at net.jpountz.lz4.LZ4BlockInputStream.read(LZ4BlockInputStream.java:102) ~[lz4-1.3.0.jar:na]

at java.io.DataInputStream.readInt(DataInputStream.java:387) ~[na:1.8.0_102]

at org.apache.cassandra.net.IncomingTcpConnection.receiveMessages(IncomingTcpConnection.java:176) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.net.IncomingTcpConnection.run(IncomingTcpConnection.java:91) ~[apache-cassandra-2.2.6.jar:2.2.6]From the stacktrace, you can see that the OOM occurred while decompressing incoming data off the wire (though this could just be the proverbial camel back-breaking final straw). GC logs could provide additional information, but due to the completely broken way that the JVM rotates out these logs, the subsequent restart obliterated the relevant output.

1008-b

Similar to 1007-b, StatusLogger output begins ramping up at ~13:50, the first read stage backlog is evident at 13:50:45. By 13:50:49 a number of mutation and read messages are being dropped, and at 13:50:52 the OOM occurs.

ERROR [SharedPool-Worker-7] 2016-10-18 13:50:52,452 JVMStabilityInspector.java:117 - JVM state determined to be unstable. Exiting forcefully due to:

java.lang.OutOfMemoryError: Java heap space

at java.nio.HeapByteBuffer.<init>(HeapByteBuffer.java:57) ~[na:1.8.0_102]

at java.nio.ByteBuffer.allocate(ByteBuffer.java:335) ~[na:1.8.0_102]

at org.apache.cassandra.io.util.RandomAccessReader.readBytes(RandomAccessReader.java:340) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.utils.ByteBufferUtil.read(ByteBufferUtil.java:359) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.utils.ByteBufferUtil.readWithLength(ByteBufferUtil.java:322) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.db.ColumnSerializer.deserializeColumnBody(ColumnSerializer.java:132) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.db.AtomDeserializer.readNext(AtomDeserializer.java:108) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.io.sstable.format.big.IndexedSliceReader$IndexedBlockFetcher.getNextBlock(IndexedSliceReader.java:427) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.io.sstable.format.big.IndexedSliceReader$IndexedBlockFetcher.fetchMoreData(IndexedSliceReader.java:351) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.io.sstable.format.big.IndexedSliceReader.computeNext(IndexedSliceReader.java:143) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.io.sstable.format.big.IndexedSliceReader.computeNext(IndexedSliceReader.java:45) ~[apache-cassandra-2.2.6.jar:2.2.6]

at com.google.common.collect.AbstractIterator.tryToComputeNext(AbstractIterator.java:143) ~[guava-16.0.jar:na]

at com.google.common.collect.AbstractIterator.hasNext(AbstractIterator.java:138) ~[guava-16.0.jar:na]

at org.apache.cassandra.io.sstable.format.big.SSTableSliceIterator.hasNext(SSTableSliceIterator.java:83) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.db.filter.QueryFilter$2.getNext(QueryFilter.java:174) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.db.filter.QueryFilter$2.hasNext(QueryFilter.java:157) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.utils.MergeIterator$Candidate.advance(MergeIterator.java:146) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.utils.MergeIterator$ManyToOne.advance(MergeIterator.java:125) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.utils.MergeIterator$ManyToOne.computeNext(MergeIterator.java:99) ~[apache-cassandra-2.2.6.jar:2.2.6]

at com.google.common.collect.AbstractIterator.tryToComputeNext(AbstractIterator.java:143) ~[guava-16.0.jar:na]

at com.google.common.collect.AbstractIterator.hasNext(AbstractIterator.java:138) ~[guava-16.0.jar:na]

at org.apache.cassandra.db.filter.SliceQueryFilter.collectReducedColumns(SliceQueryFilter.java:264) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.db.filter.QueryFilter.collateColumns(QueryFilter.java:109) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.db.filter.QueryFilter.collateOnDiskAtom(QueryFilter.java:82) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.db.filter.QueryFilter.collateOnDiskAtom(QueryFilter.java:69) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.db.CollationController.collectAllData(CollationController.java:319) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.db.CollationController.getTopLevelColumns(CollationController.java:61) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.db.ColumnFamilyStore.getTopLevelColumns(ColumnFamilyStore.java:2025) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.db.ColumnFamilyStore.getColumnFamily(ColumnFamilyStore.java:1829) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.db.Keyspace.getRow(Keyspace.java:360) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.db.SliceFromReadCommand.getRow(SliceFromReadCommand.java:85) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.service.StorageProxy$LocalReadRunnable.runMayThrow(StorageProxy.java:1587) ~[apache-cassandra-2.2.6.jar:2.2.6]This exception occurs while locally reading a slice.

1010-b

ERROR [SharedPool-Worker-9] 2016-10-18 13:59:25,714 JVMStabilityInspector.java:117 - JVM state determined to be unstable. Exiting forcefully due to:

java.lang.OutOfMemoryError: Java heap space

at java.nio.HeapByteBuffer.<init>(HeapByteBuffer.java:57) ~[na:1.8.0_102]

at java.nio.ByteBuffer.allocate(ByteBuffer.java:335) ~[na:1.8.0_102]

at org.apache.cassandra.io.util.RandomAccessReader.readBytes(RandomAccessReader.java:340) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.utils.ByteBufferUtil.read(ByteBufferUtil.java:359) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.utils.ByteBufferUtil.readWithLength(ByteBufferUtil.java:322) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.db.ColumnSerializer.deserializeColumnBody(ColumnSerializer.java:126) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.db.AtomDeserializer.readNext(AtomDeserializer.java:108) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.io.sstable.format.big.IndexedSliceReader$IndexedBlockFetcher.getNextBlock(IndexedSliceReader.java:427) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.io.sstable.format.big.IndexedSliceReader$IndexedBlockFetcher.fetchMoreData(IndexedSliceReader.java:351) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.io.sstable.format.big.IndexedSliceReader.computeNext(IndexedSliceReader.java:143) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.io.sstable.format.big.IndexedSliceReader.computeNext(IndexedSliceReader.java:45) ~[apache-cassandra-2.2.6.jar:2.2.6]

at com.google.common.collect.AbstractIterator.tryToComputeNext(AbstractIterator.java:143) ~[guava-16.0.jar:na]

at com.google.common.collect.AbstractIterator.hasNext(AbstractIterator.java:138) ~[guava-16.0.jar:na]

at org.apache.cassandra.io.sstable.format.big.SSTableSliceIterator.hasNext(SSTableSliceIterator.java:83) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.db.filter.QueryFilter$2.getNext(QueryFilter.java:174) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.db.filter.QueryFilter$2.hasNext(QueryFilter.java:157) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.utils.MergeIterator$Candidate.advance(MergeIterator.java:146) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.utils.MergeIterator$ManyToOne.advance(MergeIterator.java:125) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.utils.MergeIterator$ManyToOne.computeNext(MergeIterator.java:99) ~[apache-cassandra-2.2.6.jar:2.2.6]

at com.google.common.collect.AbstractIterator.tryToComputeNext(AbstractIterator.java:143) ~[guava-16.0.jar:na]

at com.google.common.collect.AbstractIterator.hasNext(AbstractIterator.java:138) ~[guava-16.0.jar:na]

at org.apache.cassandra.db.filter.SliceQueryFilter.collectReducedColumns(SliceQueryFilter.java:264) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.db.filter.QueryFilter.collateColumns(QueryFilter.java:109) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.db.filter.QueryFilter.collateOnDiskAtom(QueryFilter.java:82) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.db.filter.QueryFilter.collateOnDiskAtom(QueryFilter.java:69) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.db.CollationController.collectAllData(CollationController.java:319) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.db.CollationController.getTopLevelColumns(CollationController.java:61) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.db.ColumnFamilyStore.getTopLevelColumns(ColumnFamilyStore.java:2025) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.db.ColumnFamilyStore.getColumnFamily(ColumnFamilyStore.java:1829) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.db.Keyspace.getRow(Keyspace.java:360) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.db.SliceFromReadCommand.getRow(SliceFromReadCommand.java:85) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.db.ReadVerbHandler.doVerb(ReadVerbHandler.java:38) ~[apache-cassandra-2.2.6.jar:2.2.6]Again, while performing a local slice query (at the request of a remote coordinator)...

1013-c

ERROR [SharedPool-Worker-128] 2016-10-18 13:53:45,570 JVMStabilityInspector.java:117 - JVM state determined to be unstable. Exiting forcefully due to:

java.lang.OutOfMemoryError: Java heap space

at org.apache.cassandra.db.ArrayBackedSortedColumns$CellCollection.iterator(ArrayBackedSortedColumns.java:769) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.db.ColumnFamily.iterator(ColumnFamily.java:488) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.db.ColumnFamily.updateDigest(ColumnFamily.java:396) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.db.ColumnFamily.digest(ColumnFamily.java:390) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.service.RowDigestResolver.resolve(RowDigestResolver.java:90) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.service.RowDigestResolver.resolve(RowDigestResolver.java:28) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.service.ReadCallback.get(ReadCallback.java:126) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.service.AbstractReadExecutor.get(AbstractReadExecutor.java:147) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.service.StorageProxy.fetchRows(StorageProxy.java:1436) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.service.StorageProxy.readRegular(StorageProxy.java:1360) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.service.StorageProxy.read(StorageProxy.java:1275) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.service.pager.SliceQueryPager.queryNextPage(SliceQueryPager.java:94) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.service.pager.AbstractQueryPager.fetchPage(AbstractQueryPager.java:85) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.service.pager.SliceQueryPager.fetchPage(SliceQueryPager.java:39) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.cql3.statements.SelectStatement.execute(SelectStatement.java:239) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.cql3.statements.SelectStatement.execute(SelectStatement.java:179) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.cql3.statements.SelectStatement.execute(SelectStatement.java:76) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.cql3.QueryProcessor.processStatement(QueryProcessor.java:226) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.cql3.QueryProcessor.processPrepared(QueryProcessor.java:466) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.cql3.QueryProcessor.processPrepared(QueryProcessor.java:443) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.transport.messages.ExecuteMessage.execute(ExecuteMessage.java:142) ~[apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.transport.Message$Dispatcher.channelRead0(Message.java:507) [apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.transport.Message$Dispatcher.channelRead0(Message.java:401) [apache-cassandra-2.2.6.jar:2.2.6]

at io.netty.channel.SimpleChannelInboundHandler.channelRead(SimpleChannelInboundHandler.java:105) [netty-all-4.0.23.Final.jar:4.0.23.Final]

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:333) [netty-all-4.0.23.Final.jar:4.0.23.Final]

at io.netty.channel.AbstractChannelHandlerContext.access$700(AbstractChannelHandlerContext.java:32) [netty-all-4.0.23.Final.jar:4.0.23.Final]

at io.netty.channel.AbstractChannelHandlerContext$8.run(AbstractChannelHandlerContext.java:324) [netty-all-4.0.23.Final.jar:4.0.23.Final]

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511) [na:1.8.0_102]

at org.apache.cassandra.concurrent.AbstractLocalAwareExecutorService$FutureTask.run(AbstractLocalAwareExecutorService.java:164) [apache-cassandra-2.2.6.jar:2.2.6]

at org.apache.cassandra.concurrent.SEPWorker.run(SEPWorker.java:105) [apache-cassandra-2.2.6.jar:2.2.6]

at java.lang.Thread.run(Thread.java:745) [na:1.8.0_102]While collating results for a client SELECT...

Heap dumps

There is something about Cassandra's handling of OOM exceptions that often times renders the resulting heap dump useless for analysis. You can tell when this occurs, because the heap dump is something less than the size of the heap (12G on these instances). However, it appears that we may have one or more dumps from this event that weren't trampled by the error handler. If intact, these heap dumps would be able to help identify precisely where the memory was allocated. Unfortunately, the tools I'm familiar with (jvisualvm and MAT) require a machine with something close to size of the dump in available RAM, and the ability to run a graphical application. I don't presently have access to a desktop machine with this much memory; I'd be grateful to anyone with ideas for how to analyze these.

Obligatory Dashboards

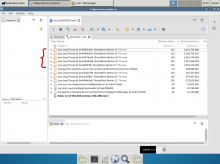

No report is complete without some dashboard plots, however I'm not certain any of these really add any insight into what happened.

There is plenty of evidence to be found of the impact (high latency, dropped messages, all the signs of memory pressure, etc). And, there is clearly something going on around the time the nodes failed, as is evidenced by, for example, increased internal RESTBase request rates. However the rates themselves don't seem sufficient to explain the impact.

- RESTBase request rates

- Cassandra write rate

- Cassandra read rate

- System metrics

- Cassandra GC / heap usage

Conclusions

When Cassandra OOMs like this, it's almost always the result of allocations from aberrant data, for example, range slices of a very wide partition (wide as the result of some combination of live and/or tombstoned columns). We have some well known issues here (very wide rows with large numbers of unreclaimed tombstones), and all of the evidence from this incident would point to that as the cause. An examination of the heap dumps would not only confirm this, but also highlight the offending data. As mentioned above though, this would require access to a machine with something on the order of ~12G of RAM and the ability to run a graphical Java app; Without a machine to perform a heap analysis, it may not be worth the time/effort to pursue this further.

See also: