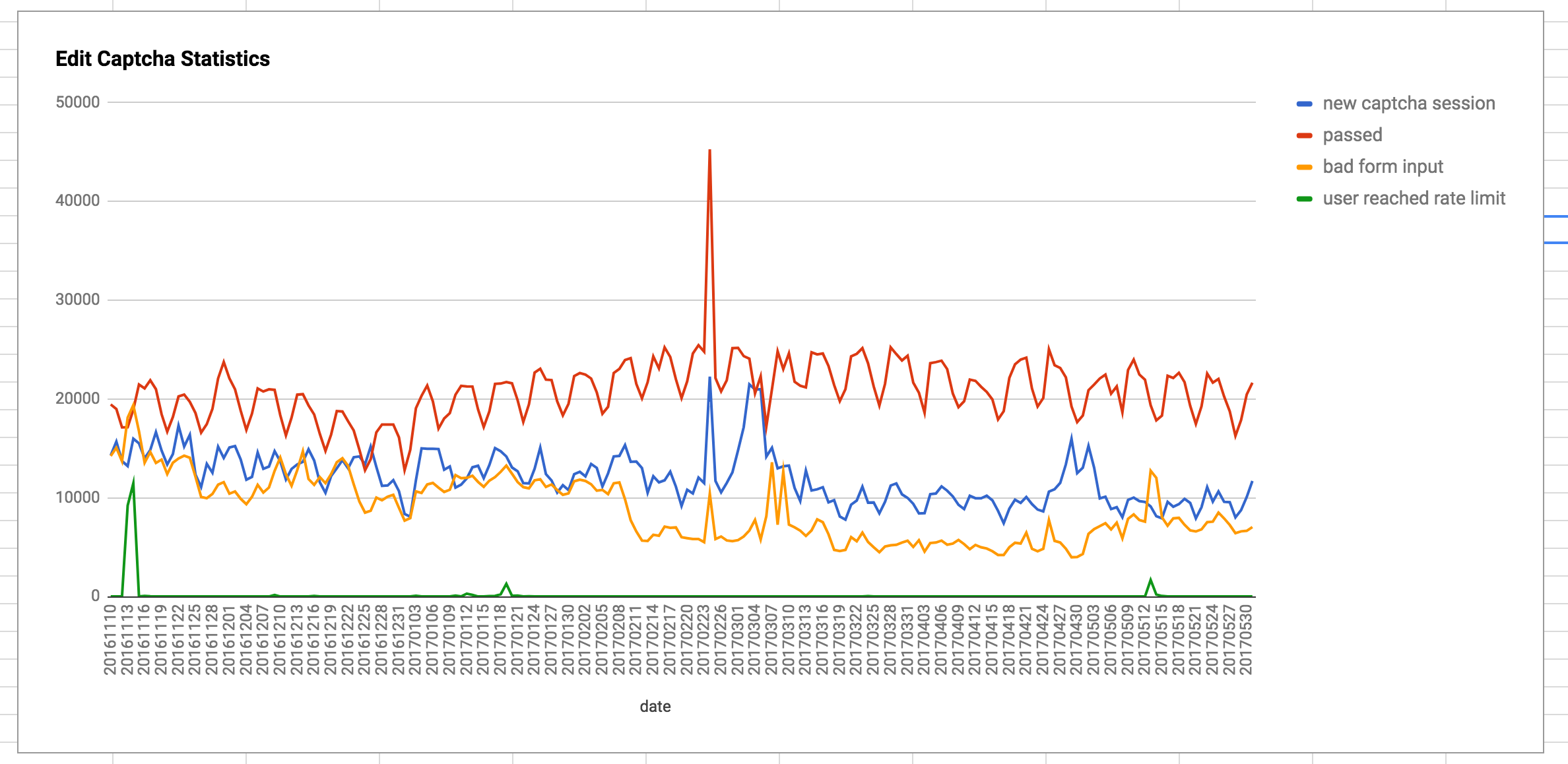

It would be useful to have some stats on CAPTCHA breakages, etc.

Logging currently done by ConfirmEdit:

Usages of method `log` (4 method calls found, all in MediaWiki `extensions/ConfirmEdit/SimpleCaptcha/Captcha.php`, class `SimpleCaptcha`):

`passCaptcha` (3 usages found):

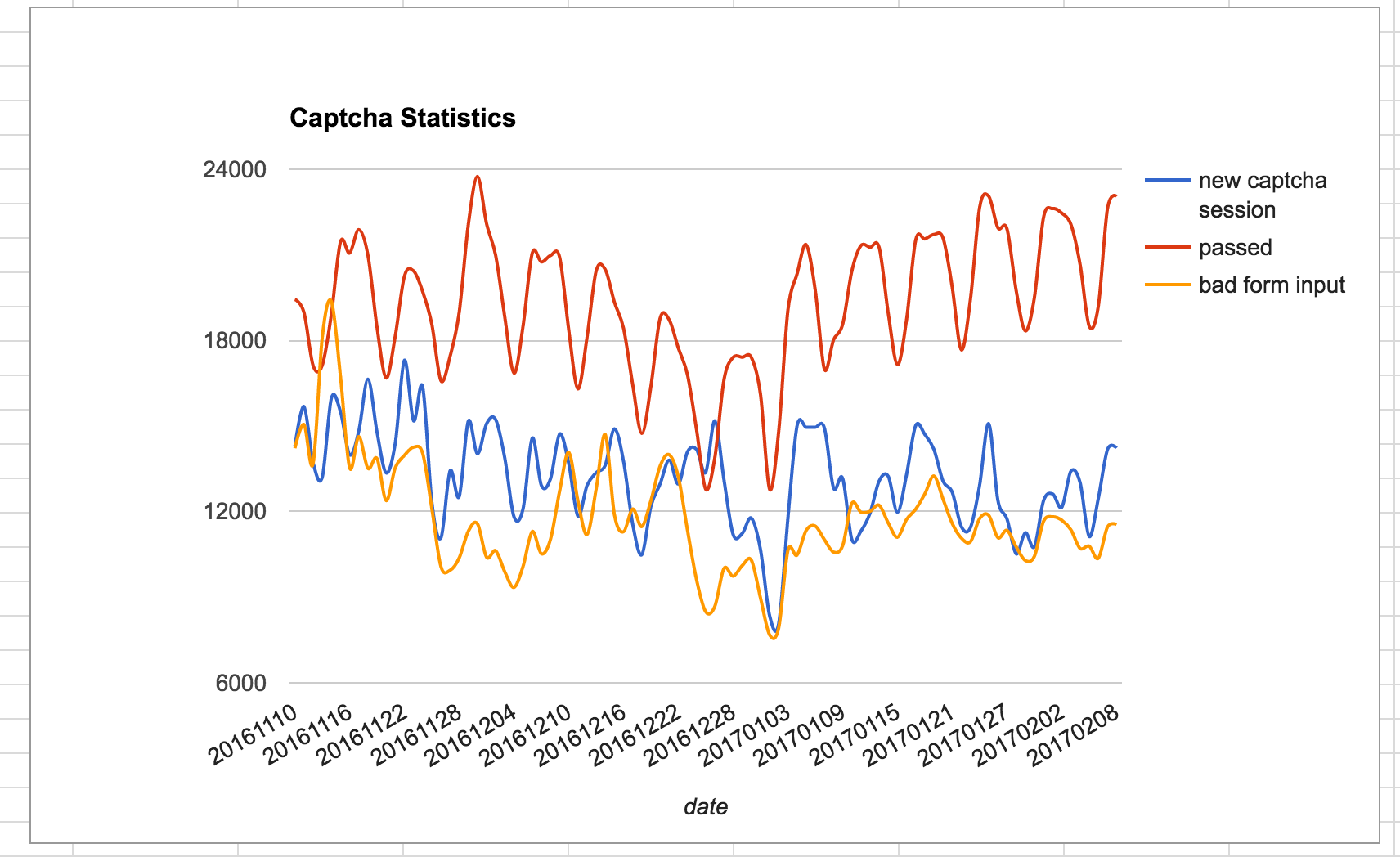

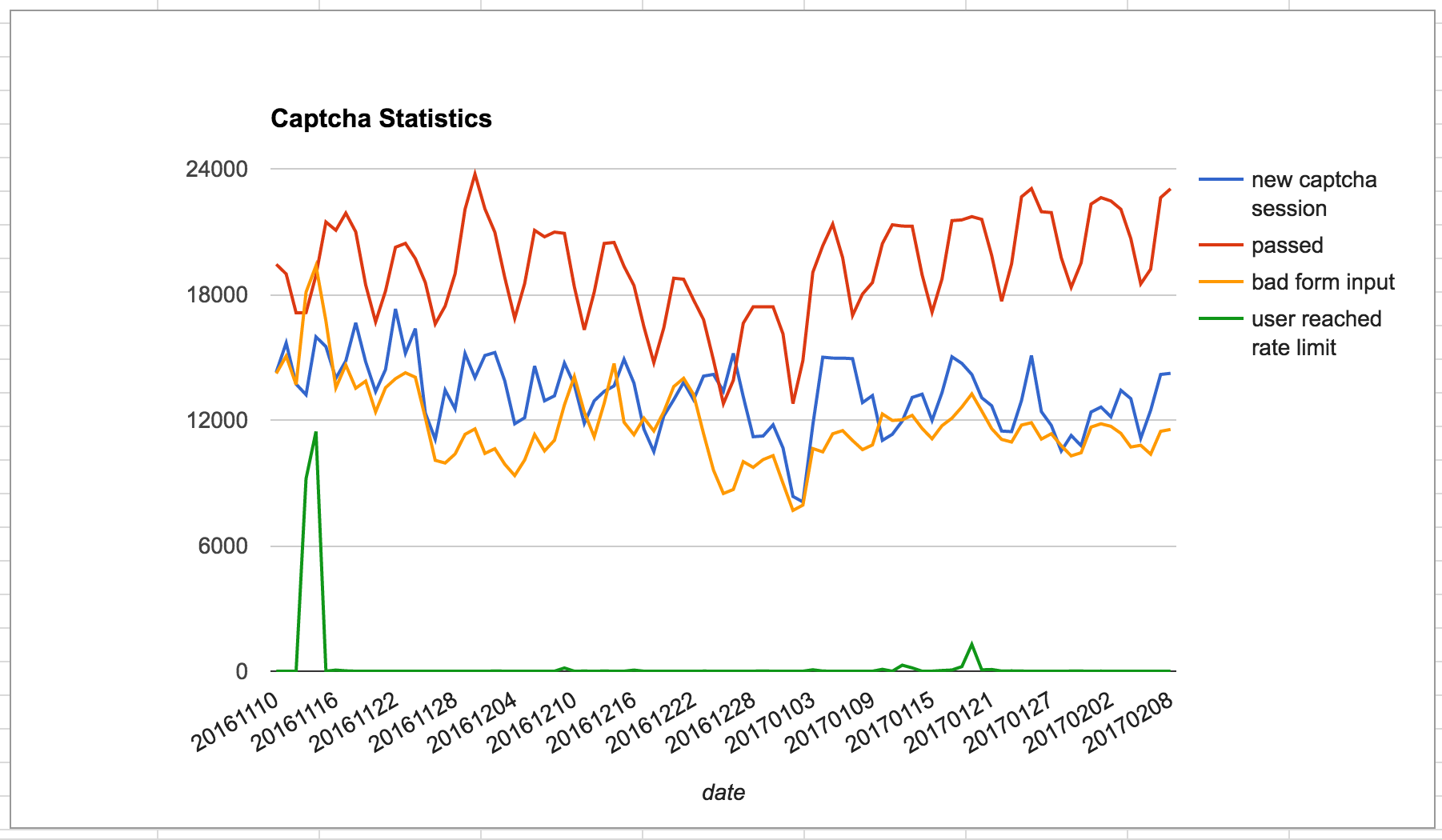

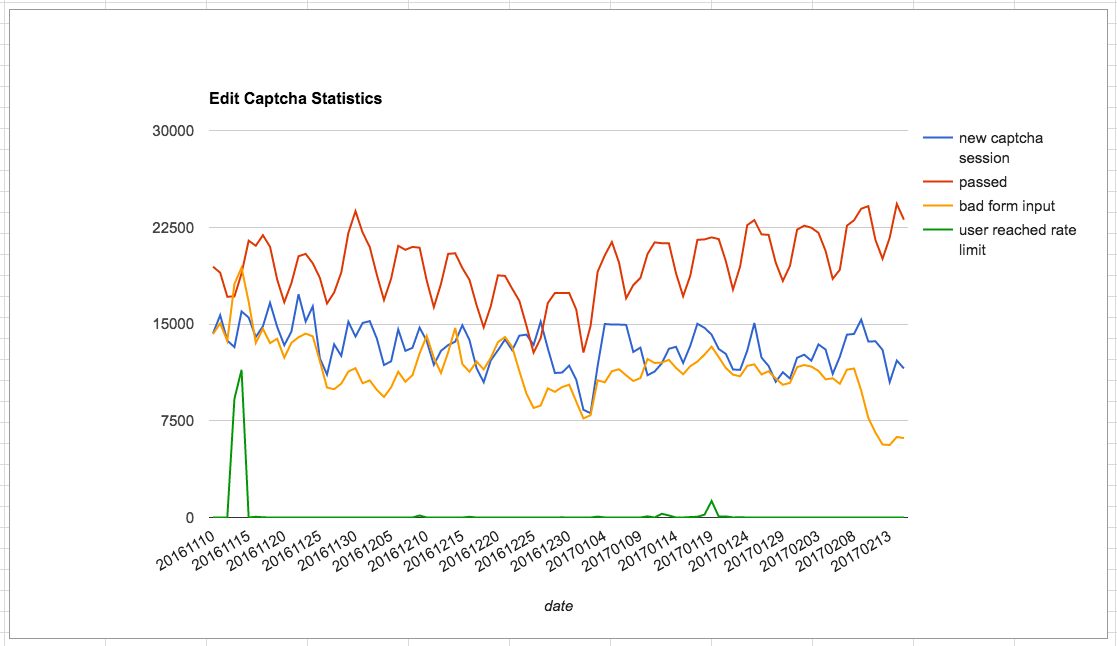

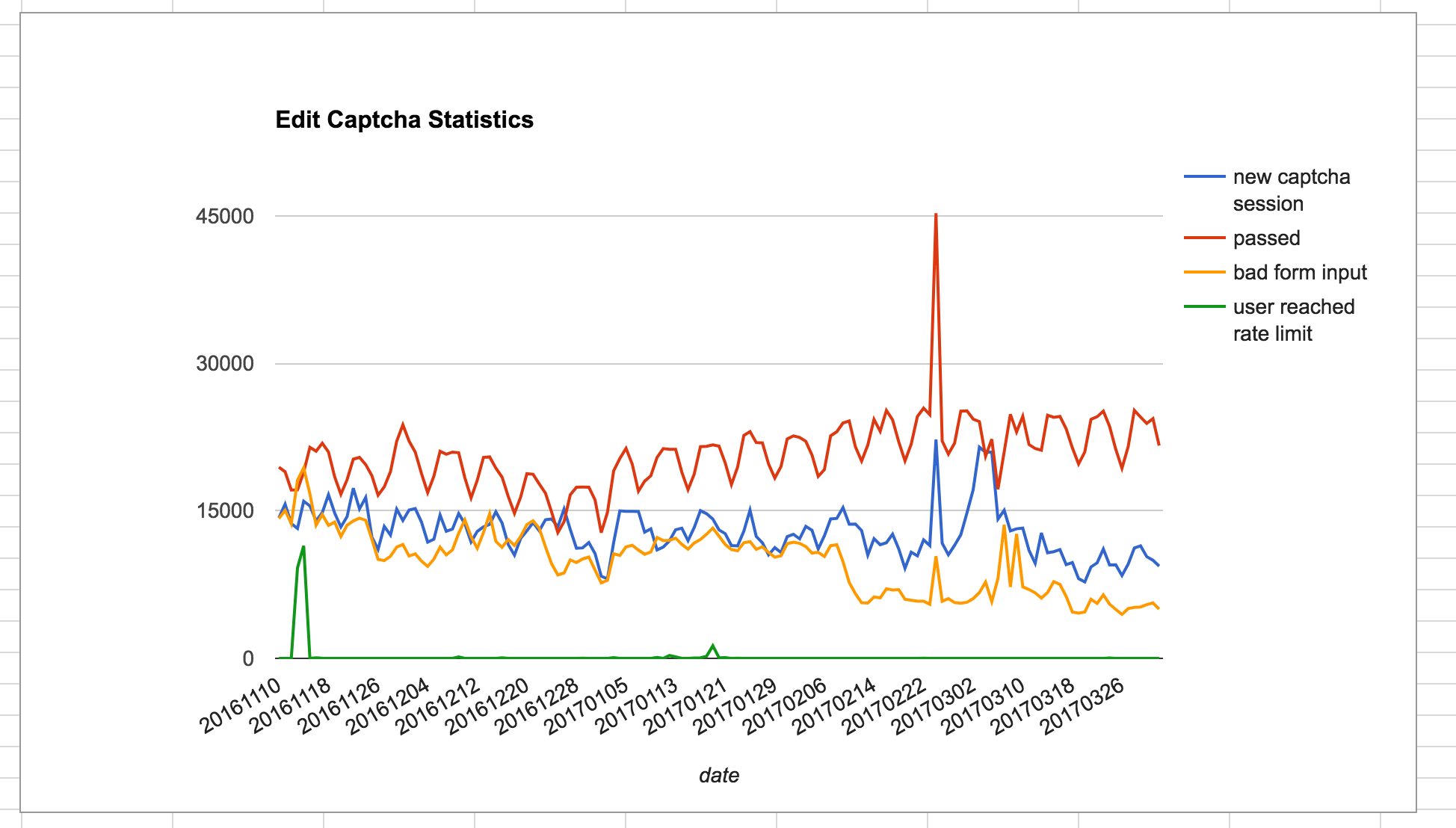

- line 1165: `$this->log( "passed" );`
- line 1171: `$this->log( "bad form input" );`
- line 1176: `$this->log( "new captcha session" );`

`passCaptchaLimited` (1 usage found):

- line 1123: `$this->log( 'User reached RateLimit, preventing action.' );`
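As a rough illustration of what stats could be built from the messages listed above, here is a minimal PHP sketch. It assumes each of these messages ends up as one line in a captcha debug log; the exact line format, the `CAPTCHA_LOG_MESSAGES` constant, and the helpers `tallyCaptchaOutcomes()` and `captchaPassRatio()` are all hypothetical, not part of ConfirmEdit. It counts each message and treats the three `passCaptcha` messages as the possible outcomes of a single check.

```php
<?php
// Hypothetical sketch: turn ConfirmEdit captcha log messages into simple counts.
// Assumes each log line contains at most one of the messages passed to
// SimpleCaptcha::log(); the surrounding line format is an assumption.

const CAPTCHA_LOG_MESSAGES = [
	'passed',
	'bad form input',
	'new captcha session',
	'User reached RateLimit, preventing action.',
];

/**
 * Count how many log lines contain each known message.
 *
 * @param string[] $lines Raw log lines (format assumed)
 * @return array<string,int> message => count
 */
function tallyCaptchaOutcomes( array $lines ): array {
	$counts = array_fill_keys( CAPTCHA_LOG_MESSAGES, 0 );
	foreach ( $lines as $line ) {
		foreach ( CAPTCHA_LOG_MESSAGES as $message ) {
			if ( strpos( $line, $message ) !== false ) {
				$counts[$message]++;
				break; // count each line at most once
			}
		}
	}
	return $counts;
}

/**
 * Share of passCaptcha() outcomes that were "passed"; null if none were logged.
 *
 * @param array<string,int> $counts Output of tallyCaptchaOutcomes()
 */
function captchaPassRatio( array $counts ): ?float {
	$attempts = $counts['passed'] + $counts['bad form input'] + $counts['new captcha session'];
	return $attempts > 0 ? $counts['passed'] / $attempts : null;
}
```

The rate-limit message is counted but left out of the ratio, since it is logged by `passCaptchaLimited` rather than `passCaptcha`.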