We finally got a continual fullstack instance lifecycle test going in https://gerrit.wikimedia.org/r/#/c/339651/ but it has turned up an oddity where instance creation part of the time takes a long time. Originally, the allowed threshold was 240s and I upped that to 480s as we were leaking 3 instances in half a day. We are still violating the 480s threshold over the course of 2 days. Either we need to agree that it's OK this takes more than 8 minutes periodically or figure out why it does and mitigate. At the moment I am under the impression 8 minutes to create an instance often (where creation is defined as it being returned as active via the API) is probably a sign of deeper issues.

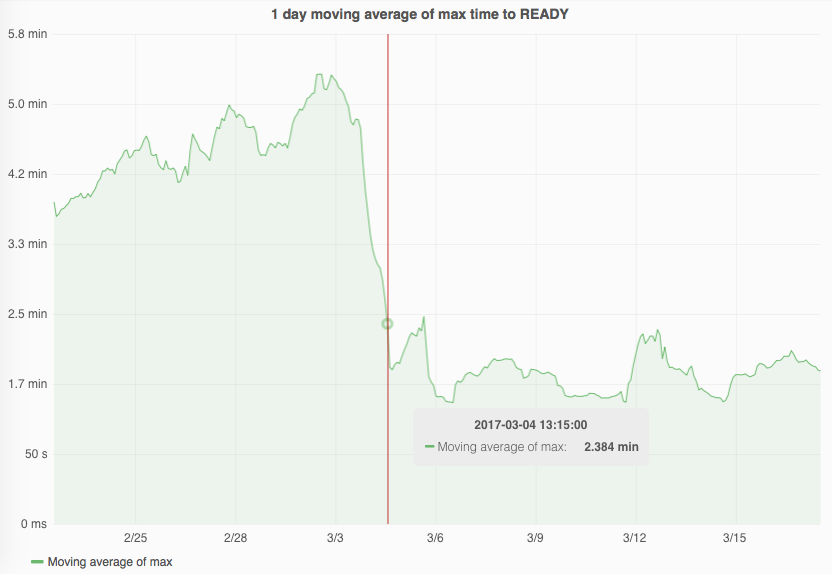

@hashar created a grafana board for this service https://grafana.wikimedia.org/dashboard/db/labs-nova-fullstack

Graphite direct comparison of creation vs fullstack test times where creation is definitely the consistent problem child

This morning I am cleaning up 3 instances since yesterday afternoon that took >480s causing the max_pool param 3 to cause the service itself to cease and alert. When I spot checked them now hours later they are fine.

+--------------------------------------+-----------------------+--------+---------------------+ | ID | Name | Status | Networks | +--------------------------------------+-----------------------+--------+---------------------+ | b051e079-a8b8-4191-832e-39072c3a83fa | fullstackd-1488405544 | ACTIVE | public=10.68.16.43 | | 3d937088-1d8f-4e5b-ae34-d87535fc4c2b | fullstackd-1488372409 | ACTIVE | public=10.68.18.191 | | 210422be-69ee-4a06-90d6-9af162e93773 | fullstackd-1488327296 | ACTIVE | public=10.68.19.129 | +--------------------------------------+-----------------------+--------+---------------------+