As of 1600 UTC on June 13th, errors have increased. Not overload and not timeout errors. Seems to only have affected EQIAD. Not correlated to deployment.

Description

Related Objects

Event Timeline

I'm seeing lots of these in the logs

Traceback (most recent call last):

File "/srv/deployment/ores/venv/lib/python3.4/site-packages/flask/app.py", line 1817, in wsgi_app

response = self.full_dispatch_request()

File "/srv/deployment/ores/venv/lib/python3.4/site-packages/flask/app.py", line 1477, in full_dispatch_request

rv = self.handle_user_exception(e)

File "/srv/deployment/ores/venv/lib/python3.4/site-packages/flask/app.py", line 1381, in handle_user_exception

reraise(exc_type, exc_value, tb)

File "/srv/deployment/ores/venv/lib/python3.4/site-packages/flask/_compat.py", line 33, in reraise

raise value

File "/srv/deployment/ores/venv/lib/python3.4/site-packages/flask/app.py", line 1475, in full_dispatch_request

rv = self.dispatch_request()

File "/srv/deployment/ores/venv/lib/python3.4/site-packages/flask/app.py", line 1461, in dispatch_request

return self.view_functions[rule.endpoint](**req.view_args)

File "./ores/wsgi/preprocessors.py", line 27, in nocache_route

response = make_response(route(*args, **kwargs))

File "./ores/wsgi/preprocessors.py", line 14, in minifiable_route

response = route(*args, **kwargs)

File "./ores/wsgi/routes/v1/scores.py", line 52, in score_model_revisions

score_request = build_score_request(scoring_system, request, context)

File "./ores/wsgi/util.py", line 87, in build_score_request

rev_ids = parse_rev_ids(request, rev_id)

File "./ores/wsgi/util.py", line 108, in parse_rev_ids

return read_bar_split_param(request, "revids", type=int)

File "./ores/wsgi/util.py", line 57, in read_bar_split_param

.format(param, str(e)))

ores.wsgi.util.ParamError: Could not interpret revids. invalid literal for int() with base 10: 'last'

Exception on /scores/itwiki/ [GET]

Traceback (most recent call last):

File "./ores/wsgi/util.py", line 54, in read_bar_split_param

return [type(value) for value in values.split("|")]

File "./ores/wsgi/util.py", line 54, in <listcomp>

return [type(value) for value in values.split("|")]

ValueError: invalid literal for int() with base 10: 'last'This looks like a user-error. For some reason, we're getting requests with "?revids=last". I'm downgrading this to "high" priority.

I can't seem to get a single request to go through on scb1001.

halfak@scb1001:~$ wget 'localhost:8081/v2/scores/ruwiki/?models=reverted%7Cdamaging%7Cgoodfaith&revids=85958727&format=json' -O-

--2017-06-13 18:22:21-- http://localhost:8081/v2/scores/ruwiki/?models=reverted%7Cdamaging%7Cgoodfaith&revids=85958727&format=json

Resolving localhost (localhost)... ::1, 127.0.0.1

Connecting to localhost (localhost)|::1|:8081... failed: Connection refused.

Connecting to localhost (localhost)|127.0.0.1|:8081... connected.

HTTP request sent, awaiting response... 500 INTERNAL SERVER ERROR

2017-06-13 18:22:21 ERROR 500: INTERNAL SERVER ERROR.

halfak@scb1001:~$ wget 'localhost:8081/v2/scores/ruwiki/?models=reverted%7Cdamaging%7Cgoodfaith&revids=85958727&format=json' -O-

--2017-06-13 18:22:23-- http://localhost:8081/v2/scores/ruwiki/?models=reverted%7Cdamaging%7Cgoodfaith&revids=85958727&format=json

Resolving localhost (localhost)... ::1, 127.0.0.1

Connecting to localhost (localhost)|::1|:8081... failed: Connection refused.

Connecting to localhost (localhost)|127.0.0.1|:8081... connected.

HTTP request sent, awaiting response... 200 OK

Length: 436 [application/json]

Saving to: ‘STDOUT’

- 0%[ ] 0 --.-KB/s {"scores": {"ruwiki": {"damaging": {"scores": {"85958727": {"error": {"message": "Timed out after 15 seconds.", "type": "TimeoutError"}}}, "version": "0.3.0"}, "goodfaith": {"scores": {"85958727": {"error": {"message": "Timed out after 15 seconds.", "type": "TimeoutError"}}}, "version": "0.3.0"}, "reverted": {"scores": {"85958727": {"error": {"message": "Timed out after 15 seconds.", "typ- 100%[===========================================================================================================>] 436 --.-KB/s in 0s

2017-06-13 18:23:08 (69.0 MB/s) - written to stdout [436/436]The logs in /srv/log/ores/app.log don't show anything for the 500 response.

The log hasn't been written to in an hour:

~$ ls -alh /srv/log/ores/app.log -rw-r--r-- 1 www-data www-data 6.3K Jun 13 17:54 /srv/log/ores/app.log

I can:

ladsgroup@scb1001:~$ wget 'localhost:8081/v2/scores/ruwiki/?models=reverted%7Cdamaging%7Cgoodfaith&revids=85958727&format=json' -O-

--2017-06-13 18:31:37-- http://localhost:8081/v2/scores/ruwiki/?models=reverted%7Cdamaging%7Cgoodfaith&revids=85958727&format=json

Resolving localhost (localhost)... ::1, 127.0.0.1

Connecting to localhost (localhost)|::1|:8081... failed: Connection refused.

Connecting to localhost (localhost)|127.0.0.1|:8081... connected.

HTTP request sent, awaiting response... 200 OK

Length: 489 [application/json]

Saving to: ‘STDOUT’

- 0%[ ] 0 --.-KB/s {"scores": {"ruwiki": {"damaging": {"scores": {"85958727": {"prediction": false, "probability": {"false": 0.6761220469324349, "true": 0.3238779530675651}}}, "version": "0.3.0"}, "goodfaith": {"scores": {"85958727": {"prediction": true, "probability": {"false": 0.2027760571206214, "true": 0.7972239428793786}}}, "version": "0.3.0"}, - 100%[=====================================================================================>] 489 --.-KB/s in 0s

2017-06-13 18:31:39 (102 MB/s) - written to stdout [489/489]

ladsgroup@scb1001:~$I genuinely have no idea what's going on here.

Mentioned in SAL (#wikimedia-operations) [2017-06-13T19:37:19Z] <Amir1> restarting ores-related services in scb1001 (T167819)

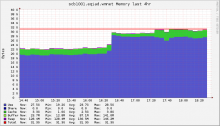

Everything is getting back up as the only node having problems (and putting strain on other nodes) was scb1001 and It's very likely that killing Electron-PDFs on that node which was taking too much CPU caused the node to get to full capacity.

This is number of edits it was able to work on:

Electron got killed in 19:24

12:59 < mutante> is it ok to lower from UBN then?

12:59 < Amir1> mutante: it's okay to resolve it I guess

See discussion of the issue and next steps here: https://lists.wikimedia.org/pipermail/wikitech-l/2017-June/088324.html