Problem

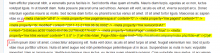

Saving a page, or switching to the source editor, from VisualEditor when the content is larger than ~10 kB (a long Lorem Ipsum text is enough) results in corrupted wikitext. Only the first ~10 kB of the text is kept. It is followed by some HTML tags (see below) and then by the first ~10 kB again. This pattern repeats until the original size is reached.
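Because the corruption follows a fixed pattern (the opening text reappears after the injected HTML), a saved page can be checked for it mechanically. The following is a minimal PHP sketch. It assumes the saved wikitext has been copied into a local file. `findRepeatOffset` and its 64-byte probe length are hypothetical choices and are not part of MediaWiki or VisualEditor.

```php
<?php
/**
 * Hypothetical diagnostic: returns the byte offset at which the opening
 * $probeLen bytes of $wikitext occur again, or null if they never repeat.
 * In the corrupted output described in this report, that offset is roughly
 * where the first ~10 kB chunk and the injected HTML end.
 * A legitimate page that repeats its own opening text would also match.
 *
 * @return int|null
 */
function findRepeatOffset(string $wikitext, int $probeLen = 64)
{
    if (strlen($wikitext) < 2 * $probeLen) {
        return null; // too short to contain the prefix twice
    }
    $probe = substr($wikitext, 0, $probeLen);
    // Search from offset 1 so the prefix does not match itself at offset 0.
    $pos = strpos($wikitext, $probe, 1);
    return $pos === false ? null : $pos;
}

// Usage: php find-repeat.php saved-wikitext.txt
if (PHP_SAPI === 'cli' && isset($argv[1])) {
    $text = file_get_contents($argv[1]);
    if ($text === false) {
        fwrite(STDERR, "Cannot read {$argv[1]}\n");
        exit(1);
    }
    $offset = findRepeatOffset($text);
    echo $offset === null
        ? "Opening text does not repeat\n"
        : "Opening text repeats at byte $offset\n";
}
```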

```html
<meta charset="utf-8" />
<meta property="mw:pageNamespace" content="0" />
<meta property="mw:pageId" content="1" />
<meta property="dc:modified" content="2017-12-13T15:01:56.000Z" />
<meta property="mw:revisionSHA1" content="5702e4d5fd9173246331a889294caf01a3ad3706" />
<meta property="isMainPage" content="true" />
<meta property="mw:html:version" content="1.6.0" />
[http://localhost/company/index.php?title=Main_Page]<title>Main Page</title><base href="http://localhost/company/index.php?title=" />[//localhost/company/load.php?modules=mediawiki.legacy.commonPrint%2Cshared%7Cmediawiki.skinning.content.parsoid%7Cmediawiki.skinning.interface%7Cskins.vector.styles%7Csite.styles%7Cext.cite.style%7Cext.cite.styles%7Cmediawiki.page.gallery.styles&only=styles&skin=vector]<!--[if lt IE 9]><script src="//localhost/company/load.php?modules=html5shiv&only=scripts&skin=vector&sync=1"></script><script>html5.addElements('figure-inline');</script><![endif]-->
```

Steps to reproduce

- Fresh installation of MW + working VisualEditor extension only

- Edit any page

- Add long Lorem ipsum text

- Save the page, or switch to the source editor

- The text is corrupted (repeated)
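The reproduction steps only need text comfortably larger than ~10 kB. Instead of copying Lorem Ipsum from a generator, such text could be produced with a small PHP script. `makeReproText` and its 12000-byte default are hypothetical choices made for this sketch.

```php
<?php
/**
 * Hypothetical helper: builds numbered Lorem Ipsum paragraphs until the
 * text is at least $minBytes long (default 12000, comfortably above the
 * ~10 kB threshold). Numbering makes a repeated chunk easy to spot.
 */
function makeReproText(int $minBytes = 12000): string
{
    $sentence = 'Lorem ipsum dolor sit amet, consectetur adipiscing elit.';
    $text = '';
    for ($i = 1; strlen($text) < $minBytes; $i++) {
        if ($text !== '') {
            $text .= "\n\n"; // separate paragraphs with a blank line
        }
        $text .= "Paragraph $i. " . rtrim(str_repeat($sentence . ' ', 5));
    }
    return $text;
}

// Usage: php make-repro-text.php --print > lorem.txt
if (PHP_SAPI === 'cli' && in_array('--print', $argv ?? [], true)) {
    echo makeReproText(), "\n";
}
```

The contents of `lorem.txt` can then be pasted into VisualEditor in the "Add long Lorem ipsum text" step.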

Environment

- Windows 10 / Windows Server 2008 R2+

- Node.js 8.9.3 LTS

- PHP 7.0.26

- Firefox / Chrome (latest versions)

- IIS

- MW 1.30 stable / 1.31 alpha from Git

- VisualEditor for 1.30 / for 1.31 from Git

- Parsoid 0.8.0

- DB engine for MW is SQLite

config.yaml for Parsoid

```yaml
worker_heartbeat_timeout: 300000

logging:
    level: info

services:
  - module: lib/index.js
    entrypoint: apiServiceWorker
    conf:
        mwApis:
        - uri: 'http://localhost/company/api.php'
          domain: 'company'
```

LocalSettings.php

```php
<?php
if ( !defined( 'MEDIAWIKI' ) ) {
	exit;
}

$wgSitename = "Company";
$wgScriptPath = "/company";
$wgServer = "http://localhost";
$wgResourceBasePath = $wgScriptPath;
$wgLogo = "$wgResourceBasePath/resources/assets/wiki.png";

$wgEnableEmail = true;
$wgEnableUserEmail = true;
$wgEmergencyContact = "apache@localhost";
$wgPasswordSender = "apache@localhost";
$wgEnotifUserTalk = false;
$wgEnotifWatchlist = false;
$wgEmailAuthentication = true;

$wgDBtype = "sqlite";
$wgDBserver = "";
$wgDBname = "wiki";
$wgDBuser = "";
$wgDBpassword = "";
$wgSQLiteDataDir = "C:\\Dev\\Web\\company\\App_Data";
$wgObjectCaches[CACHE_DB] = [
	'class' => 'SqlBagOStuff',
	'loggroup' => 'SQLBagOStuff',
	'server' => [
		'type' => 'sqlite',
		'dbname' => 'wikicache',
		'tablePrefix' => '',
		'dbDirectory' => $wgSQLiteDataDir,
		'flags' => 0
	]
];

$wgMainCacheType = CACHE_NONE;
$wgMemCachedServers = [];
$wgEnableUploads = false;
$wgUseInstantCommons = false;
$wgPingback = false;
$wgShellLocale = "C.UTF-8";
$wgLanguageCode = "en";
$wgSecretKey = "d85e1b836ff04d1075ffb06cd3ee7d64bcc99a10ee701587f68a909c548f1160";
$wgAuthenticationTokenVersion = "1";
$wgUpgradeKey = "9c4e319200f92f43";
$wgRightsUrl = "";
$wgRightsText = "";
$wgRightsIcon = "";
$wgDiff3 = "";

$wgDefaultSkin = "vector";
wfLoadSkin( 'Vector' );

wfLoadExtension( 'VisualEditor' );
$wgDefaultUserOptions['visualeditor-enable'] = 1;
$wgDefaultUserOptions['visualeditor-editor'] = "visualeditor";
$wgHiddenPrefs[] = 'visualeditor-enable';
$wgVisualEditorShowBetaWelcome = false;
$wgVisualEditorAvailableNamespaces = [
	NS_MAIN => true,
	NS_USER => true,
	102 => true,
	"_merge_strategy" => "array_plus"
];
$wgVirtualRestConfig['modules']['parsoid'] = array(
	'url' => 'http://localhost:8000',
	'domain' => 'company'
);
```
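One way to narrow down where the text gets duplicated is to send a large HTML document straight to Parsoid, bypassing VisualEditor and MediaWiki's virtual REST client. The sketch below assumes that Parsoid 0.8.0 exposes the route `POST /{domain}/v3/transform/html/to/wikitext` with an `html` form field. It reuses the `http://localhost:8000` URL and the `company` domain from the configuration above. The function names are hypothetical, and the request requires PHP's curl extension.

```php
<?php
/**
 * Hypothetical helper: URL of Parsoid's html -> wikitext transform,
 * assuming the v3 route layout /{domain}/v3/transform/html/to/wikitext.
 */
function parsoidTransformUrl(string $baseUrl, string $domain): string
{
    return rtrim($baseUrl, '/') . '/' . rawurlencode($domain)
        . '/v3/transform/html/to/wikitext';
}

/**
 * POSTs $html to Parsoid as a form field and returns the response body
 * (expected to be wikitext). Throws on transport errors or non-200 replies.
 */
function parsoidHtmlToWikitext(string $baseUrl, string $domain, string $html): string
{
    $ch = curl_init(parsoidTransformUrl($baseUrl, $domain));
    curl_setopt_array($ch, [
        CURLOPT_POST => true,
        CURLOPT_POSTFIELDS => http_build_query(['html' => $html]),
        CURLOPT_RETURNTRANSFER => true,
    ]);
    $body = curl_exec($ch);
    $error = curl_error($ch);
    $status = curl_getinfo($ch, CURLINFO_HTTP_CODE);
    curl_close($ch);
    if ($body === false || $status !== 200) {
        throw new RuntimeException("Parsoid request failed (HTTP $status) $error");
    }
    return $body;
}

// Usage: php parsoid-check.php --run
if (PHP_SAPI === 'cli' && in_array('--run', $argv ?? [], true)) {
    // 600 short paragraphs, about 20 kB of HTML, well above ~10 kB.
    $html = '<body>' . str_repeat('<p>Lorem ipsum dolor sit amet.</p>', 600) . '</body>';
    $wikitext = parsoidHtmlToWikitext('http://localhost:8000', 'company', $html);
    echo strpos($wikitext, '<meta') === false
        ? "No <meta> tags in Parsoid output\n"
        : "Parsoid output contains <meta> tags\n";
}
```

If Parsoid's direct output is clean, that would suggest the duplication happens on the way from VisualEditor/MediaWiki to Parsoid. If it already contains the `<meta>` tags, Parsoid itself is the more likely place to look.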

Example picture after save