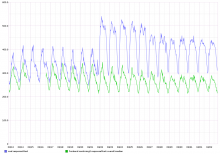

After deploying the new coal code, a few of our metrics have changed.

We didn't change the actual calculations, but we did change the logic that determines which data is included in (or excluded from) certain time windows. That's likely the cause, but we should understand why it had this effect on the metrics.
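To make the suspected mechanism concrete, here is a minimal, hypothetical sketch (not our actual code; `window_mean`, `include_end`, and the sample values are invented for illustration). It shows how an unchanged calculation can still produce a different result when only the window-inclusion rule changes:

```python
from datetime import datetime, timedelta


def window_mean(samples, start, end, include_end):
    """Mean of the values whose timestamp falls inside the window.

    The calculation (a plain mean) never changes; only the rule that
    decides whether a sample at exactly `end` belongs to the window does.
    """
    selected = [
        value
        for ts, value in samples
        if start <= ts and (ts <= end if include_end else ts < end)
    ]
    return sum(selected) / len(selected) if selected else None


# Hypothetical data: one sample sits exactly on the window boundary.
start = datetime(2024, 1, 1, 0, 0)
end = start + timedelta(hours=1)
samples = [
    (start, 10.0),
    (start + timedelta(minutes=30), 20.0),
    (end, 60.0),  # boundary sample
]

half_open = window_mean(samples, start, end, include_end=False)  # [start, end)
closed = window_mean(samples, start, end, include_end=True)      # [start, end]

print(half_open, closed)
```

If the real change is of this kind, comparing which rows fall into each window before and after the deploy should show where the metric differences come from.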