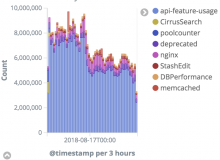

With T200960 (Logstash packet loss) essentially fixed, the daily indices have grown significantly. I don't think we currently have enough space to store 30 days' worth of logs (note the jump on Aug 01 below). I propose we shorten retention to 20 days until more hardware is available to expand the Elasticsearch cluster.
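To make the proposal concrete, here is a minimal sketch of which daily indices a 20-day retention window would drop. It assumes the `logstash-YYYY.MM.DD` naming visible in the listing and counts today as day 1 of the window. The helper names (`index_date`, `indices_to_delete`) are hypothetical and this is not the cluster's actual cleanup job.

```python
from datetime import date, timedelta
from typing import Iterable, List, Optional

INDEX_PREFIX = "logstash-"  # daily indices are named logstash-YYYY.MM.DD


def index_date(name: str) -> Optional[date]:
    """Return the date encoded in a logstash-YYYY.MM.DD index name, or None."""
    if not name.startswith(INDEX_PREFIX):
        return None
    try:
        # Raises ValueError on a wrong number of parts, non-numeric parts, or an invalid date.
        year, month, day = (int(part) for part in name[len(INDEX_PREFIX):].split("."))
        return date(year, month, day)
    except ValueError:
        return None


def indices_to_delete(names: Iterable[str], today: date, retention_days: int) -> List[str]:
    """Daily indices older than a window of `retention_days` days ending today (assumption: today is day 1)."""
    expired = []
    for name in names:
        d = index_date(name)
        if d is not None and (today - d).days >= retention_days:
            expired.append(name)
    return sorted(expired)


if __name__ == "__main__":
    # Index names from the listing: 2018.07.15 through 2018.08.15.
    names = [f"logstash-2018.07.{d:02d}" for d in range(15, 32)]
    names += [f"logstash-2018.08.{d:02d}" for d in range(1, 16)]
    # With today = 2018-08-15 this selects logstash-2018.07.15 through logstash-2018.07.26.
    print(indices_to_delete(names, date(2018, 8, 15), 20))
```

Under these assumptions, 20 indices (2018.07.27 to 2018.08.15) would remain.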

| health | status | index | uuid | pri | rep | docs.count | docs.deleted | store.size | pri.store.size |
|---|---|---|---|---|---|---|---|---|---|
| green | open | logstash-2018.07.15 | 8c9mHFHtSZSYpm4vfk6fIg | 1 | 2 | 22178907 | 0 | 51.6gb | 17.2gb |
| green | open | logstash-2018.07.16 | -MU2KyT5RniDmNNu_kFYJg | 1 | 2 | 21420726 | 0 | 56.8gb | 18.9gb |
| green | open | logstash-2018.07.17 | lQLJbkzSQLy1MU9ms7UIuA | 1 | 2 | 32341235 | 0 | 81.1gb | 27gb |
| green | open | logstash-2018.07.18 | zXogj42hT-SOyIbtH1eQyw | 1 | 2 | 41270961 | 0 | 120gb | 40gb |
| green | open | logstash-2018.07.19 | TWJQ3VVJShigNBqVG73GGA | 1 | 2 | 27917982 | 0 | 96.8gb | 32.2gb |
| green | open | logstash-2018.07.20 | fkKwqF2jTuSVCCowxI7Ojg | 1 | 2 | 29655604 | 0 | 77.2gb | 25.7gb |
| green | open | logstash-2018.07.21 | jZ_2VEwoRiK1jyWq_UT2Og | 1 | 2 | 37298191 | 0 | 93.2gb | 31gb |
| green | open | logstash-2018.07.22 | 1KK5nZabTEaf_TTKCrju1A | 1 | 2 | 35022762 | 0 | 86.1gb | 28.7gb |
| green | open | logstash-2018.07.23 | jAj_pgvvQgylJbmNDHcCJA | 1 | 2 | 29495638 | 0 | 73.3gb | 24.4gb |
| green | open | logstash-2018.07.24 | oRhOzOUzRMiqAVGdFslvKw | 1 | 2 | 28934880 | 0 | 69.6gb | 23.2gb |
| green | open | logstash-2018.07.25 | uwz1Q2vNSxeU9esiOTlNeA | 1 | 2 | 28811814 | 0 | 71gb | 23.6gb |
| green | open | logstash-2018.07.26 | Crs7ovg4SH6hk2HQBeH9OQ | 1 | 2 | 33923331 | 0 | 79.6gb | 26.5gb |
| green | open | logstash-2018.07.27 | 7fd6Zr1VQeKcl9mKcbPFng | 1 | 2 | 37867909 | 0 | 87.4gb | 29.1gb |
| green | open | logstash-2018.07.28 | TmvU5E17Q6iUyX2-9kShCA | 1 | 2 | 36741805 | 0 | 81.5gb | 27.1gb |
| green | open | logstash-2018.07.29 | _kgX0vPwSYOkuUIySLy05g | 1 | 2 | 37386064 | 0 | 84.2gb | 28.1gb |
| green | open | logstash-2018.07.30 | nHBNpuCmTAuAZf0UC4VMsQ | 1 | 2 | 35410644 | 0 | 89.6gb | 29.8gb |
| green | open | logstash-2018.07.31 | lbu-c5FyQbGF5GJCFsZEGg | 1 | 2 | 22007146 | 0 | 58.3gb | 19.4gb |
| green | open | logstash-2018.08.01 | Jn8Y6yDLTIW7s0jWcPjLfQ | 1 | 2 | 18820037 | 0 | 50.3gb | 16.7gb |
| green | open | logstash-2018.08.02 | nisbJJqVQ6iNfsq6xifjdw | 1 | 2 | 60243114 | 0 | 176.2gb | 58.7gb |
| green | open | logstash-2018.08.03 | OC_TRPReTvWkUpCv36pvlw | 1 | 2 | 118656863 | 0 | 316.3gb | 105.4gb |
| green | open | logstash-2018.08.04 | g1PXmAZ0S366Pj5xjSZfYQ | 1 | 2 | 111150066 | 0 | 294.2gb | 98.1gb |
| green | open | logstash-2018.08.05 | wN5QsRQDSDyJRqhrgk7G3Q | 1 | 2 | 106689339 | 0 | 282.4gb | 94.1gb |
| green | open | logstash-2018.08.06 | 5_x2JVeGSjOnJJ6Gub4f4Q | 1 | 2 | 103911287 | 0 | 277.8gb | 92.7gb |
| green | open | logstash-2018.08.07 | guKiDaenRMK1IZhI4sqWHA | 1 | 2 | 119193259 | 0 | 321gb | 107gb |
| green | open | logstash-2018.08.08 | eoSdWMRpTaqZkvfe3FR9Eg | 1 | 2 | 121122893 | 0 | 326.8gb | 108.9gb |
| green | open | logstash-2018.08.09 | MZgVZRY_SmuLZCsO7g3NPQ | 1 | 2 | 117946182 | 0 | 333.2gb | 111.1gb |
| green | open | logstash-2018.08.10 | UoG_FVTXRASrkUy41Lo5Ag | 1 | 2 | 108333043 | 0 | 280.7gb | 93.6gb |
| green | open | logstash-2018.08.11 | DvR_MOmjQEOvseB3darusg | 1 | 2 | 104247074 | 0 | 268.9gb | 89.7gb |
| green | open | logstash-2018.08.12 | lHfTcTi1Qzut47ZMqSb8JQ | 1 | 2 | 103329426 | 0 | 266.8gb | 88.9gb |
| green | open | logstash-2018.08.13 | Ums0SeWlRGul9DMZIIOa6g | 1 | 2 | 106676870 | 0 | 277.9gb | 92.7gb |
| green | open | logstash-2018.08.14 | ikPxvshpSvOZM5bKjAPtKQ | 1 | 2 | 94564331 | 0 | 253.8gb | 84gb |
| green | open | logstash-2018.08.15 | 57JjETJCRhiuphm42oHDng | 1 | 2 | 3 | 0 | 299.1kb | 99.7kb |
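As a rough back-of-the-envelope check on the numbers above, the sketch below averages `store.size` (which counts the primary plus both replicas, so it is the cluster-wide footprint) over the full post-fix days 2018.08.03 to 2018.08.14 and scales it to 20 and 30 days. It assumes daily volume stays at that average and treats the `_cat` suffixes as powers of 1024; the function names are hypothetical. Cluster capacity isn't stated here, so the sketch stops at the footprint.

```python
import re
from statistics import mean

# Multipliers for the unit suffixes printed by the _cat APIs (powers of 1024).
_UNITS = {"b": 1, "kb": 1024, "mb": 1024**2, "gb": 1024**3, "tb": 1024**4, "pb": 1024**5}


def size_to_gb(size: str) -> float:
    """Convert a _cat size string such as '316.3gb' or '299.1kb' to gigabytes."""
    m = re.fullmatch(r"([0-9]+(?:\.[0-9]+)?)([a-z]+)", size.strip().lower())
    if m is None or m.group(2) not in _UNITS:
        raise ValueError(f"unrecognised size: {size!r}")
    return float(m.group(1)) * _UNITS[m.group(2)] / _UNITS["gb"]


# store.size values from the listing for 2018.08.03 through 2018.08.14.
POST_FIX_STORE_SIZES = [
    "316.3gb", "294.2gb", "282.4gb", "277.8gb", "321gb", "326.8gb",
    "333.2gb", "280.7gb", "268.9gb", "266.8gb", "277.9gb", "253.8gb",
]


def retention_footprint_gb(daily_sizes, retention_days: int) -> float:
    """Estimated disk for `retention_days` daily indices, assuming each day matches the average."""
    return mean(size_to_gb(s) for s in daily_sizes) * retention_days


if __name__ == "__main__":
    for days in (20, 30):
        print(f"{days} days: ~{retention_footprint_gb(POST_FIX_STORE_SIZES, days):,.0f} GB")
```

With an average of about 291.65 GB per day, this puts 20 days at roughly 5.8 TB and 30 days at roughly 8.7 TB, before counting the smaller pre-fix indices still on disk.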