This will be implemented as an app on Toolforge (https://tools.wmflabs.org/sonarqubebot/).

Implementation:

- Run an application that listens for POST requests from SonarQube

- Validate that the requests come from SonarQube and not from an arbitrary third party

- Create a SonarQubeBot user in Gerrit

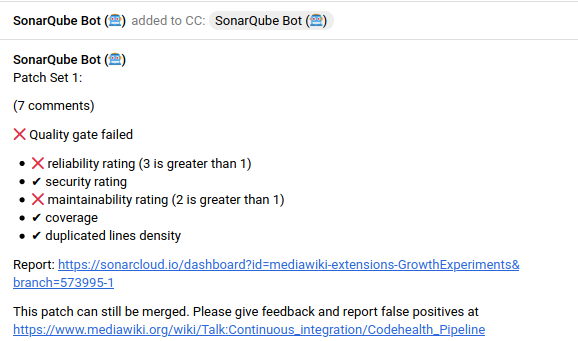

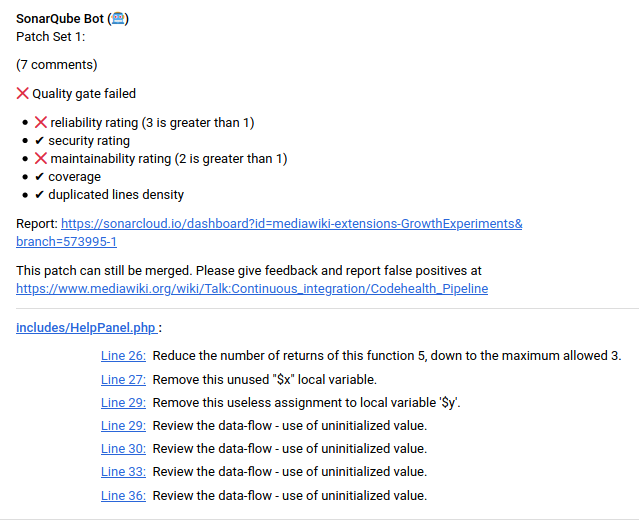

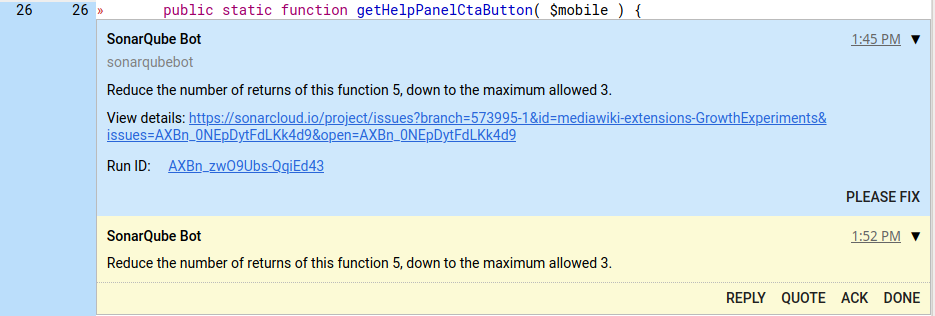

- Craft a comment and post it to the Gerrit patchset
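
The validation and comment-crafting steps could look roughly like the sketch below. It is not the actual bot code. It assumes a webhook secret is configured in SonarQube, so that each request carries an `X-Sonar-Webhook-HMAC-SHA256` header with the hex HMAC-SHA256 digest of the request body. The function names `is_valid_signature` and `build_review_input` are hypothetical, as is the wording of the comment. Mapping an analysis back to a specific Gerrit change and patchset is not shown.

```python
import hashlib
import hmac
import json

# Header SonarQube sets when a webhook secret is configured.
SIGNATURE_HEADER = "X-Sonar-Webhook-HMAC-SHA256"


def is_valid_signature(body: bytes, received_signature: str, secret: str) -> bool:
    """Return True if the received signature matches the HMAC-SHA256 of the raw body."""
    expected = hmac.new(secret.encode("utf-8"), body, hashlib.sha256).hexdigest()
    # compare_digest does a constant-time comparison to avoid timing leaks.
    return hmac.compare_digest(
        expected.encode("utf-8"), (received_signature or "").encode("utf-8")
    )


def build_review_input(payload: dict) -> dict:
    """Build a Gerrit ReviewInput-style body (hypothetical wording) from a webhook payload."""
    gate = payload.get("qualityGate", {}).get("status", "UNKNOWN")
    project = payload.get("project", {})
    if gate == "OK":
        verdict = "passed"
    elif gate == "ERROR":
        verdict = "failed"
    else:
        verdict = "returned " + gate
    lines = [f"SonarQube quality gate {verdict} for {project.get('name', 'unknown project')}."]
    if project.get("url"):
        lines.append(f"Report: {project['url']}")
    return {"message": "\n".join(lines)}
```

The app would reject any request whose signature does not validate, then send the resulting JSON as the SonarQubeBot user to Gerrit's set-review endpoint (`POST /a/changes/{change-id}/revisions/{revision-id}/review`).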