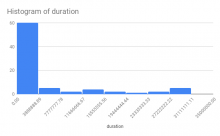

For the purposes of measuring our impact on reducing CheckUser investigation times, let's get some numbers for the existing time taken to resolve a case.

As a rough approximation, treat as a single case all entries in the CU log:

- checked by the same user (actor)

- having the same "reason" field string

Compute the "time taken" for each case by subtracting the timestamp of its first entry from the timestamp of its last entry, with the log read in chronological order.
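The approximation above can be sketched in Python as follows. This is only an illustration, not an agreed implementation: it assumes the log rows have already been fetched as `(actor, reason, timestamp)` tuples, and the helper names (`parse_mw_timestamp`, `case_durations`, `average_duration`) are hypothetical. Timestamps are assumed to be in the 14-digit `YYYYMMDDHHMMSS` form that MediaWiki uses in its database.

```python
from datetime import datetime, timedelta


def parse_mw_timestamp(value):
    """Parse a 14-digit MediaWiki-style timestamp (assumed input format)."""
    return datetime.strptime(value, "%Y%m%d%H%M%S")


def case_durations(rows, since=None):
    """Group log rows into cases and return the time taken per case.

    rows:  iterable of (actor, reason, timestamp_string) tuples (assumed shape).
    since: optional datetime; rows older than this are ignored.
    A case is every row sharing the same (actor, reason) pair.
    """
    first_last = {}
    for actor, reason, raw_ts in rows:
        ts = parse_mw_timestamp(raw_ts)
        if since is not None and ts < since:
            continue
        key = (actor, reason)
        if key in first_last:
            first, last = first_last[key]
            first_last[key] = (min(first, ts), max(last, ts))
        else:
            first_last[key] = (ts, ts)
    # Time taken = last entry timestamp minus first entry timestamp.
    return {key: last - first for key, (first, last) in first_last.items()}


def average_duration(durations):
    """Mean time taken across all cases, or None if there are no cases."""
    if not durations:
        return None
    return sum(durations.values(), timedelta(0)) / len(durations)


if __name__ == "__main__":
    # Made-up sample rows, for illustration only.
    sample = [
        (1, "sock check", "20200101100000"),
        (1, "sock check", "20200101103000"),
        (1, "sock check", "20200101120000"),
        (2, "sock check", "20200101090000"),
    ]
    print(average_duration(case_durations(sample)))
```

Note that under this definition a case with a single log entry has a time taken of zero, which pulls the average down. Whether such cases should be excluded is an open question for the analysis.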

Generate the average time taken to resolve a case over the last year of logs for the following wikis (from T236279):

- eswiki

- zhwiki

- fawiki

- enwiki

- ukwiki

- viwiki

- commons

- enwikibooks