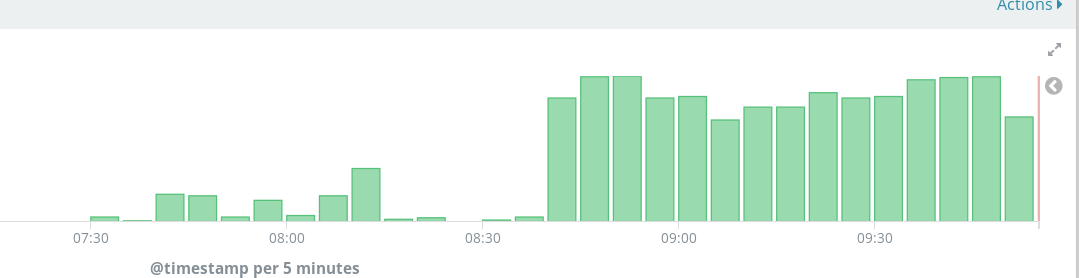

Before 2019-10-08T00:00:02 there were almost no Wikidata deadlocks. Since then there have been 650-1250 per hour, at a relatively consistent rate:

https://logstash.wikimedia.org/goto/654dbe1fd799f62f062798cb82e12e23

Happening when executing:

INSERT INTO `wbt_item_terms` (wbit_item_id,wbit_term_in_lang_id) VALUES ('XXXX

There is no apparent version or configuration change at that time. It could have started at the same time as the dumps or another automated process.

The rate is not of immediate concern, but I am reporting it because it could be a regression from the near-zero rate we recently achieved.

Todo

As discussed in the Wikidata team, we will do the following (in order):

- Limit writing to both stores to items up to Q70m for the moment (the highest existing item ID is currently above 70,202,000). This will stop writes to the new store for newly created pages. Those pages receive more traffic and are probably the biggest contributor to this increase in deadlocks.

- Read through https://www.mediawiki.org/wiki/Database_transactions to find out whether there are features we can use to improve the locking situation when we write to the new store.

- Prioritize resolving the tasks blocking the switch to reading from the new store (T232132: Add EntitiesWithoutTermFinder for the new store, T232040: Add label and description collision detectors for new terms store and T232393: Find out why rebuilding some items in new term store failed). Then start writing to and reading from the new store for property terms and a chunk of item terms (maybe up to Q10m).

- Later, check whether we can optimize (reduce the number of) writes we make to the new store.
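The write-side steps above can be sketched roughly as follows: the Q70m cutoff and reducing the number of writes to the new store. This is a minimal, hypothetical Python illustration, not Wikibase's implementation. The constant `WRITE_BOTH_MAX_ITEM_ID`, the functions `should_write_to_new_store` and `plan_term_writes`, and the reading of "Q70m" as numeric item IDs up to 70,000,000 are all assumptions. The sketch writes only the `wbt_item_terms` rows that actually change. It also emits rows in a consistent key order, a general technique for reducing lock-order deadlocks that is included here as an assumption rather than a team decision.

```python
"""Hypothetical sketch of gated, minimal writes to the new term store.

None of these names come from Wikibase; they are illustrative assumptions.
"""

from typing import Iterable, List, Set, Tuple

# Assumption: "Q70m" means numeric item IDs up to 70,000,000.
WRITE_BOTH_MAX_ITEM_ID = 70_000_000

Row = Tuple[int, int]  # (wbit_item_id, wbit_term_in_lang_id)


def should_write_to_new_store(item_id: int,
                              max_item_id: int = WRITE_BOTH_MAX_ITEM_ID) -> bool:
    """Return True if the item is still inside the range written to both stores."""
    return item_id <= max_item_id


def plan_term_writes(item_id: int,
                     existing: Iterable[int],
                     desired: Iterable[int]) -> Tuple[List[Row], List[Row]]:
    """Compute only the inserts and deletes needed for one item's term rows.

    Items above the cutoff get no new-store writes at all. For the others,
    unchanged rows are skipped instead of being rewritten. Rows are sorted so
    that concurrent writers touch keys in the same order, which can reduce
    (not eliminate) lock-order deadlocks.
    """
    if not should_write_to_new_store(item_id):
        return [], []
    existing_set: Set[int] = set(existing)
    desired_set: Set[int] = set(desired)
    to_insert = sorted((item_id, term) for term in desired_set - existing_set)
    to_delete = sorted((item_id, term) for term in existing_set - desired_set)
    return to_insert, to_delete


if __name__ == "__main__":
    # Item 42 currently has term_in_lang IDs 1, 2, 3 and should end up with 2, 3, 4.
    inserts, deletes = plan_term_writes(42, existing=[1, 2, 3], desired=[2, 3, 4])
    print(inserts)  # [(42, 4)]
    print(deletes)  # [(42, 1)]
    # An item above the cutoff produces no new-store writes.
    print(plan_term_writes(70_202_001, existing=[1], desired=[2]))  # ([], [])
```

Diffing against the existing rows trades one extra read per item for fewer writes. Whether that trade is worthwhile under the current lock contention would need to be measured.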