Event Timeline

Looks like celery has shut down on all of the workers. I'm looking into it now.

I think we might be too close to the memory ceiling and an OOM is what's killing them.

That said, when I restart celery, available memory never drops below ~5 GB (out of 8 GB), so it doesn't look like we're *really* running out of memory. Could there be another reason we see:

```
MemoryError: [Errno 12] Cannot allocate memory
```
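One way to double-check the ~5 GB figure is to read `MemAvailable` directly from `/proc/meminfo` while celery starts. This is a minimal sketch assuming a Linux host; `parse_meminfo`, `available_gib` and `is_enomem` are hypothetical helpers, not part of ORES or celery. `is_enomem` only tests whether an exception carries errno 12 (`ENOMEM`), the code behind the "Cannot allocate memory" message above.

```python
import errno
import os

KIB_PER_GIB = 1024 * 1024


def parse_meminfo(text):
    """Parse /proc/meminfo-style text into {field_name: integer value}."""
    fields = {}
    for line in text.splitlines():
        name, sep, rest = line.partition(":")
        parts = rest.split()
        if sep and parts:
            # Most values are reported in kB (really KiB); HugePages_* are plain counts.
            fields[name.strip()] = int(parts[0])
    return fields


def available_gib(fields):
    """Return (MemAvailable, MemTotal) converted from KiB to GiB."""
    return fields["MemAvailable"] / KIB_PER_GIB, fields["MemTotal"] / KIB_PER_GIB


def is_enomem(exc):
    """True if the exception carries errno 12 (ENOMEM, "Cannot allocate memory")."""
    return getattr(exc, "errno", None) == errno.ENOMEM


if __name__ == "__main__" and os.path.exists("/proc/meminfo"):
    with open("/proc/meminfo") as f:
        info = parse_meminfo(f.read())
    # Older kernels may lack MemAvailable, so only report when both fields exist.
    if "MemAvailable" in info and "MemTotal" in info:
        avail, total = available_gib(info)
        print("MemAvailable: %.1f GiB of %.1f GiB" % (avail, total))
```

Sampling this in a loop during startup gives the low-water mark directly, rather than inferring it from a dashboard.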

Something really strange is going on. I cut our celery workers in half and we're still not able to start celery, because we get a MemoryError during the startup process. We haven't done a deployment here in a while. What could have changed?

Looks like the OOM error might have been old. Here's what I have now:

```
$ sudo -u www-data ../venv/bin/python ores_celery.py
/srv/ores/venv/lib/python3.5/site-packages/smart_open/smart_open_lib.py:398: UserWarning: This function is deprecated, use smart_open.open instead. See the migration notes for details: https://github.com/RaRe-Technologies/smart_open/blob/master/README.rst#migrating-to-the-new-open-function
  'See the migration notes for details: %s' % _MIGRATION_NOTES_URL
Hspell: can't open /usr/share/hspell/hebrew.wgz.sizes.
Hspell: can't open /usr/share/hspell/hebrew.wgz.sizes.
Traceback (most recent call last):
  File "ores_celery.py", line 6, in <module>
    application = celery.build()
  File "/srv/ores/config/ores/applications/celery.py", line 41, in build
    config, config['ores']['scoring_system'])
  File "/srv/ores/config/ores/scoring_systems/celery_queue.py", line 232, in from_config
    config, name, section_key=section_key)
  File "/srv/ores/config/ores/scoring_systems/scoring_system.py", line 308, in _kwargs_from_config
    config, section['metrics_collector'])
  File "/srv/ores/config/ores/metrics_collectors/metrics_collector.py", line 62, in from_config
    return Class.from_config(config, name)
  File "/srv/ores/config/ores/metrics_collectors/statsd.py", line 151, in from_config
    return cls.from_parameters(**kwargs)
  File "/srv/ores/config/ores/metrics_collectors/statsd.py", line 131, in from_parameters
    statsd_client = statsd.StatsClient(*args, **kwargs)
  File "/srv/ores/venv/lib/python3.5/site-packages/statsd/client.py", line 146, in __init__
    host, port, fam, socket.SOCK_DGRAM)[0]
  File "/usr/lib/python3.5/socket.py", line 733, in getaddrinfo
    for res in _socket.getaddrinfo(host, port, family, type, proto, flags):
socket.gaierror: [Errno -2] Name or service not known
```

Looks like it is failing because statsd isn't there to connect to anymore.

Turns out it was the statsd host: it changed from labsmon1001 to cloudmetrics1001. Now that I've done a new deployment with an updated config, we're back online.
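The traceback bottoms out in `socket.getaddrinfo`, which the statsd client calls from its constructor, so a metrics host name that no longer resolves stops celery before any worker starts. A minimal preflight sketch, assuming you want to test the configured statsd host on its own before restarting; `statsd_host_resolves` is a hypothetical helper, and port 8125 is assumed as the usual statsd default.

```python
import socket


def statsd_host_resolves(host, port=8125):
    """Return (True, sockaddr) if `host` resolves for UDP over IPv4, else (False, reason).

    Performs the same kind of lookup that failed in the traceback: a
    getaddrinfo() call with SOCK_DGRAM, done before any client is built.
    """
    try:
        infos = socket.getaddrinfo(host, port, socket.AF_INET, socket.SOCK_DGRAM)
    except socket.gaierror as exc:
        # e.g. "[Errno -2] Name or service not known" for a retired host.
        return False, str(exc)
    # Each entry is (family, type, proto, canonname, sockaddr); report the first sockaddr.
    return True, infos[0][4]
```

Running it against the host name in the deployed config would surface a retired host as `(False, ...)` with the resolver's error text, instead of a celery startup crash.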

Reopening; this is alerting again.

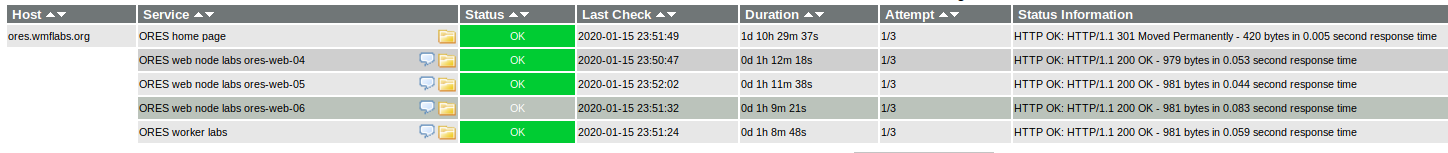

| Host | Service | Status | Last Check | Duration | Attempt | Status Information |
|---|---|---|---|---|---|---|
| ores.wmflabs.org | ORES web node labs ores-web-05 | CRITICAL | 2020-11-04 10:47:33 | 8d 16h 47m 31s | 3/3 | HTTP CRITICAL: HTTP/1.1 500 Internal Server Error - 283 bytes in 0.014 second response time |
| ores.wmflabs.org | ORES web node labs ores-web-06 | CRITICAL | 2020-11-04 10:46:53 | 8d 16h 39m 36s | 3/3 | HTTP CRITICAL: HTTP/1.1 500 Internal Server Error - 283 bytes in 0.019 second response time |
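Both alerts are plain HTTP checks that go CRITICAL when a web node answers 500. For poking at a node by hand, a rough Python equivalent of that classification might look like this. It is a sketch, not the check Icinga actually runs: `check_http` here is a hypothetical helper, and mapping 4xx to WARNING is an assumption. The 0 to 3 return values are the standard Nagios/Icinga plugin states.

```python
import urllib.error
import urllib.request

# Standard Nagios/Icinga plugin states.
OK, WARNING, CRITICAL, UNKNOWN = 0, 1, 2, 3


def check_http(url, timeout=10):
    """Return (state, message) for a GET of `url`, roughly in Icinga's style."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return OK, "HTTP OK: %d %s" % (resp.status, resp.reason)
    except urllib.error.HTTPError as exc:
        # urlopen raises HTTPError for 4xx/5xx; a 500 from a web node lands here.
        if exc.code >= 500:
            return CRITICAL, "HTTP CRITICAL: %d %s" % (exc.code, exc.reason)
        return WARNING, "HTTP WARNING: %d %s" % (exc.code, exc.reason)
    except OSError as exc:
        # URLError (DNS failure, refused connection) and timeouts are OSError subclasses.
        return CRITICAL, "HTTP CRITICAL: %s" % exc
```

The `HTTPError` clause must come before the `OSError` one, because `HTTPError` is itself a subclass of `OSError`.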

I've acked the alerts, which arguably shouldn't be in production Icinga since they are about a non-production service (beside the point, though).

The whole ores.wmflabs.org setup should be downscaled and cleaned up. In its current state, it's completely broken and has lots of maintenance overhead for next to no gain. @calbon Can I downsize it and remove the alerts and such?

Change 641222 had a related patch set uploaded (by Ladsgroup; owner: Ladsgroup):

[operations/puppet@production] icinga: Drop ores.wmflabs.org monitoring

Change 641222 merged by Alexandros Kosiaris:

[operations/puppet@production] icinga: Drop ores.wmflabs.org monitoring

Change 641238 had a related patch set uploaded (by Dzahn; owner: Dzahn):

[operations/puppet@production] nagios_common: delete check_ores_workers command and config

Change 641238 abandoned by Dzahn:

[operations/puppet@production] nagios_common: delete check_ores_workers command and config

Reason:

still used to check prod, per inline comments

Mentioned in SAL (#wikimedia-cloud) [2020-11-16T21:09:50Z] <Amir1> deleted six ores.wmflabs.org VMs for downsizing it (T242819)

https://gerrit.wikimedia.org/r/c/operations/puppet/+/641263

https://gerrit.wikimedia.org/r/c/operations/puppet/+/641269

https://gerrit.wikimedia.org/r/c/operations/puppet/+/641270

https://gerrit.wikimedia.org/r/c/operations/puppet/+/641287

https://gerrit.wikimedia.org/r/c/operations/puppet/+/641288

^ All of these were needed before the checks were finally removed from Icinga properly. The Icinga config is generated from exported resources, and the resources had been removed from puppet without first being set to absent. We then re-added them with "ensure => absent", which didn't do the trick: they need to exist first to be removed properly. Then another round was needed because on one of the 4 hosts (icinga1001, icinga2001, alert1001, alert2001) puppet did not run between one merge and its revert.

So, TL;DR: this really needs to be done with "absent" first, and in the right order.
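The ordering rule can be checked mechanically: the absent change is only safe to drop once every collecting host has run puppet between its merge and the follow-up removal. A sketch, assuming each host's puppet run times are available as datetimes; `hosts_that_missed` and `safe_to_drop` are hypothetical helpers, and they only look at the collecting hosts' run times, nothing inside Puppet itself.

```python
from datetime import datetime
from typing import Dict, List


def hosts_that_missed(window_start: datetime, window_end: datetime,
                      runs_by_host: Dict[str, List[datetime]]) -> List[str]:
    """Hosts with no puppet run in [window_start, window_end).

    window_start: when the "ensure => absent" change was merged.
    window_end:   when the resource was dropped (or the change reverted).
    """
    return sorted(
        host for host, runs in runs_by_host.items()
        if not any(window_start <= t < window_end for t in runs)
    )


def safe_to_drop(window_start: datetime, window_end: datetime,
                 runs_by_host: Dict[str, List[datetime]]) -> bool:
    """True only if every collecting host applied the absent change in the window."""
    return not hosts_that_missed(window_start, window_end, runs_by_host)
```

Fed the run history of icinga1001, icinga2001, alert1001 and alert2001, it would name any host that, like the one described above, never applied the absent change before the revert.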

The checks are finally gone from https://icinga.wikimedia.org/cgi-bin/icinga/status.cgi?host=ores.wmflabs.org, so we can close this again.