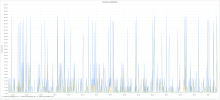

See https://logstash.wikimedia.org/goto/e7950355bc1e9f8687d9792a95672bdc

On March 18th (after an earlier spike around March 11th), the Kubernetes hosts started logging a large number of packets dropped by iptables, sent from mw IPs towards eventgate-analytics.svc.eqiad:4592 and eventgate-main.svc.eqiad:4492.

It's not urgent, but:

1/ It might be a sign of a misconfiguration or a larger issue

2/ It floods the logs with ~30,000 messages/h :)

Please investigate and either stop or reconfigure whatever is sending the dropped packets, or update Ferm to permit them.
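To help narrow down the senders, here is a rough sketch for tallying the dropped packets by source, destination and port. It assumes the log messages carry the usual `SRC=`, `DST=` and `DPT=` fields of the kernel's netfilter LOG output. The `count_drops` helper and the sample lines (with documentation-range IPs) are made up for illustration and are not taken from the actual logs.

```python
import re
from collections import Counter

# Matches the SRC=, DST= and DPT= fields of a netfilter LOG line.
FIELD_RE = re.compile(r"\b(SRC|DST|DPT)=(\S+)")


def count_drops(lines):
    """Count log lines per (source IP, destination IP, destination port)."""
    counts = Counter()
    for line in lines:
        fields = dict(FIELD_RE.findall(line))
        # Skip lines that lack any of the three fields.
        if {"SRC", "DST", "DPT"} <= fields.keys():
            key = (fields["SRC"], fields["DST"], int(fields["DPT"]))
            counts[key] += 1
    return counts


# Hypothetical sample lines; the real log prefix and addresses will differ.
SAMPLE = [
    "IN=eth0 OUT= SRC=192.0.2.10 DST=198.51.100.5 PROTO=TCP SPT=40000 DPT=4592",
    "IN=eth0 OUT= SRC=192.0.2.10 DST=198.51.100.5 PROTO=TCP SPT=40001 DPT=4592",
    "IN=eth0 OUT= SRC=192.0.2.11 DST=198.51.100.6 PROTO=TCP SPT=40002 DPT=4492",
]

if __name__ == "__main__":
    for (src, dst, port), n in count_drops(SAMPLE).most_common():
        print(f"{n:6d}  {src} -> {dst}:{port}")
```

Feeding it an export of the matching log lines should show whether a handful of mw hosts account for most of the drops or whether they are spread evenly.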

Thanks!