This task will track the racking, setup, and OS installation of an-worker11[02-17].

Hostname / Racking / Installation Details

Hostnames: an-worker11[02-17] (Please note: this was originally listed as an-worker1096 - an-worker1111, but part of that range was used on racking task T254892, so the an-worker range was simply incremented to the next available hostnames.)
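The hostname field uses a bracketed numeric range. The following is a minimal sketch of expanding it into the individual hostnames that each get a checklist below. The helper name `expand_hostnames` is hypothetical, and the sketch supports only a single range.

```python
import re


def expand_hostnames(pattern: str) -> list:
    """Expand a pattern like 'an-worker11[02-17]' into individual hostnames.

    Only one bracketed numeric range is supported (a simplifying assumption);
    the zero-padding width of the range start is preserved.
    """
    match = re.fullmatch(r"(.*)\[(\d+)-(\d+)\](.*)", pattern)
    if match is None:
        return [pattern]  # no range: the pattern is already a single hostname
    prefix, start, end, suffix = match.groups()
    width = len(start)
    return [f"{prefix}{n:0{width}d}{suffix}" for n in range(int(start), int(end) + 1)]


hosts = expand_hostnames("an-worker11[02-17]")
print(len(hosts), hosts[0], hosts[-1])  # → 16 an-worker1102 an-worker1117
```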

Racking Proposal: These systems should be spread as evenly as possible across rows. The current row allocation of Hadoop worker nodes is described here.
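One way to spread hosts "as evenly as possible" is a greedy placement. Each new host goes into whichever row currently holds the fewest Hadoop workers.

The sketch below rests on several assumptions:
- `current_counts` is a hypothetical mapping from row name to existing worker count.
- The row names and numbers shown are illustrative only. They are not the real allocation.
- The sketch ignores physical constraints such as rack space, power, and free 10G switch ports.

```python
import heapq
from collections import Counter


def plan_racking(hosts, current_counts):
    """Place each host in the row that currently holds the fewest workers.

    current_counts maps row name -> Hadoop workers already racked in that row.
    Ties are broken by row name so the plan is deterministic.
    """
    heap = [(count, row) for row, count in current_counts.items()]
    heapq.heapify(heap)
    plan = {}
    for host in hosts:
        count, row = heapq.heappop(heap)  # emptiest row right now
        plan[host] = row
        heapq.heappush(heap, (count + 1, row))
    return plan


# Hypothetical row names and counts, for illustration only.
current = {"A": 20, "B": 18, "C": 22, "D": 19}
hosts = [f"an-worker{n}" for n in range(1102, 1118)]
plan = plan_racking(hosts, current)
final = Counter(current) + Counter(plan.values())
print(dict(final))  # → {'A': 24, 'B': 24, 'C': 24, 'D': 23}
```

Because every host lands in the currently emptiest row, the final row counts differ by at most one once enough new hosts exist to level out the existing imbalance.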

Networking/Subnet/VLAN/IP:

- These nodes use 10G NICs and as such require 10G switch ports.

- These belong in the Analytics VLAN.

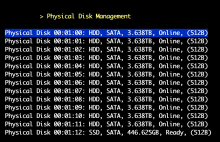

Partitioning/RAID:

Please use partman/analytics-flex.cfg.

Per host setup checklist

Each host should have its own setup checklist copied and pasted into the list below.
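Instead of copying the checklist by hand for each host, it could be generated. The following is a minimal sketch that assumes `- [ ]` checkbox markup. The step text is taken verbatim from the per-host checklists. The constant and function names are hypothetical.

```python
# Step text copied from the per-host checklists; names are hypothetical.
ONSITE_STEPS = [
    "receive in system on procurement task T246784 & in coupa",
    "rack system with proposed racking plan (see above) & update netbox "
    "(include all system info plus location, state of planned)",
    "bios/drac/serial setup/testing",
    "mgmt dns entries added for both asset tag and hostname",
    "network port setup (description, enable, vlan)",
]
REMOTE_STEPS = [
    "production dns entries added",
    "operations/puppet update (install_server at minimum, other files if possible)",
    "OS installation",
    "puppet accept/initial run (with role:spare)",
    "host state in netbox set to staged",
]


def host_checklist(host: str) -> str:
    """Render one host's checklist with unticked '- [ ]' boxes."""
    lines = [f"{host}:", ""]
    lines += [f"- [ ] {step}" for step in ONSITE_STEPS]
    lines.append("- end on-site specific steps")  # divider, not a checkbox
    lines += [f"- [ ] {step}" for step in REMOTE_STEPS]
    return "\n".join(lines)


hosts = [f"an-worker{n}" for n in range(1102, 1118)]
print("\n\n".join(host_checklist(h) for h in hosts))
```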

an-worker1102:

- [ ] receive in system on procurement task T246784 & in coupa
- [ ] rack system with proposed racking plan (see above) & update netbox (include all system info plus location, state of planned)
- [ ] bios/drac/serial setup/testing
- [ ] mgmt dns entries added for both asset tag and hostname
- [ ] network port setup (description, enable, vlan)
- end on-site specific steps
- [ ] production dns entries added
- [ ] operations/puppet update (install_server at minimum, other files if possible)
- [ ] OS installation
- [ ] puppet accept/initial run (with role:spare)
- [ ] host state in netbox set to staged

an-worker1103:

- [ ] receive in system on procurement task T246784 & in coupa
- [ ] rack system with proposed racking plan (see above) & update netbox (include all system info plus location, state of planned)
- [ ] bios/drac/serial setup/testing
- [ ] mgmt dns entries added for both asset tag and hostname
- [ ] network port setup (description, enable, vlan)
- end on-site specific steps
- [ ] production dns entries added
- [ ] operations/puppet update (install_server at minimum, other files if possible)
- [ ] OS installation
- [ ] puppet accept/initial run (with role:spare)
- [ ] host state in netbox set to staged

an-worker1104:

- [ ] receive in system on procurement task T246784 & in coupa
- [ ] rack system with proposed racking plan (see above) & update netbox (include all system info plus location, state of planned)
- [ ] bios/drac/serial setup/testing
- [ ] mgmt dns entries added for both asset tag and hostname
- [ ] network port setup (description, enable, vlan)
- end on-site specific steps
- [ ] production dns entries added
- [ ] operations/puppet update (install_server at minimum, other files if possible)
- [ ] OS installation
- [ ] puppet accept/initial run (with role:spare)
- [ ] host state in netbox set to staged

an-worker1105:

- [ ] receive in system on procurement task T246784 & in coupa
- [ ] rack system with proposed racking plan (see above) & update netbox (include all system info plus location, state of planned)
- [ ] bios/drac/serial setup/testing
- [ ] mgmt dns entries added for both asset tag and hostname
- [ ] network port setup (description, enable, vlan)
- end on-site specific steps
- [ ] production dns entries added
- [ ] operations/puppet update (install_server at minimum, other files if possible)
- [ ] OS installation
- [ ] puppet accept/initial run (with role:spare)
- [ ] host state in netbox set to staged

an-worker1106:

- [ ] receive in system on procurement task T246784 & in coupa
- [ ] rack system with proposed racking plan (see above) & update netbox (include all system info plus location, state of planned)
- [ ] bios/drac/serial setup/testing
- [ ] mgmt dns entries added for both asset tag and hostname
- [ ] network port setup (description, enable, vlan)
- end on-site specific steps
- [ ] production dns entries added
- [ ] operations/puppet update (install_server at minimum, other files if possible)
- [ ] OS installation
- [ ] puppet accept/initial run (with role:spare)
- [ ] host state in netbox set to staged

an-worker1107:

- [ ] receive in system on procurement task T246784 & in coupa
- [ ] rack system with proposed racking plan (see above) & update netbox (include all system info plus location, state of planned)
- [ ] bios/drac/serial setup/testing
- [ ] mgmt dns entries added for both asset tag and hostname
- [ ] network port setup (description, enable, vlan)
- end on-site specific steps
- [ ] production dns entries added
- [ ] operations/puppet update (install_server at minimum, other files if possible)
- [ ] OS installation
- [ ] puppet accept/initial run (with role:spare)
- [ ] host state in netbox set to staged

an-worker1108:

- [ ] receive in system on procurement task T246784 & in coupa
- [ ] rack system with proposed racking plan (see above) & update netbox (include all system info plus location, state of planned)
- [ ] bios/drac/serial setup/testing
- [ ] mgmt dns entries added for both asset tag and hostname
- [ ] network port setup (description, enable, vlan)
- end on-site specific steps
- [ ] production dns entries added
- [ ] operations/puppet update (install_server at minimum, other files if possible)
- [ ] OS installation
- [ ] puppet accept/initial run (with role:spare)
- [ ] host state in netbox set to staged

an-worker1109:

- [ ] receive in system on procurement task T246784 & in coupa
- [ ] rack system with proposed racking plan (see above) & update netbox (include all system info plus location, state of planned)
- [ ] bios/drac/serial setup/testing
- [ ] mgmt dns entries added for both asset tag and hostname
- [ ] network port setup (description, enable, vlan)
- end on-site specific steps
- [ ] production dns entries added
- [ ] operations/puppet update (install_server at minimum, other files if possible)
- [ ] OS installation
- [ ] puppet accept/initial run (with role:spare)
- [ ] host state in netbox set to staged

an-worker1110:

- [ ] receive in system on procurement task T246784 & in coupa
- [ ] rack system with proposed racking plan (see above) & update netbox (include all system info plus location, state of planned)
- [ ] bios/drac/serial setup/testing
- [ ] mgmt dns entries added for both asset tag and hostname
- [ ] network port setup (description, enable, vlan)
- end on-site specific steps
- [ ] production dns entries added
- [ ] operations/puppet update (install_server at minimum, other files if possible)
- [ ] OS installation
- [ ] puppet accept/initial run (with role:spare)
- [ ] host state in netbox set to staged

an-worker1111:

- [ ] receive in system on procurement task T246784 & in coupa
- [ ] rack system with proposed racking plan (see above) & update netbox (include all system info plus location, state of planned)
- [ ] bios/drac/serial setup/testing
- [ ] mgmt dns entries added for both asset tag and hostname
- [ ] network port setup (description, enable, vlan)
- end on-site specific steps
- [ ] production dns entries added
- [ ] operations/puppet update (install_server at minimum, other files if possible)
- [ ] OS installation
- [ ] puppet accept/initial run (with role:spare)
- [ ] host state in netbox set to staged

an-worker1112:

- [ ] receive in system on procurement task T246784 & in coupa
- [ ] rack system with proposed racking plan (see above) & update netbox (include all system info plus location, state of planned)
- [ ] bios/drac/serial setup/testing
- [ ] mgmt dns entries added for both asset tag and hostname
- [ ] network port setup (description, enable, vlan)
- end on-site specific steps
- [ ] production dns entries added
- [ ] operations/puppet update (install_server at minimum, other files if possible)
- [ ] OS installation
- [ ] puppet accept/initial run (with role:spare)
- [ ] host state in netbox set to staged

an-worker1113:

- [ ] receive in system on procurement task T246784 & in coupa
- [ ] rack system with proposed racking plan (see above) & update netbox (include all system info plus location, state of planned)
- [ ] bios/drac/serial setup/testing
- [ ] mgmt dns entries added for both asset tag and hostname
- [ ] network port setup (description, enable, vlan)
- end on-site specific steps
- [ ] production dns entries added
- [ ] operations/puppet update (install_server at minimum, other files if possible)
- [ ] OS installation
- [ ] puppet accept/initial run (with role:spare)
- [ ] host state in netbox set to staged

an-worker1114:

- [ ] receive in system on procurement task T246784 & in coupa
- [ ] rack system with proposed racking plan (see above) & update netbox (include all system info plus location, state of planned)
- [ ] bios/drac/serial setup/testing
- [ ] mgmt dns entries added for both asset tag and hostname
- [ ] network port setup (description, enable, vlan)
- end on-site specific steps
- [ ] production dns entries added
- [ ] operations/puppet update (install_server at minimum, other files if possible)
- [ ] OS installation
- [ ] puppet accept/initial run (with role:spare)
- [ ] host state in netbox set to staged

an-worker1115:

- [ ] receive in system on procurement task T246784 & in coupa
- [ ] rack system with proposed racking plan (see above) & update netbox (include all system info plus location, state of planned)
- [ ] bios/drac/serial setup/testing
- [ ] mgmt dns entries added for both asset tag and hostname
- [ ] network port setup (description, enable, vlan)
- end on-site specific steps
- [ ] production dns entries added
- [ ] operations/puppet update (install_server at minimum, other files if possible)
- [ ] OS installation
- [ ] puppet accept/initial run (with role:spare)
- [ ] host state in netbox set to staged

an-worker1116:

- [ ] receive in system on procurement task T246784 & in coupa
- [ ] rack system with proposed racking plan (see above) & update netbox (include all system info plus location, state of planned)
- [ ] bios/drac/serial setup/testing
- [ ] mgmt dns entries added for both asset tag and hostname
- [ ] network port setup (description, enable, vlan)
- end on-site specific steps
- [ ] production dns entries added
- [ ] operations/puppet update (install_server at minimum, other files if possible)
- [ ] OS installation
- [ ] puppet accept/initial run (with role:spare)
- [ ] host state in netbox set to staged

an-worker1117:

- [ ] receive in system on procurement task T246784 & in coupa
- [ ] rack system with proposed racking plan (see above) & update netbox (include all system info plus location, state of planned)
- [ ] bios/drac/serial setup/testing
- [ ] mgmt dns entries added for both asset tag and hostname
- [ ] network port setup (description, enable, vlan)
- end on-site specific steps
- [ ] production dns entries added
- [ ] operations/puppet update (install_server at minimum, other files if possible)
- [ ] OS installation
- [ ] puppet accept/initial run (with role:spare)
- [ ] host state in netbox set to staged

Once the system(s) above have had all checkbox steps completed, this task can be resolved.
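To check whether the task is ready to resolve, the unticked steps can be listed from the task text. The following is a minimal sketch built on these assumptions:
- Checkboxes use `- [ ]` / `- [x]` markup.
- Host headers have the form `an-worker1102:`.
- The function names are hypothetical.

```python
import re

# Matches "- [ ] step" or "- [x] step"; group 1 is the box state, group 2 the step.
CHECKBOX = re.compile(r"^\s*- \[([ xX])\] (.*)$")
# A line consisting of one token followed by a colon is treated as a header
# (e.g. "an-worker1102:"); section titles like "Partitioning/RAID:" also match,
# which is harmless because no checkboxes follow them.
HEADER = re.compile(r"^(\S+):$")


def open_items(task_text):
    """Return (header, step) pairs whose checkbox is still unticked."""
    current = None
    pending = []
    for line in task_text.splitlines():
        header = HEADER.match(line.strip())
        if header:
            current = header.group(1)
            continue
        box = CHECKBOX.match(line)
        if box and box.group(1) == " ":
            pending.append((current, box.group(2)))
    return pending


def is_resolvable(task_text):
    """True when the text has at least one checkbox and none are left unticked."""
    has_boxes = any(CHECKBOX.match(line) for line in task_text.splitlines())
    return has_boxes and not open_items(task_text)


sample = "an-worker1102:\n- [x] OS installation\n- [ ] host state in netbox set to staged\n"
print(open_items(sample))
```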