In the sync meeting, the Product-Analytics folks mentioned performance problems with Superset dashboards.

Several optimizations were discussed, and setting up Superset's internal cache seems like an easy first step.

See https://superset.apache.org/docs/installation/cache

This needs to wait until Superset is moved to its bare-metal host (dependent task).


| Subject | Repo | Branch | Lines +/- |
|---|---|---|---|
| superset: add cache to staging superset | operations/puppet | production | +28 -0 |
| superset: add cache to an-tool1010 | operations/puppet | production | +21 -2 |

| Status | Assigned | Task |
|---|---|---|
| Resolved | odimitrijevic | T255145 Analytics Hardware for Fiscal Year 2020/2021 |
| Resolved | razzi | T268219 Move Superset and Turnilo to an-tool1010 |
| Resolved | razzi | T268784 Configure superset cache |

Event Timeline

My idea is the following:

- We move Superset to an-tool1010, along with Turnilo.

- We deploy an on-host memcached instance; we already have a lot of Puppet code, monitoring, and metrics to reuse.

- We use FLASK_CACHE, as Joseph highlighted.

an-tool1010 has 64 GB of RAM, so I think there is plenty of room for an on-host memcached instance (room we don't have now on the small VM).
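A minimal `superset_config.py` sketch of this plan, assuming memcached listens on its default port 11211 on localhost and that pylibmc is installed in the Python environment that runs Superset. `CACHE_TYPE`, `CACHE_MEMCACHED_SERVERS`, `CACHE_DEFAULT_TIMEOUT` and `CACHE_KEY_PREFIX` are Flask-Caching settings; the accepted `CACHE_TYPE` string depends on the Flask-Caching version ("memcached" in older releases, "MemcachedCache" in newer ones), and the timeout and prefix are placeholders.

```python
# superset_config.py (sketch): cache Superset data in an on-host memcached.
# Assumptions: memcached on localhost:11211, and pylibmc installed in the
# same virtual environment that runs Superset.

MEMCACHED_SERVERS = ["127.0.0.1:11211"]

CACHE_CONFIG = {
    # Flask-Caching backend name; older releases use "memcached".
    "CACHE_TYPE": "MemcachedCache",
    # List of "host:port" strings handed to the memcached client.
    "CACHE_MEMCACHED_SERVERS": MEMCACHED_SERVERS,
    # Default expiry in seconds (placeholder: 12 hours).
    "CACHE_DEFAULT_TIMEOUT": 12 * 60 * 60,
    # Prefix keys to avoid collisions if the memcached instance is shared.
    "CACHE_KEY_PREFIX": "superset_",
}
```

Keeping memcached on the same host means the server list never has to leave localhost, which matches the "restart/wipe/tune on demand" goal discussed below.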

> We deploy an on-host memcached instance; we already have a lot of Puppet code, monitoring, and metrics to reuse.

Sounds good. Q: Is there a reason we couldn't/shouldn't use the prod memcached clusters?

Good point, yes. I thought about it, but these are the main points against it (in my opinion):

- The production memcached cluster is MediaWiki-specific, and there is no room at the moment for other use cases. I think there is a Redis misc cluster, but I am not sure how, or whether, it will be supported in the long run (some services use it; we could investigate).

- I think (and I could be very wrong) that Superset's caching should need only a few GB of memcached/Redis memory, and there is plenty of that on an-tool1010 for the moment (no reason to waste it, I know, but given Superset's current usage on its VM, 64 GB is a ton :D).

- An on-host memcached/Redis instance could be restarted, wiped, or tuned on demand without risk of impacting anything else, until we find a good, stable config.

In the long run, if Superset's usage grows so much that this setup is no longer adequate, we could consider a small dedicated caching cluster, maybe co-located with Celery, all within the Analytics VLAN so that we have full control of the whole Superset pipeline. Or, if SRE supports Redis, we could ask for some spare resources there and see how it goes!

Lemme know if it makes sense :)

Change 644672 had a related patch set uploaded (by Razzi; owner: Razzi):

[operations/puppet@production] superset: add cached to an-tool1010

Change 644672 merged by Razzi:

[operations/puppet@production] superset: add cache to an-tool1010

Change 647106 had a related patch set uploaded (by Razzi; owner: Razzi):

[operations/puppet@production] superset: add cache to staging superset

Change 647106 merged by Razzi:

[operations/puppet@production] superset: add cache to staging superset

Current progress: I configured staging Superset to use memcached, but pylibmc was installed as an apt package while the Superset process runs in a virtual environment, so pylibmc needs to be installed in the virtual environment instead, via the https://gerrit.wikimedia.org/r/admin/repos/analytics/superset/deploy repository.

When it says it should only be run "on a build server", is that `deneb.codfw.wmnet`?

> When it says it should only be run "on a build server", is that `deneb.codfw.wmnet`?
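A quick diagnostic sketch for the apt-vs-virtualenv mismatch: run it with the virtual environment's interpreter (for example `<venv>/bin/python`, path assumed) to see whether pylibmc is importable there. A virtualenv created without `--system-site-packages` does not see apt-installed Python packages, which is why the system pylibmc is invisible to Superset. The script only diagnoses; the actual install still goes through the deploy repository.

```python
"""Check whether a module (default: pylibmc) is importable from this interpreter."""
import importlib.util
import sys


def in_virtualenv() -> bool:
    # Inside a venv, sys.prefix points at the venv while sys.base_prefix
    # still points at the base interpreter installation.
    return sys.prefix != sys.base_prefix


def module_location(name: str):
    """Return the file the module would be imported from, or None if missing."""
    spec = importlib.util.find_spec(name)
    return spec.origin if spec is not None else None


def report(name: str = "pylibmc") -> dict:
    return {
        "python": sys.executable,
        "in_virtualenv": in_virtualenv(),
        "module": name,
        "location": module_location(name),
    }


if __name__ == "__main__":
    info = report()
    for key, value in info.items():
        print(f"{key}: {value}")
    if info["location"] is None:
        print("pylibmc is NOT importable here; install it into this environment.")
```

If `in_virtualenv` is `True` and `location` is `None`, the apt package is installed but Superset's environment cannot use it.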

Currently, yup!

Quick poll: what should the default caching timeout be? I'm thinking 12 hours, since most charts seem to have daily granularity; with a 12-hour timeout, viewing a chart one day and again the next day will show the latest data point. The timeout is also configurable per table or per chart, but I expect most users won't discover this.

@razzi Did you get this working? I've been testing in my fundraising Superset dev environment, and so far Superset/Flask doesn't seem to enable the cache. See T272636: Investigate Superset caching.
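A sketch of the proposed default and of how per-chart/per-table overrides could interact with it. The precedence below (chart, then dataset, then database, then `CACHE_DEFAULT_TIMEOUT`) is an assumption modelled on how older Superset versions resolve a chart's cache timeout; `resolve_cache_timeout` and `is_expired` are hypothetical helpers, not Superset functions, so check the behaviour of the installed version.

```python
from datetime import datetime, timedelta
from typing import Optional

# Proposed default: 12 hours, in seconds as Flask-Caching expects.
CACHE_DEFAULT_TIMEOUT = 12 * 60 * 60  # 43200


def resolve_cache_timeout(
    chart_timeout: Optional[int],
    dataset_timeout: Optional[int],
    database_timeout: Optional[int],
    default_timeout: int = CACHE_DEFAULT_TIMEOUT,
) -> int:
    """Return the most specific timeout that is set (assumed precedence)."""
    for timeout in (chart_timeout, dataset_timeout, database_timeout):
        if timeout is not None:
            return timeout
    return default_timeout


def is_expired(cached_at: datetime, now: datetime, timeout: int) -> bool:
    """True once a cached entry is at least `timeout` seconds old."""
    return now - cached_at >= timedelta(seconds=timeout)
```

With the 12-hour default, a chart cached at 09:00 has expired by 09:00 the next day, so the next-day view recomputes and picks up the new daily data point, while repeated views within the same working day hit the cache.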

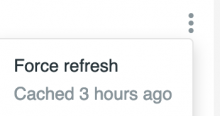

@Jgreen I did get this working, and confirmed it by viewing a chart in the UI, where the overflow menu shows whether the chart is cached:

I also saw the warning messages in the logs that you're getting about CACHE_TYPE being set to null, and I haven't explored why they appear even though caching works.

It also appears that CACHE_CONFIG was renamed to DATA_CACHE_CONFIG in a recent Superset version; see https://github.com/apache/superset/pull/11509.

Update: we had to roll back caching because cached results bypass our data access permissions. Architecturally, this is a real problem: the permissions are checked when the query runs, so if a cached result is served instead of running the query, the permissions are never checked. The solution would be to mirror our database permission structure with Superset roles, but we don't currently plan to do this. The issue for that is https://phabricator.wikimedia.org/T273850, and I'm closing this one.

We're curious whether caching can be turned on after the Superset 3 upgrade. I'm having trouble finding the newest task about this...
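A toy model of the architectural problem, with hypothetical names throughout (this is not Superset's implementation). The cache key depends only on the query, and the grant check lives in the database, so the first authorized user's result is served to everyone. The optional `can_access` hook stands in for the proposed fix: mirroring the database grants as app-level roles checked *before* the cache is consulted.

```python
"""Why a query-keyed cache bypasses permissions enforced at query time."""
from typing import Callable, Dict, Optional, Set

# Tables each user may read, as enforced by the database (hypothetical data).
DB_GRANTS: Dict[str, Set[str]] = {
    "alice": {"private_table"},
    "bob": set(),
}


class PermissionDenied(Exception):
    pass


def run_query(user: str, table: str) -> str:
    # The database checks the grant only when the query actually executes.
    if table not in DB_GRANTS.get(user, set()):
        raise PermissionDenied(f"{user} cannot read {table}")
    return f"rows from {table}"


class QueryCache:
    def __init__(self, can_access: Optional[Callable[[str, str], bool]] = None):
        self._store: Dict[str, str] = {}
        # Optional app-level check (mirrored roles), run before any lookup.
        self._can_access = can_access

    def fetch(self, user: str, table: str) -> str:
        if self._can_access is not None and not self._can_access(user, table):
            raise PermissionDenied(f"{user} cannot read {table}")
        key = table  # the key depends on the query only, not on the user
        if key not in self._store:
            self._store[key] = run_query(user, table)
        return self._store[key]
```

Usage: with `QueryCache()`, calling `fetch("alice", "private_table")` and then `fetch("bob", "private_table")` returns the rows to bob as well, because his request never reaches `run_query`. With `QueryCache(can_access=lambda u, t: t in DB_GRANTS.get(u, set()))`, bob's request raises `PermissionDenied` before the cache is touched.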