This task will start with @SNowick_WMF and possibly accrue subtasks. @JTannerWMF may add content to this task.

The insight we seek to gain through instrumentation will include:

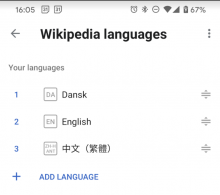

- How does a lack of understanding of English influence someone's ability to complete the task? (Measured by splitting out people who have English as one of the languages set in their app from the Wiki annotations on languages other than English.)

- We need to explicitly track which source the image is coming from (Wikidata, Commons, Other Wikis), so that we understand the influence the source has on accuracy.

- We want to understand the success rate of the tutorial

- We want to know if people like the task. One way to measure this is to check whether users return to complete it on three distinct dates.

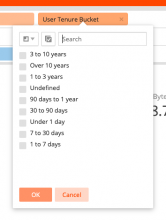

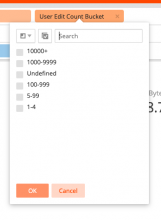

- We want to compare how often users return to the task (retention) by date, across user tenure and language, to understand whether the task is stickier depending on how experienced a user is and whether they speak English.

- Of the people who got the task right, how long did it take them to submit an answer? We want to see this data to categorize whether a match is easy or hard.

- We want to see if someone clicked to see more information on a task; this will help us determine the difficulty of a task.

- We want to know how often someone selects each choice in the pop-up dialog shown in response to No or Not Sure.

- We want to see the user name if they opt-in to showing it

- We want to know if someone scrolled the article
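
The "three distinct dates" check above could be computed roughly as follows. This is only a sketch: the `(user, completed_at)` event shape and the function name `returning_users` are assumptions for illustration, not part of the schema, and timestamps are assumed to already be in one consistent timezone.

```python
from collections import defaultdict
from datetime import datetime


def returning_users(events, min_distinct_dates=3):
    """Return the users who completed the task on at least
    `min_distinct_dates` distinct calendar dates.

    `events` is an iterable of (user, completed_at) pairs; this shape
    is hypothetical and only used for illustration.
    """
    dates_by_user = defaultdict(set)
    for user, completed_at in events:
        # Several completions on the same day count as one date.
        dates_by_user[user].add(completed_at.date())
    return {
        user
        for user, dates in dates_by_user.items()
        if len(dates) >= min_distinct_dates
    }


# Made-up sample data: "A" returns on three dates, "B" only on one.
sample_events = [
    ("A", datetime(2021, 1, 1, 9)),
    ("A", datetime(2021, 1, 1, 18)),
    ("A", datetime(2021, 1, 2, 9)),
    ("A", datetime(2021, 1, 5, 9)),
    ("B", datetime(2021, 1, 1, 9)),
    ("B", datetime(2021, 1, 1, 10)),
    ("B", datetime(2021, 1, 1, 11)),
]
```

With this sample data, only user "A" qualifies, since all of "B"'s completions fall on a single date.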

Draft schema, based on the above requirements:

- lang - Language (or list of languages, if more than one) that the user has configured in the app.

- pageTitle - Title of the article that was suggested.

- imageTitle - File name of the image that was suggested for the article.

- suggestionSource - Source from which this suggestion is being made, e.g. whether the image appears in another language wiki, inside a Wikidata item, etc.

- response - The response that the user gave for this suggestion. This could be a text field, i.e. literally yes, no, unsure, or a numeric value (0, 1, 2), whichever will be simpler for data analysis.

- reason - The justification for the user's response. Since the user may select one or more reasons for their response, this will be a comma-separated list of values that correspond to "reasons" that we will agree on (0 = "Not relevant", 1 = "Low quality", etc.).

- detailsClicked - Whether the user tapped for more information on the image (true/false).

- infoClicked - Whether the user tapped on the "i" icon in the toolbar (true/false).

- scrolled - Whether the user scrolled the contents of the article that are shown underneath the image suggestion (true/false).

- timeUntilClick - Amount of time, in milliseconds, that the user spent before tapping on the Yes/No/Not sure buttons.

- timeUntilSubmit - Amount of time, in milliseconds, that the user spent before submitting the entire response, including specifying the reasons for selecting No or Not sure.

- userName - The wiki username of this user. May be null if the user did not agree to share.

- teacherMode - (true/false) Whether this feature is being used by a superuser / omniscient entity.
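
To make the draft concrete, the fields above could be modelled roughly like this. It is a sketch, not the agreed schema: the class name `ImageRecommendationEvent`, the `to_payload` helper, the choice of a text `response`, and the sample values are all assumptions. Only the field names and the two reason codes (0 and 1) come from the draft.

```python
from dataclasses import dataclass, asdict
from typing import List, Optional


@dataclass
class ImageRecommendationEvent:
    """Hypothetical container mirroring the draft schema fields."""
    lang: List[str]            # language(s) configured in the app
    pageTitle: str
    imageTitle: str
    suggestionSource: str      # allowed values not yet agreed on
    response: str              # assumed text form: "yes", "no", "unsure"
    reason: List[int]          # e.g. 0 = "Not relevant", 1 = "Low quality"
    detailsClicked: bool
    infoClicked: bool
    scrolled: bool
    timeUntilClick: int        # milliseconds
    timeUntilSubmit: int       # milliseconds
    userName: Optional[str]    # None if the user did not agree to share
    teacherMode: bool

    def to_payload(self):
        """Return a dict with `reason` flattened to a comma-separated string."""
        payload = asdict(self)
        payload["reason"] = ",".join(str(code) for code in self.reason)
        return payload


# Made-up sample values, for illustration only.
event = ImageRecommendationEvent(
    lang=["en", "de"],
    pageTitle="Example article",
    imageTitle="Example.jpg",
    suggestionSource="wikidata",
    response="no",
    reason=[0, 1],
    detailsClicked=True,
    infoClicked=False,
    scrolled=True,
    timeUntilClick=4200,
    timeUntilSubmit=9800,
    userName=None,
    teacherMode=False,
)
payload = event.to_payload()
```

Keeping `reason` as a list of integers in code and joining it only at serialization time makes it easier to count individual reasons later, while still matching the comma-separated format described in the draft.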

Known test usernames

- Rho2017

- Cooltey5

- SHaran (WMF)

- Scblr

- Scblrtest10

- Dbrant testing

- HeyDimpz

- Derrellwilliams

- Cloud atlas
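
If these usernames are meant to be excluded from analysis (an assumption; the task does not say so explicitly), a filter could look roughly like this. The function name `exclude_test_users` and the dict-shaped events are hypothetical.

```python
# Usernames copied from the list above.
KNOWN_TEST_USERNAMES = {
    "Rho2017",
    "Cooltey5",
    "SHaran (WMF)",
    "Scblr",
    "Scblrtest10",
    "Dbrant testing",
    "HeyDimpz",
    "Derrellwilliams",
    "Cloud atlas",
}


def exclude_test_users(events):
    """Drop events whose userName is a known test account.

    Events with no userName (user did not opt in) are kept, because
    they cannot be matched against the list.
    """
    return [
        event
        for event in events
        if event.get("userName") not in KNOWN_TEST_USERNAMES
    ]


# Made-up sample events, for illustration only.
sample = [
    {"userName": "Scblr", "response": "yes"},
    {"userName": None, "response": "no"},
    {"userName": "SomeRealUser", "response": "unsure"},
]
filtered = exclude_test_users(sample)
```

Note that test accounts that did not opt in to sharing their username would not be caught by this filter.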