This task is in the context of fully migrating off the old paging system (i.e. SMS sent by icinga via a 3rd party provider) and onto VictorOps / Splunk Oncall.

For Analytics specifically we'll need to at do/coordinate these steps:

- Onboard to VO all Analytics folks that need paging, and have them be part of the Analytics VO team (already present)

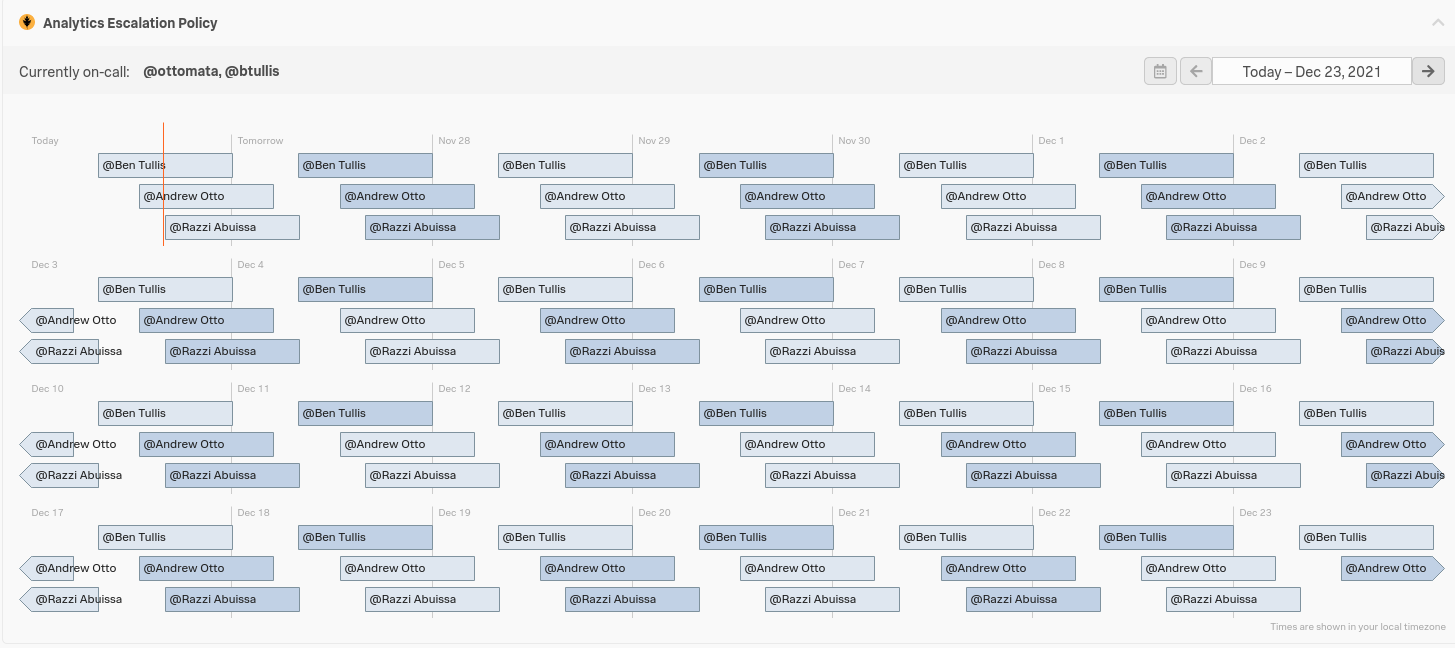

- Create a rotation and escalation for the team, and assign a new routing key (for the icinga integration)

- Make sure team onboarding documentation is updated to reflect VO onboarding and stop changing icinga contacts.cfg file (note that cgi.cfg will still need modification for web interface permissions tho)