The really strange thing: you CAN ping the IP. However, any VM with a public IP can easily curl it. Our test case is:

`curl https://hub.paws.wmcloud.org/hub/metrics`, since that's where I found this.

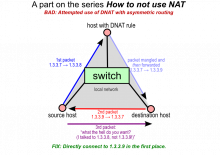

The request times out the way it would behind a firewall, but so far we haven't found one. Better yet, it seems as though a connection is made (SYN/ACK), yet the request still never completes.
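
To narrow down where things stall, something like the following might help. It is only a rough sketch, not something we have run against the affected VMs: the `probe` function and its result keys are made up for illustration, and it simply reports how far an HTTPS request gets (TCP connect, TLS handshake, first bytes of the HTTP response) before a timeout or error.

```python
import socket
import ssl


def probe(host, port=443, path="/hub/metrics", timeout=5.0):
    """Report how far an HTTPS request gets: TCP, then TLS, then HTTP.

    Hypothetical helper for illustration; the names are not from any tool.
    """
    result = {"tcp": False, "tls": False, "http": False, "error": None}

    try:
        # Stage 1: TCP three-way handshake (SYN, SYN/ACK, ACK).
        raw = socket.create_connection((host, port), timeout=timeout)
    except OSError as exc:
        result["error"] = f"tcp: {exc!r}"
        return result
    result["tcp"] = True

    try:
        # Stage 2: TLS handshake over the established connection.
        # The socket keeps the timeout set by create_connection().
        ctx = ssl.create_default_context()
        tls = ctx.wrap_socket(raw, server_hostname=host)
    except OSError as exc:  # ssl.SSLError and timeouts are OSError subclasses
        result["error"] = f"tls: {exc!r}"
        raw.close()
        return result
    result["tls"] = True

    try:
        # Stage 3: send a minimal request and look for an HTTP status line.
        request = (
            f"GET {path} HTTP/1.1\r\n"
            f"Host: {host}\r\n"
            "Connection: close\r\n\r\n"
        )
        tls.sendall(request.encode("ascii"))
        result["http"] = tls.recv(4096).startswith(b"HTTP/")
    except OSError as exc:
        result["error"] = f"http: {exc!r}"
    finally:
        tls.close()
    return result
```

Running `probe("hub.paws.wmcloud.org")` from an affected VM and from one with a public IP, then comparing the two results, should show whether the failure is at connect time or only once data starts flowing. If `tcp` comes back `True` but `tls` is `False` with a timeout in `error`, that would match the "SYN/ACK but nothing after" picture above.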

Seems interesting!