🧪 Results

To evaluate the impact of introducing an "edit" button within Vector 2022's new sticky header, we ran two A/B tests.

These two A/B tests were designed to help us learn how the new edit button within the sticky header affected the likelihood that:

- People would publish the edits they started

- The edits people published would get reverted

What follows are the conclusions we're drawing from these A/B tests and details about the Wikipedias that participated in them.

Conclusions

The results of both A/B tests have led us to conclude:

- People were more likely to complete the edits they started using the sticky header

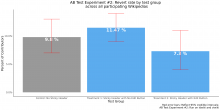

- Across all the edits people initiated in both A/B tests, the percentage of people who successfully completed at least one edit using the edit button within the sticky header increased by 2.8% in A/B test experiment #1 and by 6.8% in experiment #2. This is in comparison to edits people started using other edit buttons present on the page.

- Edits people initiated and published using the sticky header were less likely to be reverted

- Of all the edits people published throughout both A/B tests, the edits people started using the new edit button within the sticky header were less likely to be reverted than edits started using other edit buttons present on the page.

Note: While we are able to confirm that edits published using the sticky header were less likely to be reverted than edits published using other edit buttons present on the page, we are unable to confirm and share a specific percentage decrease in revert rates because of a relatively high margin of error. Learn more in the test report.

Test Details

To arrive at the conclusions above, we ran two A/B tests.

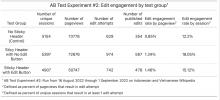

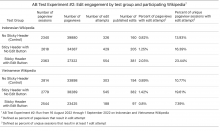

The first test ran between 6 July and 8 August 2022 on 15 Wikipedias. At these projects, 50% of people included in the A/B test were shown the Vector 2022 skin's new sticky header without an edit button within it and 50% of people were shown the Vector 2022 skin's new sticky header with an edit button within it.

The second test ran between 16 August and 1 September 2022 on two Wikipedias: Vietnamese and Indonesian. At these two projects, there were three equally sized test groups:

- A control group that did not see or have access to the Vector 2022 skin's new sticky header

- A treatment group that saw the Vector 2022 skin's new sticky header without an edit button within it

- A treatment group that saw the Vector 2022 skin's new sticky header with an edit button within it

T283505 will introduce a fixed "sticky" site header to the desktop reading experience.

To start, the sticky header will NOT contain editing functionality, per T294383.

This task represents the work of running an A/B test to evaluate the impact that introducing an edit affordance within the desktop reading experience's fixed "sticky" site header has on the following:

- The speed and ease with which contributors, across experience levels, can begin making a change to the content they are wanting to affect

- How likely people are to publish the edits they start making

- People's awareness of their ability to edit the content they are consuming

- The rate at which people make destructive changes to wikis

Decision to be made

This A/B test will help us make the following decision: Should edit affordance(s) within the sticky header be made available to more people?

Note: we have yet to define what "more people" means in this context and defining it depends on knowing all of the people who have access to the sticky header at the time the test is run.

Curiosities

We do not think this series of changes is likely to have a notable impact on a single metric that has a clear directional interpretation. Said another way: we do not think there is a single metric that is likely to A) move in response to these changes *and* B) move in a direction that indicates a clear improvement or degradation in people's user experience. As such, we will consider a collection of metrics and evaluate them holistically to decide whether the impact of this intervention was positive or negative.

| Priority | Impact | Metric |
|---|---|---|
| 1. | People's awareness of their ability to edit | Proportion of people who initiated at least one edit session during the course of the A/B test |
| 2. | Reduction in effort required to start an edit | Average time between when the editing interface is ready and when people make a change to the document |
| 3. | Reduction in overall effort required to publish an edit | i. Average number of edits each person makes throughout the course of the A/B test and ii. Average rate at which people publish the edits they start |
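The three prioritized metrics above could be computed per test group along the following lines. This is only a minimal sketch, not the analysis used for the report: the `EditSession` record, its field names, and `summarize_group` are hypothetical, and it assumes that "per person" averages are taken over everyone in the group (`group_size`, which must be greater than zero) rather than only over people who edited.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class EditSession:
    """One edit attempt. All field names here are hypothetical."""
    user_id: str
    published: bool
    # Seconds between the editing interface being ready and the first
    # change to the document; None if no change was ever made.
    seconds_to_first_change: Optional[float] = None


def summarize_group(sessions: list[EditSession], group_size: int) -> dict[str, float]:
    """Compute the prioritized metrics for one test group.

    Assumption: group_size counts every person bucketed into the group,
    including people who never started an edit.
    """
    editors = {s.user_id for s in sessions}
    published = sum(1 for s in sessions if s.published)
    times = [
        s.seconds_to_first_change
        for s in sessions
        if s.seconds_to_first_change is not None
    ]
    nan = float("nan")
    return {
        # Priority 1: share of people who started at least one edit session.
        "initiation_rate": len(editors) / group_size,
        # Priority 2: average time from "editor ready" to first change.
        "mean_seconds_to_first_change": sum(times) / len(times) if times else nan,
        # Priority 3.i: average number of published edits per person.
        "published_edits_per_person": published / group_size,
        # Priority 3.ii: share of started edits that were published.
        "edit_completion_rate": published / len(sessions) if sessions else nan,
    }
```

Comparing the dictionaries returned for the control and treatment groups gives the side-by-side view needed for the holistic evaluation described above.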

Decision Matrix

| ID | Scenario | Metric(s) | Plan of action |
|---|---|---|---|
| 1. | People are more likely to make destructive edits | Proportion of published edits that are reverted within 48 hours of being made increases by >10% over a sustained period of time | 1. Pause plans for wider deployment of the sticky header with editing functionality enabled, 2. To contextualize change in revert rate, investigate changes in the number of published edits (maybe higher revert rate is a "price" we're willing to "pay" for the increase in good edits), 3. Investigate the type of edits being reverted to understand how the sticky header editing affordance could be contributing to the uptick |
| 2. | People are less likely to publish the edits they initiate | Edit completion rate decreases by >10% over a sustained period of time | 1. Pause plans for wider deployment of the sticky header with editing functionality enabled, 2. Investigate what patterns exist among the people whose edit completion rate has gone down; if there is a pattern among Junior Contributors, prioritize work on T296907 |
| 3. | People do NOT encounter more difficulty publishing edits and there are no regressions in edit revert and edit completion rates | Time to first change does NOT increase by >10% over a sustained period of time, edit revert rate does NOT increase by >10%, edit completion rate does NOT decrease by >10% | Move forward with opt-out deployment |
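The decision matrix can be read as a small set of threshold checks. The sketch below is illustrative only: the function names are hypothetical, it assumes the ">10%" thresholds are relative changes of the treatment group against the control group, and it does not model the "sustained period of time" condition.

```python
from typing import Optional

# Assumption: ">10%" in the matrix is a relative change versus control.
THRESHOLD = 0.10


def relative_change(control: float, treatment: float) -> float:
    """Relative change of the treatment value against the control value."""
    return (treatment - control) / control


def matching_scenario(
    revert_rate_change: float,
    completion_rate_change: float,
    time_to_first_change_change: float,
    threshold: float = THRESHOLD,
) -> Optional[int]:
    """Return the decision matrix scenario ID (1, 2, or 3) that applies.

    Returns None when no row matches, for example when only the time to
    first change regresses, a case the matrix does not cover.
    """
    if revert_rate_change > threshold:
        return 1  # Scenario 1: more destructive edits, pause deployment.
    if completion_rate_change < -threshold:
        return 2  # Scenario 2: fewer edits completed, pause deployment.
    if time_to_first_change_change <= threshold:
        return 3  # Scenario 3: no regressions, proceed with opt-out deployment.
    return None
```

For example, `matching_scenario(relative_change(0.10, 0.12), 0.0, 0.0)` falls under scenario 1, because the 48-hour revert rate rose by roughly 20% relative to control.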

Participating Wikis

See: T298280.

Done

- A report is published that evaluates the hypotheses above