Conclusions

DataHub is our leading candidate.

Pros

- Good UX, with many features we want, such as data quality reporting and social features

- Push-based ingestion architecture; using Kafka as the transport gives us deployment flexibility (e.g. for a public catalog)

- Most robust experience so far when setting up and running ingestion

Cons

- Dependency on Confluent Schema Registry, see T299703#7671668

- Possible tendency to lean towards other Confluent ecosystem pieces

- Either Neo4j or Elasticsearch can serve as the graph backend, but Neo4j Community is limited to a single node, and Elasticsearch may not perform as well as we need for this use case, even with a small graph. There is a good migration guide here.
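To make the Schema Registry dependency more concrete: assuming DataHub's Kafka topics use Confluent's standard Avro serializer framing (worth confirming for the version we deploy), each message carries only a numeric schema ID, not the schema itself. Anything reading those topics therefore needs a registry to resolve the ID. The sketch below only splits off that 5-byte header; the function names are hypothetical and it does no Avro decoding.

```python
from __future__ import annotations

import struct

# Confluent's Kafka serializers prefix each message value with a 5-byte header:
# a magic byte (always 0) followed by a 4-byte big-endian schema ID.
MAGIC_BYTE = 0
HEADER = struct.Struct(">BI")


def make_confluent_frame(schema_id: int, payload: bytes) -> bytes:
    """Prepend the Confluent header to an already-serialized Avro payload."""
    return HEADER.pack(MAGIC_BYTE, schema_id) + payload


def split_confluent_frame(value: bytes) -> tuple[int, bytes]:
    """Return (schema_id, avro_payload) from a Confluent-framed message value.

    The schema ID is opaque on its own: a consumer has to ask the Schema
    Registry for the matching Avro schema before it can decode the payload.
    """
    if len(value) < HEADER.size:
        raise ValueError("message too short for Confluent framing")
    magic, schema_id = HEADER.unpack_from(value)
    if magic != MAGIC_BYTE:
        raise ValueError(f"unexpected magic byte {magic}")
    return schema_id, value[HEADER.size:]
```

This is why swapping the registry out is not a configuration detail: producers and consumers both depend on it to agree on what an ID means.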

Run

- Start all the services mentioned in T299703#7660150

- Tunnel with `ssh -N -L9002:localhost:9002 stat1008.eqiad.wmnet`

- Browse to http://localhost:9002/ (the username and password are both `datahub`)
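A minimal sketch of the tunnel step in Python, for anyone who wants to script it. The helper names are hypothetical; the script only wraps the same `ssh` command as above and polls the forwarded port so we know when the UI should be reachable.

```python
from __future__ import annotations

import socket
import subprocess
import time


def tunnel_command(host: str, local_port: int = 9002, remote_port: int = 9002) -> list[str]:
    """Build the `ssh -N -L...` port-forwarding command from the Run steps."""
    return ["ssh", "-N", f"-L{local_port}:localhost:{remote_port}", host]


def port_is_open(port: int, host: str = "localhost", timeout: float = 1.0) -> bool:
    """Return True if something accepts TCP connections on host:port."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def open_tunnel(host: str, port: int = 9002, wait: float = 10.0) -> subprocess.Popen:
    """Start the tunnel in the background and wait until the local port answers."""
    proc = subprocess.Popen(tunnel_command(host, port, port))
    deadline = time.monotonic() + wait
    while time.monotonic() < deadline:
        if port_is_open(port):
            return proc
        if proc.poll() is not None:
            raise RuntimeError(f"ssh exited with status {proc.returncode}")
        time.sleep(0.5)
    proc.terminate()
    raise TimeoutError(f"nothing listening on localhost:{port} after {wait}s")
```

For example, `proc = open_tunnel("stat1008.eqiad.wmnet")`, then browse to the UI and call `proc.terminate()` when done. Note that `port_is_open` cannot tell the tunnel apart from anything else already listening on 9002.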

Steps to Reproduce Installation

Details are below in the comments from Ben. DataHub runs in Docker by default, but we don't have Docker on the test cluster, so we'll have to set up the individual dependency services ourselves:

- Elasticsearch (we'll use the latest OpenSearch)

- MySQL (we already have this)

- Kafka (we have a test Kafka cluster, so connecting to it may be easier than spinning up a new one)
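Without the Docker Compose stack, DataHub's metadata service (GMS) has to be pointed at each of these services explicitly. The sketch below renders an env file for that. The variable names (`EBEAN_DATASOURCE_*`, `KAFKA_*`, `ELASTICSEARCH_*`, `GRAPH_SERVICE_IMPL`) are modeled on DataHub's Docker env files and should be checked against the release we deploy; it also assumes OpenSearch is accepted through the Elasticsearch settings. `Backends`, `gms_env` and `to_env_file` are hypothetical names, and every hostname and credential is a placeholder. `graph_impl` is where the Neo4j vs Elasticsearch choice from the Cons would be made.

```python
from __future__ import annotations

from dataclasses import dataclass

GRAPH_BACKENDS = ("elasticsearch", "neo4j")


@dataclass
class Backends:
    """Connection details for DataHub's dependency services (values are placeholders)."""
    search_host: str          # OpenSearch, standing in for Elasticsearch
    search_port: int
    mysql_host_port: str      # existing MySQL instance, "host:port"
    mysql_db: str
    mysql_user: str
    mysql_password: str
    kafka_bootstrap: str      # test Kafka cluster, "host:port[,host:port...]"
    schema_registry_url: str  # Confluent Schema Registry (see Cons)
    graph_impl: str = "elasticsearch"


def gms_env(b: Backends) -> dict[str, str]:
    """Map the backends onto GMS environment variables (names assumed, see above)."""
    if b.graph_impl not in GRAPH_BACKENDS:
        raise ValueError(f"graph_impl must be one of {GRAPH_BACKENDS}")
    return {
        "EBEAN_DATASOURCE_HOST": b.mysql_host_port,
        "EBEAN_DATASOURCE_URL": f"jdbc:mysql://{b.mysql_host_port}/{b.mysql_db}",
        "EBEAN_DATASOURCE_USERNAME": b.mysql_user,
        "EBEAN_DATASOURCE_PASSWORD": b.mysql_password,
        "KAFKA_BOOTSTRAP_SERVER": b.kafka_bootstrap,
        "KAFKA_SCHEMAREGISTRY_URL": b.schema_registry_url,
        "ELASTICSEARCH_HOST": b.search_host,
        "ELASTICSEARCH_PORT": str(b.search_port),
        "GRAPH_SERVICE_IMPL": b.graph_impl,
    }


def to_env_file(env: dict[str, str]) -> str:
    """Render KEY=value lines, sorted so the file diffs cleanly."""
    return "".join(f"{key}={value}\n" for key, value in sorted(env.items()))
```

Keeping the mapping in one place means switching the graph backend or the Kafka cluster is a one-field change rather than an edit scattered across service configs.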

Ingestion

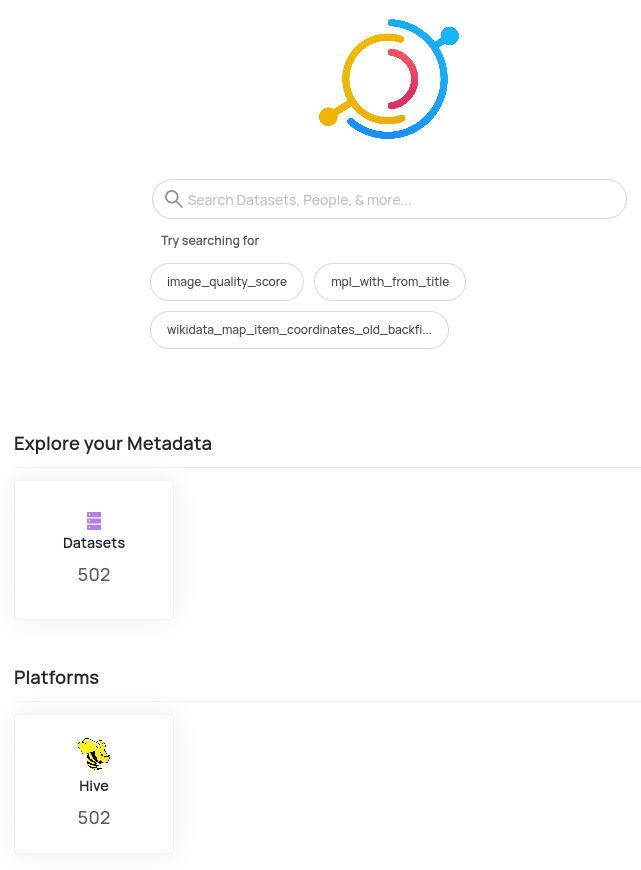

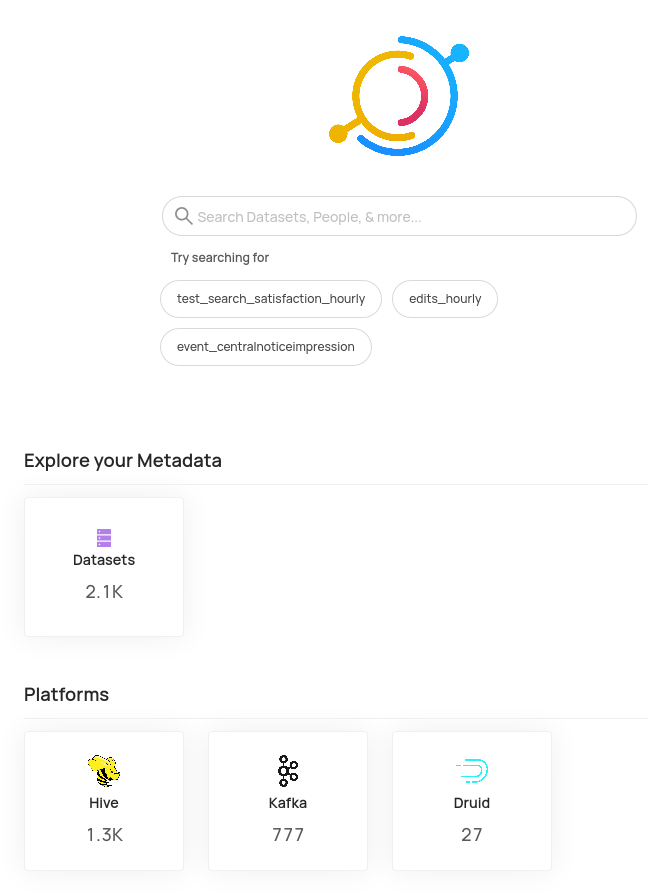

- Ingest Hive Metastore: T299703#7662929

- Ingest Kafka: T299703#7663941

- Ingest both Druid clusters: T299703#7664039

(see also this thread from the DataHub Slack)
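For reference, each ingestion run above is driven by a DataHub recipe: a `source` block naming the connector and its connection settings, plus a `sink` block saying where the metadata goes. The sketch below builds one recipe per source and routes them all through the `datahub-kafka` sink, i.e. the push path mentioned under Pros. It is a reconstruction under assumptions, not the configuration used in the linked comments: the source types (`hive`, `kafka`, `druid`) and config keys are as we understand DataHub's connectors, our understanding is that the `hive` source talks to HiveServer2 rather than to the metastore directly, and all hostnames are hypothetical. Recipes are written as JSON, which YAML parsers also accept.

```python
from __future__ import annotations

import json
from pathlib import Path

# Hypothetical endpoints for the test cluster; substitute the real ones.
KAFKA_CONNECTION = {
    "bootstrap": "kafka-test.example:9092",
    "schema_registry_url": "http://schema-registry.example:8081",
}


def recipe(source_type: str, source_config: dict) -> dict:
    """One ingestion recipe that pushes its metadata to DataHub via Kafka."""
    return {
        "source": {"type": source_type, "config": source_config},
        "sink": {"type": "datahub-kafka", "config": {"connection": dict(KAFKA_CONNECTION)}},
    }


RECIPES = {
    "hive": recipe("hive", {"host_port": "hive.example:10000"}),
    "kafka": recipe("kafka", {"connection": dict(KAFKA_CONNECTION)}),
    # Two Druid clusters -> two recipes that differ only in the broker address.
    "druid-a": recipe("druid", {"host_port": "druid-a.example:8082"}),
    "druid-b": recipe("druid", {"host_port": "druid-b.example:8082"}),
}


def write_recipes(recipes: dict[str, dict], out_dir: Path) -> list[Path]:
    """Write each recipe to <name>.yml; JSON is valid YAML, so no YAML library is needed."""
    out_dir.mkdir(parents=True, exist_ok=True)
    paths = []
    for name, body in recipes.items():
        path = out_dir / f"{name}.yml"
        path.write_text(json.dumps(body, indent=2) + "\n")
        paths.append(path)
    return paths
```

Each written file can then be run with the DataHub CLI, e.g. `datahub ingest -c recipes/hive.yml`.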