Once db1115 has been reimaged and cleaned up, we should get orchestrator to use db1115 instead of db2093 as its backend database, for consistency with eqiad being the writable DC.

@Kormat I will ping you once this can happen.

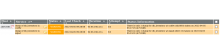

- Move orchestrator DB from db2093 to db1115 (enable replication)

- Fix the `orchestrator_srv` grant on db1115 and ensure it works from dborch1001.

- Disable puppet on dborch1001.

- Stop orchestrator

- Merge https://gerrit.wikimedia.org/r/c/operations/puppet/+/774485

- Take a mysqldump of the orchestrator database on db2093: `sudo mysqldump --single-transaction --ssl-verify-server-cert -h db2093.codfw.wmnet --databases orchestrator > orchestrator.dump.T301315`

- Load the dump into the test section: `sudo db-mysql db1124 < orchestrator.dump.T301315`. Double-check that it loads correctly and that the data looks good.

- Drop the orchestrator database on db2093: `sudo db-mysql db2093 -e 'drop database orchestrator'`

- Load the dump into db1115 with replication enabled: `sudo db-mysql db1115 < orchestrator.dump.T301315`

- Run puppet on dborch1001 and start orchestrator.

- Reimage db2093 to Bullseye

- Start backing up the orchestrator database on db1115.
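The "double-check that it loads correctly" step could be made mechanical before the drop on db2093 happens. Below is a minimal sketch, not existing tooling: `dump_is_complete`, `count_rows` and `check_copy` are hypothetical helper names, and it assumes `sudo db-mysql HOST -e QUERY` passes the query through to the mysql client and prints batch-mode output (tab-separated, header row first) when piped. It relies on mysqldump's default trailing `-- Dump completed` comment to detect a truncated dump, and compares exact per-table row counts between the source and the host the dump was loaded into.

```shell
#!/usr/bin/env bash
# Hypothetical sanity checks for the orchestrator dump/load steps.
# Assumption: `sudo db-mysql HOST -e QUERY` runs QUERY via the mysql client
# and, when piped, prints batch-mode output (tab-separated, header row first).

DB=orchestrator

# Succeeds only if the dump file ends with mysqldump's completion comment,
# i.e. the dump was not cut short. Check this before dropping anything.
dump_is_complete() {
  tail -n 1 "$1" | grep -q '^-- Dump completed'
}

# Print "table<TAB>exact_row_count" for every table in $DB on the given host.
count_rows() {
  local host=$1 table count
  # List the tables, skip the header row, then count rows table by table.
  sudo db-mysql "$host" -e \
    "SELECT table_name FROM information_schema.tables WHERE table_schema = '$DB' ORDER BY table_name" |
    tail -n +2 |
    while read -r table; do
      # COUNT(*) is exact; information_schema.TABLE_ROWS is only an estimate for InnoDB.
      # stdin is /dev/null so this inner call cannot swallow the table list.
      count=$(sudo db-mysql "$host" -e "SELECT COUNT(*) FROM \`$DB\`.\`$table\`" </dev/null | tail -n +2)
      printf '%s\t%s\n' "$table" "$count"
    done
}

# Diff per-table row counts between two hosts; non-zero exit on any mismatch.
check_copy() {
  diff <(count_rows "$1") <(count_rows "$2")
}
```

Usage, with the functions sourced into a shell after loading the dump into db1124 and before the drop step: `dump_is_complete orchestrator.dump.T301315 && check_copy db2093 db1124`. The counts are only expected to match because orchestrator has already been stopped, so nothing writes to db2093 between the dump and the comparison.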