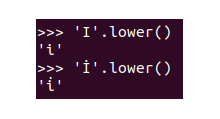

AttributeError: 'NoneType' object has no attribute 'start'

File "/opt/lib/python/site-packages/flask/app.py", line 2073, in wsgi_app

response = self.full_dispatch_request()

File "/opt/lib/python/site-packages/flask/app.py", line 1518, in full_dispatch_request

rv = self.handle_user_exception(e)

File "/opt/lib/python/site-packages/flask/app.py", line 1516, in full_dispatch_request

rv = self.dispatch_request()

File "/opt/lib/python/site-packages/flask/app.py", line 1502, in dispatch_request

return self.ensure_sync(self.view_functions[rule.endpoint])(**req.view_args)

File "/srv/app/app.py", line 252, in query

sections_to_exclude=data["sections_to_exclude"][:25],

File "/srv/app/src/query.py", line 54, in run

sections_to_exclude=sections_to_exclude,

File "/srv/app/src/scripts/utils.py", line 425, in process_page

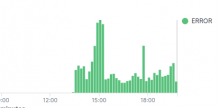

i1_sub = match.start()Spotted when looking at the service logs after deployment (but the error was from earlier). Just a single instance.
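The message suggests that `match` was `None` when `process_page` called `.start()` on it. One plausible cause, assuming `match` comes from `re.search` or `re.match` (the report does not show where it is assigned), is that the pattern did not match the page text: both functions return `None` rather than raising when nothing matches. The sketch below reproduces that failure mode and shows one way to guard against it. `find_sub_start` and the `Section \d+` pattern are hypothetical illustrations, not the code in `utils.py`.

```python
import re
from typing import Optional


def find_sub_start(text: str, pattern: str) -> Optional[int]:
    """Return the start index of the first match of `pattern` in `text`, or None.

    Hypothetical helper: the real logic in process_page is not shown in the report.
    """
    match = re.search(pattern, text)
    if match is None:
        # re.search returns None when nothing matches; calling .start() on it
        # would raise AttributeError: 'NoneType' object has no attribute 'start'
        return None
    return match.start()


# Reproduce the reported failure mode with an input that does not match.
try:
    re.search(r"Section \d+", "no headings here").start()
except AttributeError as exc:
    print(exc)  # 'NoneType' object has no attribute 'start'

print(find_sub_start("Intro. Section 2: Methods", r"Section \d+"))  # 7
print(find_sub_start("no headings here", r"Section \d+"))  # None
```

Whether returning `None`, skipping the page, or logging the offending input is the right response depends on what `process_page` is supposed to do when a page lacks the expected text. Logging the input would also help explain why this happened only once.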