Error

- mwversion: 1.39.0-wmf.25

- reqId: a9f3f7b62eecf029ce4b0588

- Find reqId in Logstash

[{reqId}] {exception_url} MWException: Error contacting the Parsoid/RESTBase server (HTTP 404) from /srv/mediawiki/php-1.39.0-wmf.25/extensions/DiscussionTools/includes/Hooks/HookUtils.php(98)

#0 /srv/mediawiki/php-1.39.0-wmf.25/extensions/DiscussionTools/includes/Hooks/DataUpdatesHooks.php(47): MediaWiki\Extension\DiscussionTools\Hooks\HookUtils::parseRevisionParsoidHtml(MediaWiki\Revision\RevisionStoreRecord)

#1 /srv/mediawiki/php-1.39.0-wmf.25/includes/deferred/MWCallableUpdate.php(38): MediaWiki\Extension\DiscussionTools\Hooks\DataUpdatesHooks->MediaWiki\Extension\DiscussionTools\Hooks\{closure}()

#2 /srv/mediawiki/php-1.39.0-wmf.25/includes/deferred/DeferredUpdates.php(474): MWCallableUpdate->doUpdate()

#3 /srv/mediawiki/php-1.39.0-wmf.25/includes/deferred/RefreshSecondaryDataUpdate.php(103): DeferredUpdates::attemptUpdate(MWCallableUpdate, Wikimedia\Rdbms\LBFactoryMulti)

#4 /srv/mediawiki/php-1.39.0-wmf.25/includes/deferred/DeferredUpdates.php(474): RefreshSecondaryDataUpdate->doUpdate()

#5 /srv/mediawiki/php-1.39.0-wmf.25/includes/Storage/DerivedPageDataUpdater.php(1801): DeferredUpdates::attemptUpdate(RefreshSecondaryDataUpdate, Wikimedia\Rdbms\LBFactoryMulti)

#6 /srv/mediawiki/php-1.39.0-wmf.25/includes/page/WikiPage.php(2136): MediaWiki\Storage\DerivedPageDataUpdater->doSecondaryDataUpdates(array)

#7 /srv/mediawiki/php-1.39.0-wmf.25/includes/jobqueue/jobs/RefreshLinksJob.php(242): WikiPage->doSecondaryDataUpdates(array)

#8 /srv/mediawiki/php-1.39.0-wmf.25/includes/jobqueue/jobs/RefreshLinksJob.php(163): RefreshLinksJob->runForTitle(Title)

#9 /srv/mediawiki/php-1.39.0-wmf.25/extensions/EventBus/includes/JobExecutor.php(79): RefreshLinksJob->run()

#10 /srv/mediawiki/rpc/RunSingleJob.php(77): MediaWiki\Extension\EventBus\JobExecutor->execute(array)

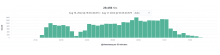

#11 {main}Impact

Notes

More than 200 occurrences in the last 15 minutes, across multiple projects.