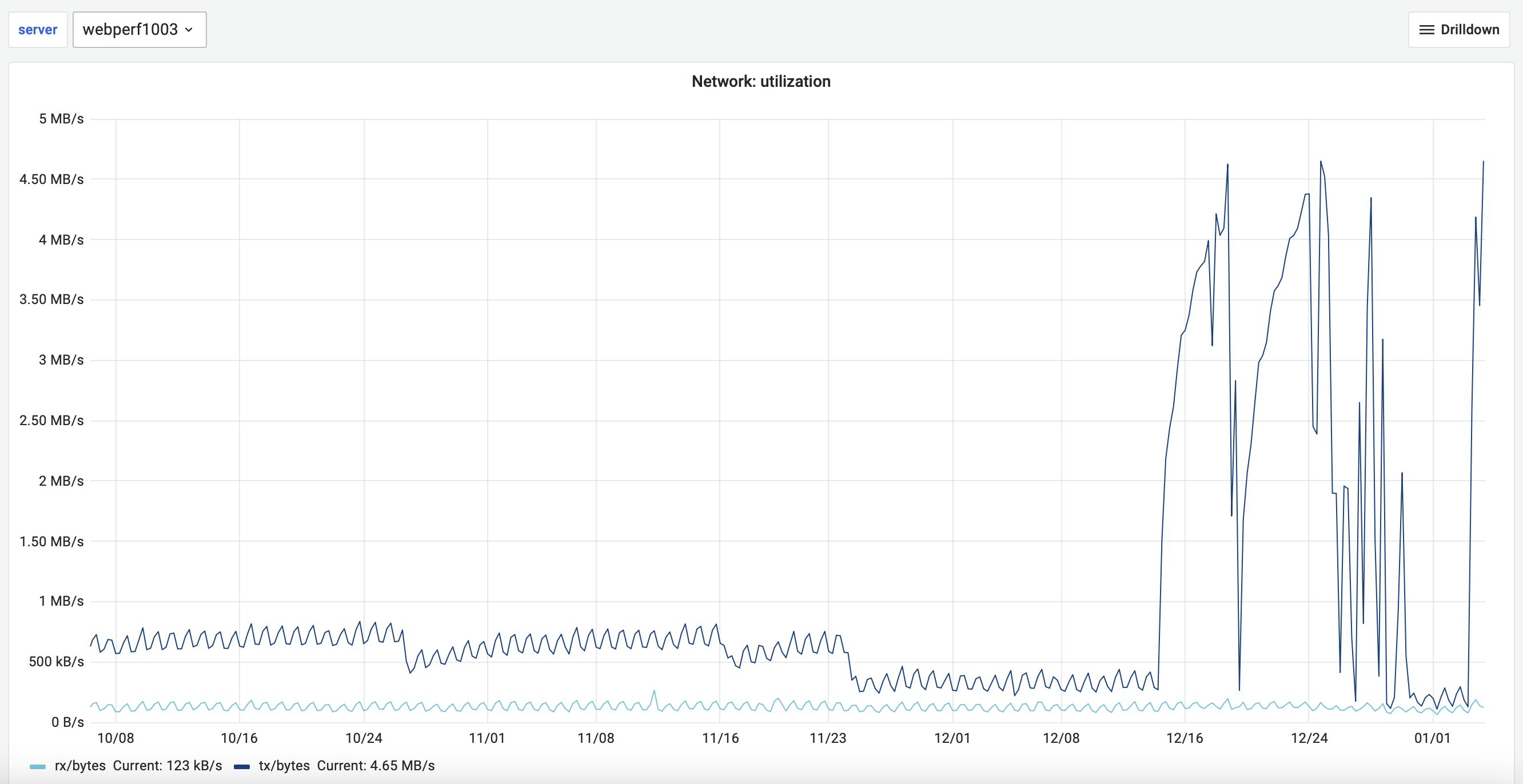

It seems like we stopped reporting metrics to Prometheus. Checking the dashboard I created before the holidays stopped working and in the CPU dashboard I can see the last reported metrics happened the 27.

Description

Details

Related Objects

- Mentioned In

- T286703: Navigation Timing cleanup

Event Timeline

I deployed the changes that was merged before Christmas and the ones @Krinkle fixed and now it works again. Lets keep a close watch and see what happens.

Where been missing metrics back and forth and when I check the time it takes to get the metrics on localhost (curl -o /dev/null -s -w 'Total: %{time_total}s\n' localhost:9230/metric) it takes more than 40 seconds to get them,

Change 875264 had a related patch set uploaded (by Filippo Giunchedi; author: Filippo Giunchedi):

[operations/puppet@production] prometheus: bump scrape timeout for webperf

Change 875264 merged by Filippo Giunchedi:

[operations/puppet@production] prometheus: bump scrape timeout for webperf

Change 875462 had a related patch set uploaded (by Phedenskog; author: Phedenskog):

[performance/navtiming@master] Disable loadEventEnd and ttfb.

Change 875462 merged by jenkins-bot:

[performance/navtiming@master] Disable loadEventEnd and ttfb.

Change 875955 had a related patch set uploaded (by Krinkle; author: Krinkle):

[performance/navtiming@master] Add support for --kafka-fixture to stub out live Kafka

On latest master with this patch checked out, navtiming --kafka-fixture events.json takes:

- ~4 minutes to complete,

- process consumes ~77MB of RAM,

- scraping localhost:9230/metric takes 1.5 seconds and returns 71K metrics lines (comment lines excluded).

If I revert bb2bf6f976 on top of this and run it again, I get:

- ~4 minutes to complete,

- ~106MB of RAM

- scrape takes 4.5 seconds and returns 206K metric lines.

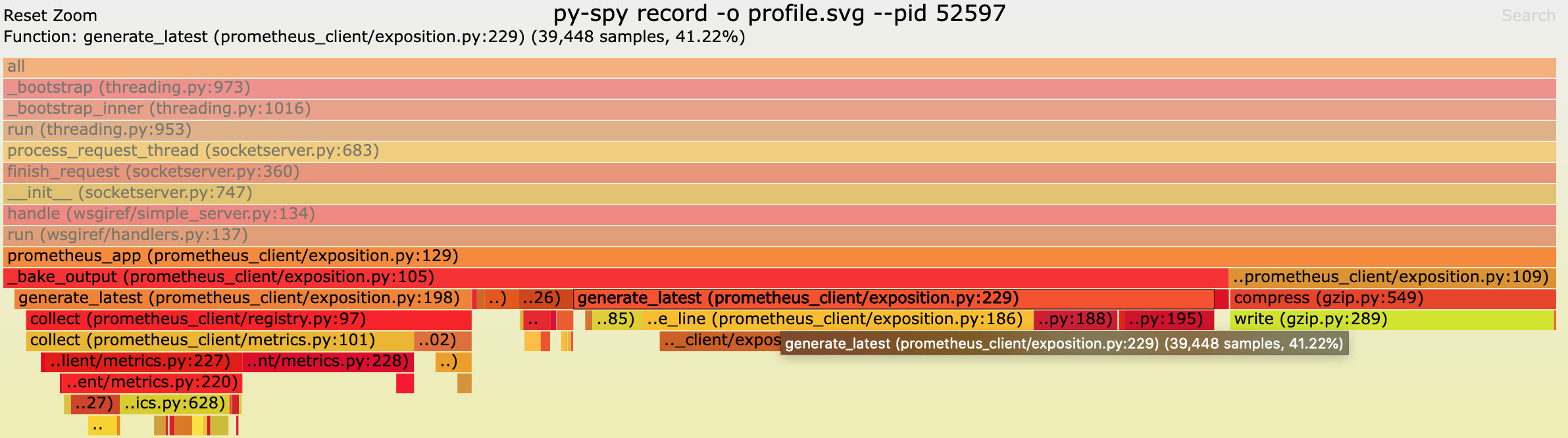

After the navtiming process finished processing the fixture and enters its idle mode, I started https://github.com/benfred/py-spy in one tab, and ran curl in to scrape in an infinite loop in a second tab:

$ .tox/py3/bin/navtiming --kafka-fixture ./events.json […] Reached end of kafka fixture file, going to sleep for 1 hour...

$ sudo py-spy record -o profile.svg --pid 52597$ watch -n0 'curl -o /dev/null -s localhost:9230/metric --compressed'Result after 15 minutes and 95K stack samples at 100/s: https://people.wikimedia.org/~krinkle/T326118-profile.svg

It looks like the method responsible in python-prometheus-client for responding to scrape requests is _bake_output (prometheus/client_python@v0.15.0:/exposition.py), which spends its time as follow:

- 29% generate_latest/collect (~1 call), this yields a representation of each of known registered metrics.

- 41% generate_latest/sample_line (N calls, 1 for each sample in each metric), this creates part of the line protocol format to describe the current metric value and its labels. Basically some_metric_name{foo=a, bar=b, etc} some_val some_timestamp.

- 20% gzip.compress (1 call)

A useful guide for me was https://prometheus.io/docs/instrumenting/writing_clientlibs/ which describes the terminology used in prometheus client codebases.

I found no major inefficiencies in the low-level logic. I could squeeze maybe 2-5% with micro optims but on the whole its code is quite minimal. It remains odd of course that it'd take a dozen seconds to translate its memory data into a few 100K lines of plain text. But, fixing that might require a larger overhaul upstream. This may be worthwhile, but, is involves more non-trivial code review from upstream. Proably worth asking first.

The two things that stand out the most:

- It seems to be "discovering" each metric and its combinations at runtime during each scrape. The way the instrumentation logic on the main thread stores things seems different from what the scrape wants for iteration so it's passing around and restructuring/constructing/allocationg a lot just to be able to yield an object that can be turned into a metric line. I was expecting the main iterator to be cheap and have all its state ready to go for string formatting, but that's clearly not the case given 50% of the non-gzip time is spent here.

- Once it has the list of objects to iterate over, it spends another 20% or so formatting numbers. More details about that at https://github.com/prometheus/client_python/issues/374.

- Gzip taking 30% of the latency, about 4s of the 12s. Not sure if it's a poor implementation or inefficient way of invocing. If not, might be worth looking at scraping without compression if we're okay transferring 100MB every minute instead of 3MB.

Change 876001 had a related patch set uploaded (by Krinkle; author: Krinkle):

[performance/navtiming@master] Apply new buckets to navtiming_responsestart_by_cache_host also

Change 876002 had a related patch set uploaded (by Krinkle; author: Krinkle):

[performance/navtiming@master] Reduce cardinality of 'namespace' label in navtiming metrics

Change 876001 merged by jenkins-bot:

[performance/navtiming@master] Apply new buckets to navtiming_responsestart_by_cache_host also

Change 876002 merged by jenkins-bot:

[performance/navtiming@master] Reduce cardinality of 'namespace' label in navtiming metrics

Change 881407 had a related patch set uploaded (by Krinkle; author: Phedenskog):

[performance/navtiming@master] Reduce ua/browser cardinality for Prometheus.

I counted the number of lines that is returned and at the moment it is 748785. Earlier today I could see that getting the metrics from Prometheus takes 40+ seconds sometimes.

Change 882763 had a related patch set uploaded (by Krinkle; author: Krinkle):

[performance/navtiming@master] Reduce cardinality of 'namespace' label in navtiming metrics

Change 881407 had a related patch set uploaded (by Krinkle; author: Phedenskog):

[performance/navtiming@master] Reduce ua/browser cardinality for Prometheus.

Change 881407 merged by jenkins-bot:

[performance/navtiming@master] Reduce ua/browser cardinality for Prometheus.

Change 882763 merged by jenkins-bot:

[performance/navtiming@master] Consolidate mw_context labels to reduce cardinality further