Attempting to image cp5017 and cp5025 (eqsin) randomly ends with infinite waiting periods when the cookbook issues a reboot. Re-running the cookbook will occasionally yield success. It appears that other DCs are not affected according to @ssingh

The final lines before cookbook infinitely waits are:

Running IPMI command: ipmitool -I lanplus -H cp5025.mgmt.eqsin.wmnet -U root -E chassis power cycle Host rebooted via IPMI

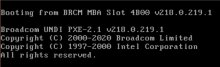

No useful output can be found in the console when the server is stuck.

Is there a connectivity issue preventing a successful reconnection after reboot?