The Spark History Service gives cluster users the ability to examine the retrospective status of their jobs, including many details that facilitate troubleshooting. Without this service, we lose valuable insight into the performance characteristics of both our production and ad-hoc cluster jobs.

Whilst it can be run as a standalone service, the most useful configuration for us is to integrate the Spark History Service with the YARN job browser interface, which we run at https://yarn.wikimedia.org. We already have the Spark UI available for running jobs, but once a job has finished we lose access to this information. Integrating the two makes the YARN job browser a unified interface for both current and historical jobs.

The most useful documentation for the Spark History Service is here: https://spark.apache.org/docs/latest/monitoring.html

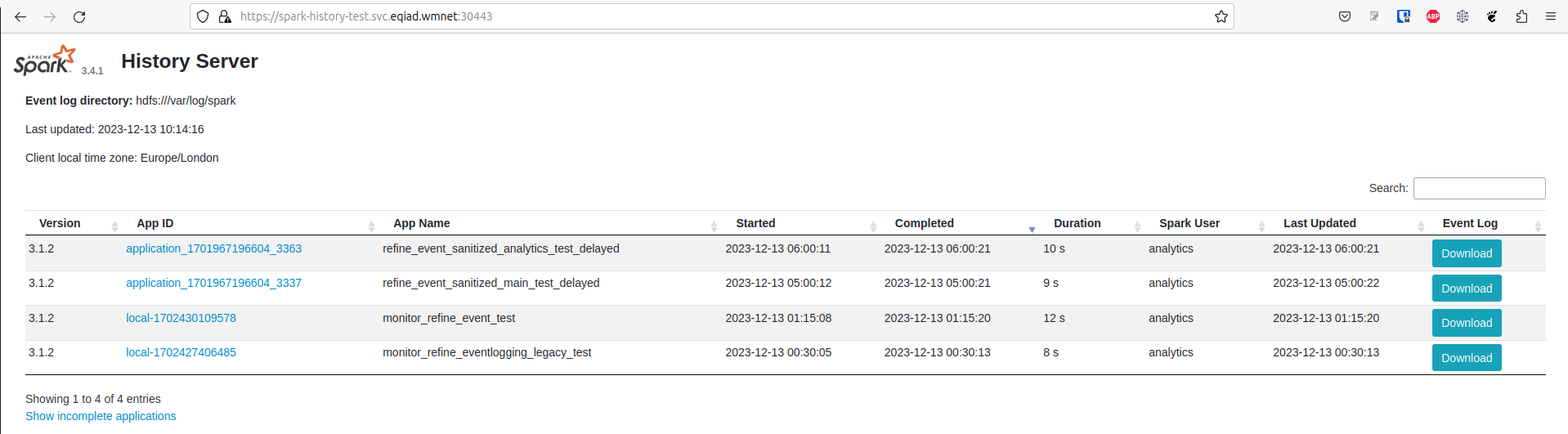

Architecturally, it relies on Spark jobs being configured to write event log files (one per application) to a common directory on HDFS.

The history server then loads these event log files and presents the information via its UI. It can also take care of cleaning up and compacting old event logs, if required.
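As a rough illustration of the server side, the history server is driven by ordinary Spark properties. The sketch below assumes the event logs live under `hdfs:///var/log/spark` and uses a placeholder 30-day retention period; it collects the relevant properties and renders them in the whitespace-separated `key value` format of `spark-defaults.conf`. The property names come from the Spark monitoring documentation, while the values and the helper `render_spark_defaults` are hypothetical.

```python
# Hypothetical sketch: server-side properties for the Spark History Server.
# The HDFS URI and the retention values are assumptions; only the
# /var/log/spark path is taken from this task.

HISTORY_SERVER_CONF = {
    # Directory the history server scans for application event logs.
    "spark.history.fs.logDirectory": "hdfs:///var/log/spark",
    # Port for the web UI (18080 is Spark's default).
    "spark.history.ui.port": "18080",
    # Periodically delete old event logs, i.e. a simple retention policy.
    "spark.history.fs.cleaner.enabled": "true",
    "spark.history.fs.cleaner.interval": "1d",
    # Placeholder value: the real retention period has not been decided yet.
    "spark.history.fs.cleaner.maxAge": "30d",
}


def render_spark_defaults(props: dict) -> str:
    """Render properties spark-defaults.conf style: one 'key value' pair per line."""
    return "".join(f"{key} {value}\n" for key, value in sorted(props.items()))


if __name__ == "__main__":
    print(render_spark_defaults(HISTORY_SERVER_CONF), end="")
```

Running the script prints one property per line, sorted by key; the output could, for example, be supplied to the history server's container as its configuration file.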

We have decided to run the Spark History Server on Kubernetes, on the DSE-K8S cluster.

We already use a set of Spark images for the spark-operator, so we are adapting them to make them suitable for running the history server daemon too.

Several other tasks remain to be completed, following the checklist at: https://wikitech.wikimedia.org/wiki/Kubernetes/Add_a_new_service

- Decide how to handle the test cluster - T351716

On the server side

- Set up the kubeconfig files - T351711

- Add two namespaces (spark-history and spark-history-test) - T351713

- Create a Docker image containing Kerberos-related tooling - T352406

- Create a helm-chart for spark-history - T351722

- Define the two helmfile deployments - T352860

- Grant permission to /var/log/spark for the associated principal - T352838

- Add private data (i.e. the keytab) - T351816

- Configure ingress to the services - T352639

- Deploy the services - T352861

- Ensure that suitable metrics are being gathered - T353694

- Configure availability monitoring - T353717

- Test visibility of spark job data - T352882

- Configure the YARN resourcemanager with the history service URL - T352863

- Document the service - T353232

- Investigate silent Spark History Server errors when downloading some files from HDFS - T354777

- Set up an appropriate retention policy - T354927

- Tweak memory settings - T354929

On the client side

- Configure the Spark defaults with the required options - T352849
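On the client side, the required options are those that make every application write an event log to the directory the history server reads. The sketch below assumes the same `hdfs:///var/log/spark` directory and uses a hypothetical history server address (`spark-history.example.org:18080`); `missing_required` and `render_spark_defaults` are illustrative helpers, not part of Spark.

```python
# Hypothetical sketch: client-side spark-defaults.conf entries so that each
# Spark application writes an event log the history server can read.
# The history server address is a placeholder, not a decided value.

CLIENT_CONF = {
    # Record Spark events for every application.
    "spark.eventLog.enabled": "true",
    # Must match spark.history.fs.logDirectory on the history server.
    "spark.eventLog.dir": "hdfs:///var/log/spark",
    # Optional: compress event logs to save HDFS space.
    "spark.eventLog.compress": "true",
    # Lets YARN link finished applications to the history server UI
    # (host:port without a scheme, per the Spark on YARN docs).
    "spark.yarn.historyServer.address": "spark-history.example.org:18080",
}

# Keys without which no event logs reach the history server at all.
REQUIRED_KEYS = ("spark.eventLog.enabled", "spark.eventLog.dir")


def missing_required(props: dict) -> list:
    """Return the required keys that are absent from the given properties."""
    return [key for key in REQUIRED_KEYS if key not in props]


def render_spark_defaults(props: dict) -> str:
    """Render properties spark-defaults.conf style: one 'key value' pair per line."""
    return "".join(f"{key} {value}\n" for key, value in sorted(props.items()))


if __name__ == "__main__":
    missing = missing_required(CLIENT_CONF)
    if missing:
        raise SystemExit(f"missing required properties: {missing}")
    print(render_spark_defaults(CLIENT_CONF), end="")
```

Keeping `spark.eventLog.dir` and the server's `spark.history.fs.logDirectory` pointed at the same location is what connects the two halves of the architecture.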

Acceptance Criteria

- Spark history is accessible on the test cluster via SSH tunnelling to an-test-master*

- Spark history is accessible on the production cluster via https://yarn.wikimedia.org