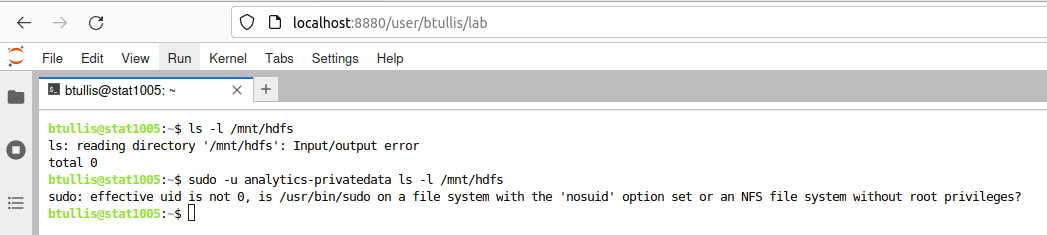

jupyterhub-conda.service on our recently refreshed bullseye hadoop test client an-test-client1002 is currently failing with an error similar to the one seen with systemd-timesyncd.service in T310643.

The error on an-test-client1002 looks like this:

Mar 29 09:33:32 an-test-client1002 systemd[391070]: jupyterhub-conda.service: Failed to set up mount namespacing: /run/systemd/unit-root/: Input/output error
Mar 29 09:33:32 an-test-client1002 systemd[391070]: jupyterhub-conda.service: Failed at step NAMESPACE spawning /opt/conda-analytics/bin/jupyterhub: Input/output error
Mar 29 09:33:32 an-test-client1002 systemd[1]: jupyterhub-conda.service: Main process exited, code=exited, status=226/NAMESPACE
Mar 29 09:33:32 an-test-client1002 systemd[1]: jupyterhub-conda.service: Failed with result 'exit-code'.
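To compare this failure with the one in T310643, it may help to pull the failing step and exit status out of the journal lines. The following is only a rough sketch: the regular expressions and the `summarize` helper are hypothetical, written against the two sample lines quoted above, and are not part of any existing tooling.

```python
import re

# Sample lines copied from the journal output above.
LOG_LINES = [
    "Mar 29 09:33:32 an-test-client1002 systemd[391070]: jupyterhub-conda.service: "
    "Failed at step NAMESPACE spawning /opt/conda-analytics/bin/jupyterhub: Input/output error",
    "Mar 29 09:33:32 an-test-client1002 systemd[1]: jupyterhub-conda.service: "
    "Main process exited, code=exited, status=226/NAMESPACE",
]

# Hypothetical patterns, matched only against the message shapes seen above.
STEP_RE = re.compile(
    r"(?P<unit>\S+\.service): Failed at step (?P<step>\w+) "
    r"spawning (?P<exe>\S+): (?P<reason>.+)$"
)
STATUS_RE = re.compile(
    r"(?P<unit>\S+\.service): Main process exited, "
    r"code=(?P<code>\w+), status=(?P<status>\d+)/(?P<name>\w+)$"
)


def summarize(lines):
    """Collect the unit, failing step, executable, reason and exit status."""
    summary = {}
    for line in lines:
        m = STEP_RE.search(line)
        if m:
            summary.update(
                unit=m["unit"], step=m["step"], exe=m["exe"], reason=m["reason"]
            )
        m = STATUS_RE.search(line)
        if m:
            summary.update(
                unit=m["unit"], status=int(m["status"]), status_name=m["name"]
            )
    return summary


if __name__ == "__main__":
    for key, value in summarize(LOG_LINES).items():
        print(f"{key}: {value}")
```

Exit status 226 is systemd's EXIT_NAMESPACE code, which matches the "Failed to set up mount namespacing" message in the first log line.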