EventLogging error message in IE8

Seen in IE8 both as an anonymous user and as a logged-in user; likely to occur in other pre-IE9 versions as well:

Edit any page in IE8, for instance a user talk page.

- No editing toolbar appears.

- The link to "My Sandbox" is gone.

- User JavaScript is gone.

- The editing toolbar appears only as text at the bottom of the edit screen and does not function in any way.

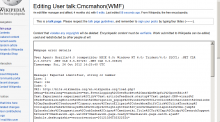

The complete error message is shown in the attachment. I'm running IE8 in a VM and can't copy/paste, but the error is "Expected identifier, string or number" from bits...load.php...EventLogging.

Version: 1.21.x

Severity: major

Attached: