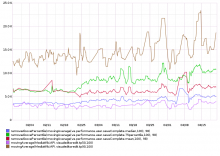

Time-series graph showing save timing from 1/28 to 4/20

Compared with the start of February 2014, median user-perceived save time has risen by approximately one second, while save time at the 75th percentile has risen by ~4.5 seconds, from 6.5 to 11 seconds. Backend profiling data for action=visualeditoredit corroborates this and suggests the problem lies in the backend.
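For reference, the comparison above (median and 75th-percentile shift between two periods) can be sketched in Python as follows. This is a minimal illustration, assuming raw per-save timings in milliseconds are available for each period; the helper names `save_time_summary` and `percentile_shift` are hypothetical, and the dashboard itself may aggregate percentiles differently before the data reaches Graphite.

```python
import statistics


def save_time_summary(samples_ms):
    """Return (median, p75) for a list of save timings in milliseconds.

    statistics.quantiles(n=4) returns the three quartile cut points;
    the middle one is the median and the last one is the 75th percentile.
    Requires at least two samples.
    """
    _q1, median, p75 = statistics.quantiles(samples_ms, n=4)
    return median, p75


def percentile_shift(before_ms, after_ms):
    """Return (median delta, p75 delta) between two periods, in ms.

    Positive values mean saves got slower in the later period.
    """
    before_median, before_p75 = save_time_summary(before_ms)
    after_median, after_p75 = save_time_summary(after_ms)
    return after_median - before_median, after_p75 - before_p75
```

Reporting the 75th percentile alongside the median is what exposes this regression: a ~1 s median shift can hide a much larger ~4.5 s shift in the slower tail of saves.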

The attached graph can be manipulated in Graphite Composer at http://graphite.wikimedia.org/; look for it under User Graphs -> ori.

Version: unspecified

Severity: major

See Also:

https://bugzilla.wikimedia.org/show_bug.cgi?id=66914
