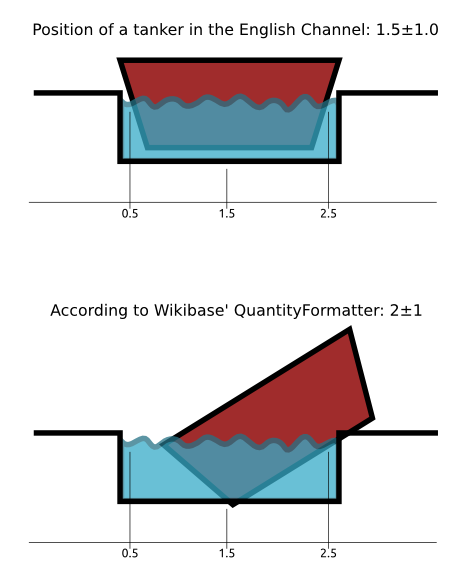

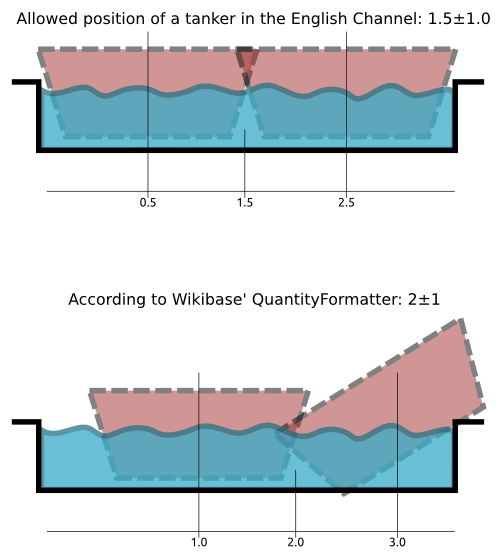

Enter 1.991+-1 in a quantity statement. It is stored via the API as expected (amount: 1.991, upper bound: 2.991, lower bound: 0.991), but it is rendered as 2+-1, i.e. the amount is rounded to the precision of the uncertainty. Anyone who copies or reuses the displayed value therefore loses significant data.
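To make the loss concrete, here is a minimal Python sketch that reproduces the observed output and then reads the displayed string back. The rounding rule it uses (round amount and margin to the decimal position of the margin's leading digit) is an assumption inferred from the 1.991+-1 → 2+-1 behaviour, not the actual Wikibase formatter, and `render_rounded` and `reparse` are hypothetical helpers.

```python
from decimal import Decimal, ROUND_HALF_UP


def render_rounded(amount: Decimal, upper: Decimal, lower: Decimal) -> str:
    """Hypothetical formatter mimicking the reported behaviour (assumption):
    round amount and margin to the decimal position of the margin's leading digit."""
    margin = max(upper - amount, amount - lower)
    step = Decimal(1).scaleb(margin.adjusted())  # margin 1.000 -> step 1
    shown_amount = amount.quantize(step, rounding=ROUND_HALF_UP)
    shown_margin = margin.quantize(step, rounding=ROUND_HALF_UP)
    return f"{shown_amount}+-{shown_margin}"


def reparse(text: str) -> tuple[Decimal, Decimal, Decimal]:
    """Read an 'amount+-margin' string back into (amount, upper, lower)."""
    amount_str, margin_str = text.split("+-")
    amount, margin = Decimal(amount_str), Decimal(margin_str)
    return amount, amount + margin, amount - margin


# Values as stored via the API in this report: (amount, upper, lower).
stored = (Decimal("1.991"), Decimal("2.991"), Decimal("0.991"))
shown = render_rounded(*stored)
print(shown)           # 2+-1
print(reparse(shown))  # (Decimal('2'), Decimal('3'), Decimal('1'))
```

Anyone who copies the displayed 2+-1 ends up with amount 2 and bounds 3 and 1, so all three values shift by 0.009 relative to what is actually stored.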

Found this while reviewing https://github.com/wmde/WikidataBrowserTests/pull/66.