Code Health

Inside a broad Code Health project there is a small Code Health Metrics group. We meet weekly and discuss how code health could be improved by metrics. Each member has only a few hours each week to work on this, so our projects are small.

In our discussions, we have agreed on a few principles. Some of them are:

- Metrics are about improving the process as much as improving the code.

- Focus on new code, not existing code.

- Humans are smarter than tools.

The goal of the project is to provide fast and actionable feedback on code health metrics. Since our time for this project is limited, we've decided to make a spike (T207046). The spike focuses on:

- one repository,

- one language,

- one metric,

- one tool,

- one feedback mechanism.

All of the above tasks are already completed, except for the last one. In parallel to finishing the spike, we are also working on expanding the scope to more repositories, languages and metrics. At the moment, the spike works for several Java repositories.

SonarQube

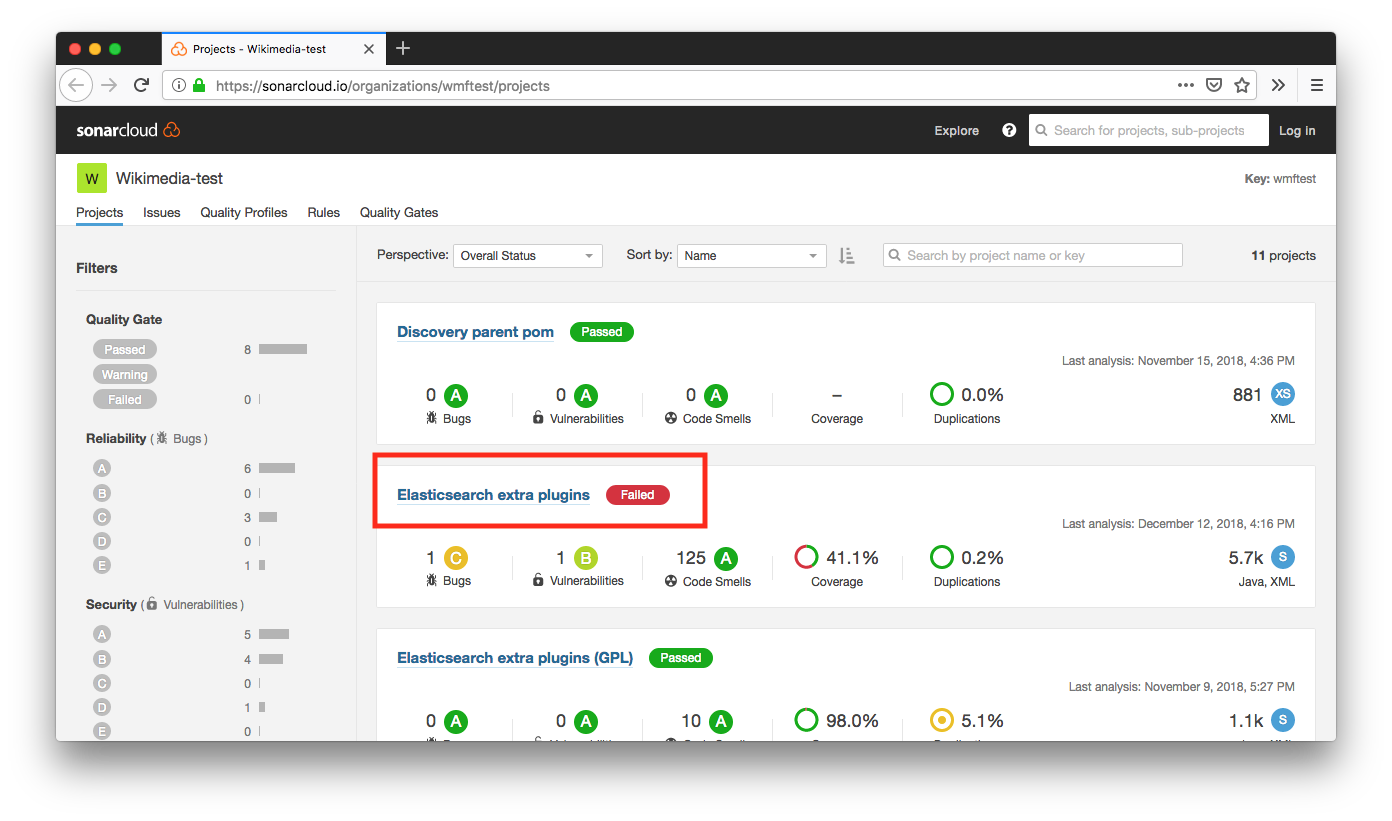

After some investigation, the tool we have selected is SonarQube. The tool does everything we need, and more. In this post I'll only mention one feature. We have decided not to host SonarQube ourselves at the moment. We are using a hosted solution, SonarCloud. You can see our current dashboard at the wmftest organization at SonarCloud.

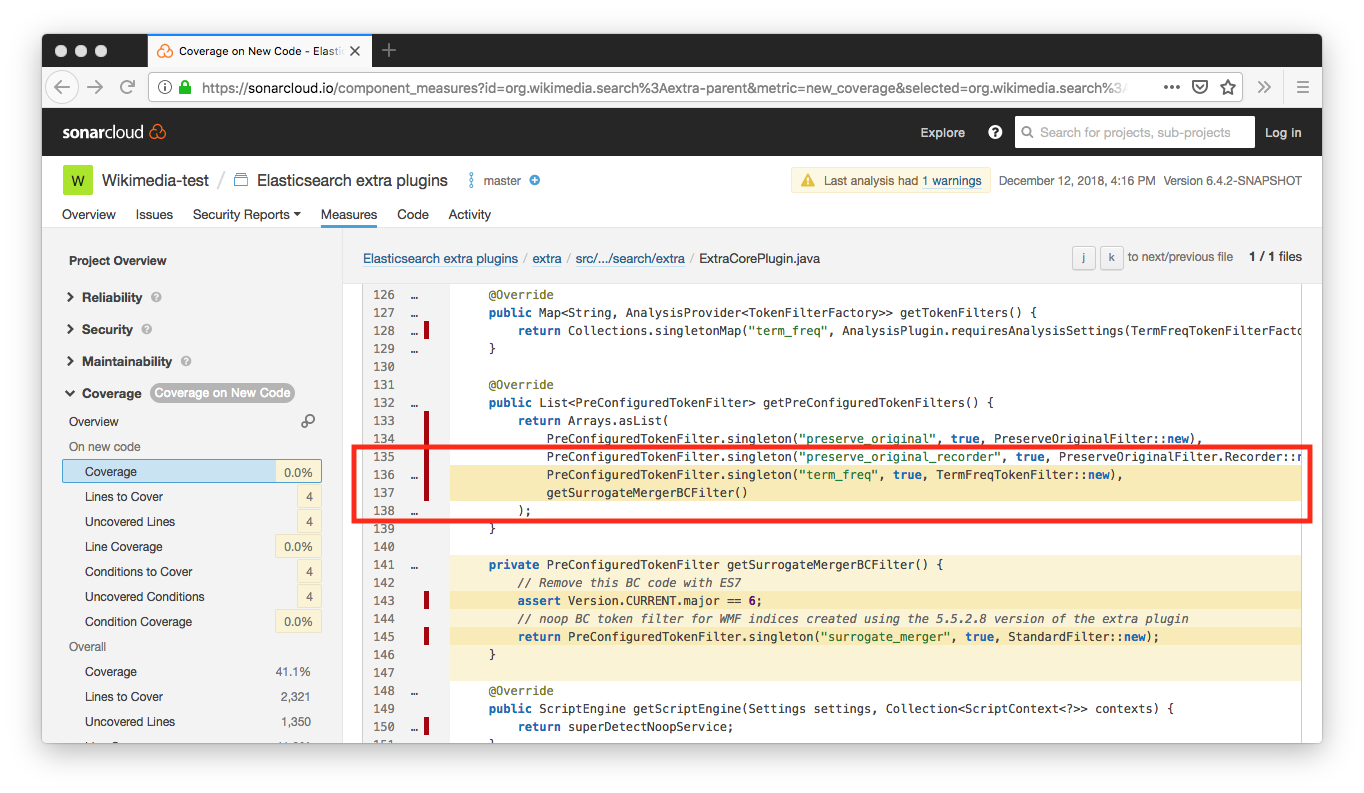

As mentioned in the principles, in order to make the metrics actionable, we've decided to focus only on new code, ignoring existing code for now. That means that when you make a change to a repository with a lot of code, you are not overwhelmed with all the metrics (and problems) the tool has found. Instead, the tool focuses just on the code you have written. So, for example, if a small patch you have submitted to a big repository does not introduce new problems, the tool says so. If the patch introduces new problems (like decreased branch coverage), the tool lets you know.
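The "new code only" idea can be sketched in a few lines. This is a minimal illustration, not how SonarQube computes the metric internally; the function name, the line-number sets, and the sample numbers are all assumptions made up for this example.

```python
def coverage_on_new_code(new_lines, covered_lines):
    """Return the percentage of new lines that are covered by tests.

    new_lines: set of line numbers added or changed by the patch (hypothetical input).
    covered_lines: set of line numbers executed by the test suite (hypothetical input).
    Returns None when the patch adds no lines, since there is nothing to measure.
    """
    if not new_lines:
        return None
    covered_new = new_lines & covered_lines
    return 100.0 * len(covered_new) / len(new_lines)


# A big file with poor overall coverage: 1000 lines, only 100 covered.
all_lines = set(range(1, 1001))
covered = set(range(1, 101))

# A small patch touching lines 50-59, all of which are covered.
patch = set(range(50, 60))

overall = 100.0 * len(covered & all_lines) / len(all_lines)
print(overall)                                # 10.0
print(coverage_on_new_code(patch, covered))   # 100.0
```

In this made-up case the file as a whole looks bad, but the patch itself is fully covered, so feedback limited to new code would report no new problems.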

Members of the Code Health Metrics group have reminded me multiple times that I have to mention SonarLint, an IDE extension. I don't use it myself, since it doesn't support my favorite editor.

Example

A good example is at the wmftest organization at SonarCloud. The Elasticsearch extra plugins project has failed the quality gate.

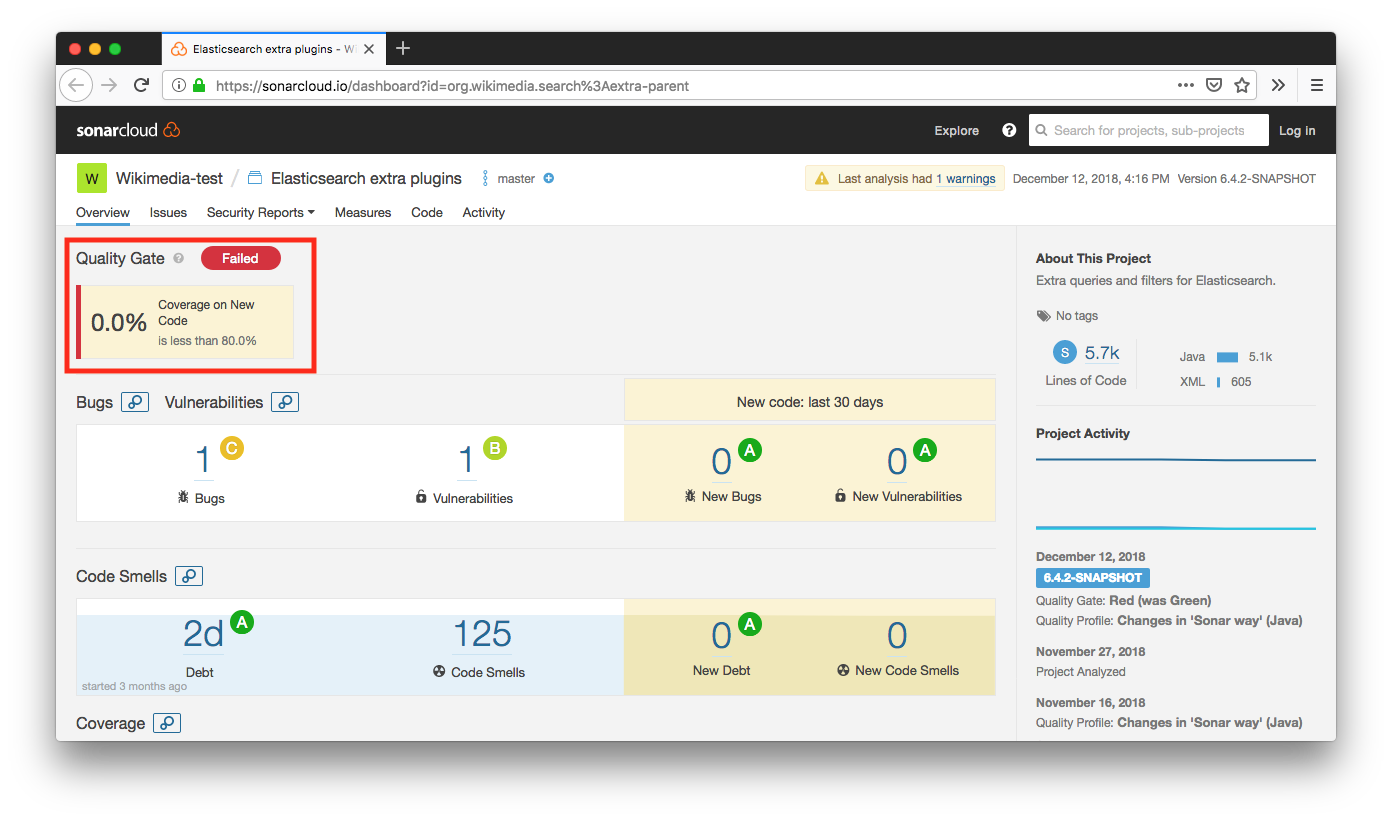

Opening the Elasticsearch extra plugins project, you see that the failure is related to test coverage (less than 80%).

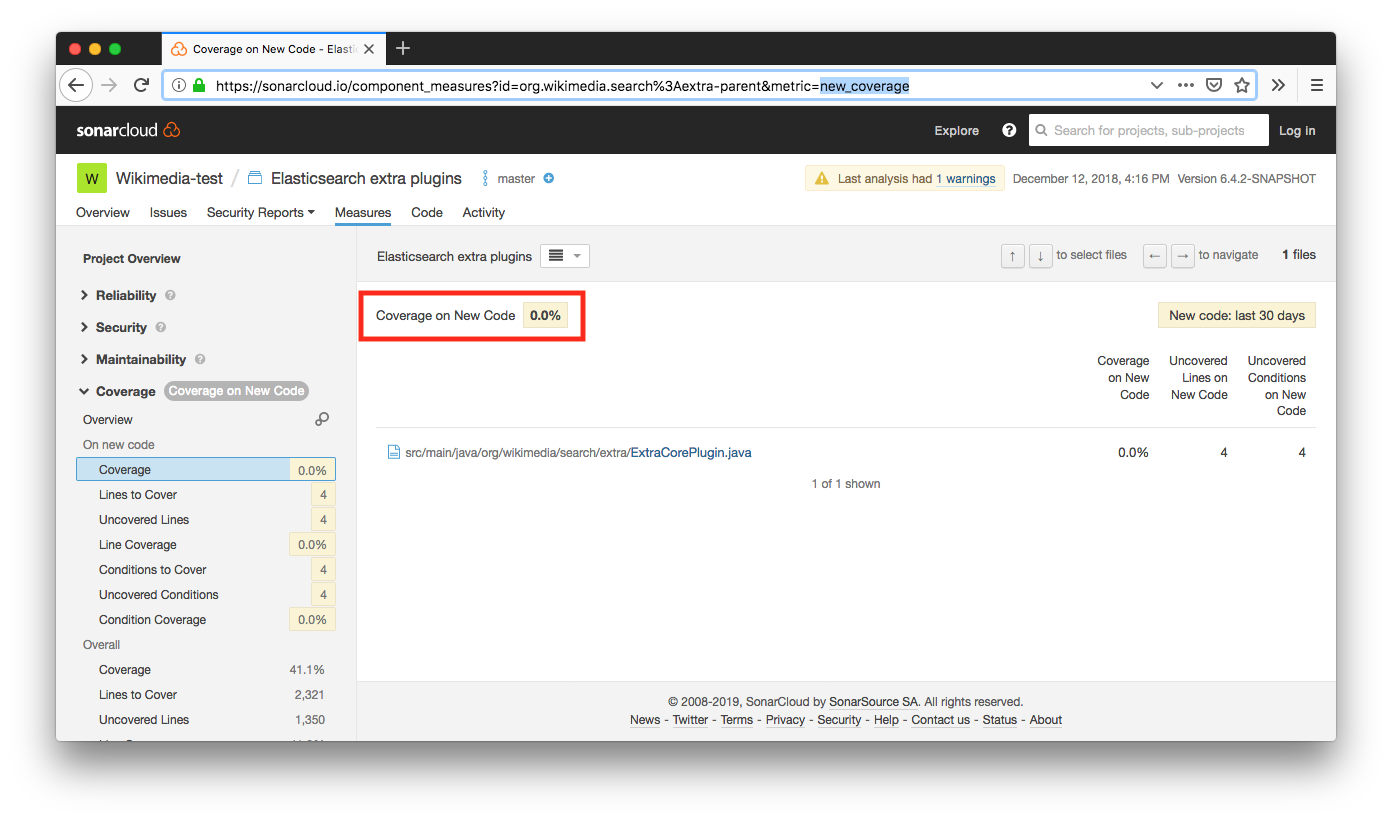

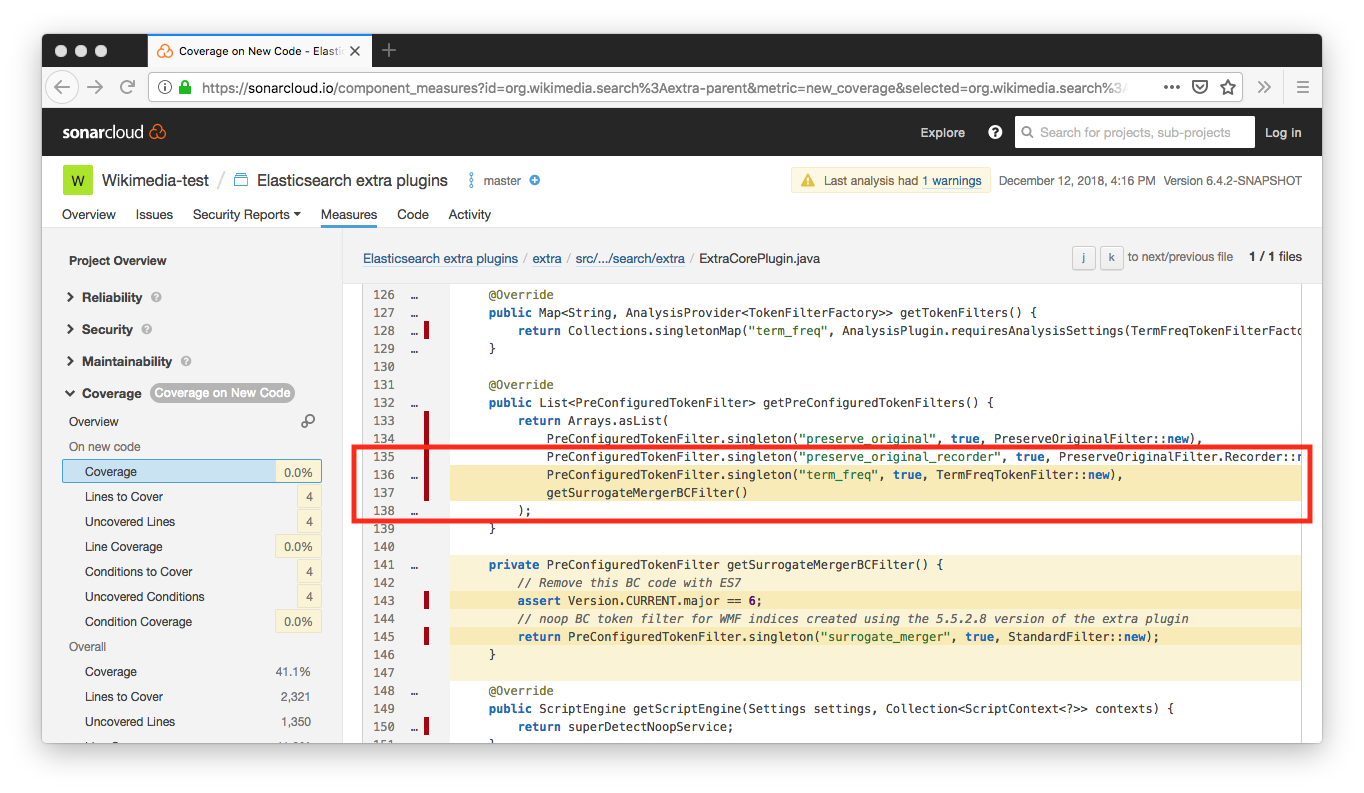

Click the warning and you get more details: Coverage on New Code 0.0%.

Click the ExtraCorePlugin.java file. New lines have a yellow background. Lines with no coverage are marked red, so it's easy to see which new lines (yellow background) have no coverage (red sidebar).
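What the file view and the quality gate show boils down to a set intersection and a threshold check. Here is a rough sketch, assuming made-up line numbers and hypothetical function names; the 80% threshold is the one from the failed quality gate in this example, and none of this is SonarQube's actual implementation.

```python
def uncovered_new_lines(new_lines, uncovered_lines):
    """Lines that are both new (yellow background) and uncovered (red sidebar)."""
    return sorted(new_lines & uncovered_lines)


def passes_quality_gate(new_coverage_percent, threshold=80.0):
    """True when coverage on new code is at or above the threshold."""
    return new_coverage_percent >= threshold


# Hypothetical line numbers for illustration only.
new_lines = {10, 11, 12, 40}
uncovered = {3, 10, 11, 12, 40, 77}

print(uncovered_new_lines(new_lines, uncovered))  # [10, 11, 12, 40]
print(passes_quality_gate(0.0))                   # False
```

Lines 3 and 77 are uncovered but not new, so they are left out of the result, which matches the principle of focusing on new code.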

Talks

We plan to present what we have so far during the Wikimedia Foundation All Hands. To prepare for that, we've created this blog post and presented at the 5 Minute Demo and Testival Meetup.

I would like to thank all members of the Code Health Metrics Working group for their help writing this post, especially Guillaume Lederrey and Kosta Harlan.

FAQ

Q: Sonar-what?!

A: SonarQube is the tool. SonarCloud is the hosted version of the tool. SonarLint is an IDE extension.

Q: When can I use this on my project?

A: Soon. Probably when T207046 is resolved. If there are no blockers, in a few weeks.

Q: Why are we using SonarCloud instead of hosting SonarQube ourselves?

A: We did not want to invest time in hosting it ourselves until we're sure the tool is the right choice for us.
