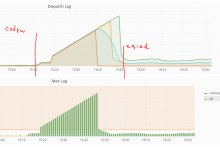

At the time of the eqiad -> codfw datacenter switchover, the average dispatch lag for Wikidata dropped from ~2.5 minutes to ~16 seconds.

This can be seen on the Wikidata dispatch dashboard: https://grafana.wikimedia.org/dashboard/db/wikidata-dispatch?orgId=1&from=1535809586024&to=1538401586025

We should investigate what exactly caused this.

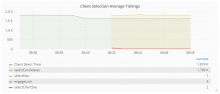

Looking at the metrics of the dispatching script, it appears that the total time spent selecting clients dropped dramatically.

Possible causes:

- different mwmaint hardware
- different DB hardware
- different Redis hardware
- some other DB-related difference
- something else?