Evaluate Rya as an alternative to Virtuoso: it uses a Hadoop-based columnar store that scales easily.

"Apache Rya is a scalable RDF Store that is built on top of a Columnar Index Store (such as Accumulo). It is implemented as an extension to RDF4J to provide easy query mechanisms (SPARQL, SERQL, etc) and Rdf data storage (RDF/XML, NTriples, etc)."

Pro:

- scales by storing data in Accumulo, which is built on a Hadoop cluster

- has a SPARQL endpoint

- Apache top-level project
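
To check the SPARQL endpoint during the evaluation, a smoke test along these lines may be enough. This is a minimal sketch, assuming the endpoint accepts the standard SPARQL 1.1 Protocol "query via GET" form. The URL `http://localhost:8080/sparql` and the helper names `build_sparql_request` and `run_select` are hypothetical; the actual path and parameters of a Rya deployment may differ.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request, urlopen

# Hypothetical endpoint URL (an assumption, not taken from the Rya docs);
# replace it with the address of the deployed SPARQL endpoint.
ENDPOINT = "http://localhost:8080/sparql"

# Generic query that works against any non-empty triple store.
QUERY = "SELECT ?s ?p ?o WHERE { ?s ?p ?o } LIMIT 10"


def build_sparql_request(endpoint: str, query: str) -> Request:
    """Build a SPARQL 1.1 Protocol GET request that asks for JSON results."""
    url = endpoint + "?" + urlencode({"query": query})
    return Request(url, headers={"Accept": "application/sparql-results+json"})


def run_select(endpoint: str, query: str, timeout: float = 30.0) -> list[dict]:
    """Send the query and return the result bindings.

    Requires a reachable endpoint that returns SPARQL JSON results.
    """
    with urlopen(build_sparql_request(endpoint, query), timeout=timeout) as resp:
        return json.load(resp)["results"]["bindings"]
```

Calling `run_select(ENDPOINT, QUERY)` against a running instance should return up to ten bindings. Running the same query against Virtuoso's endpoint gives a first, rough comparison of the two stores.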

Con:

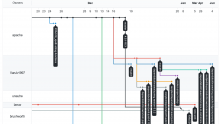

- no commits since December 2020

- few stars and forks on GitHub

See also https://www.wikidata.org/wiki/Wikidata:WikiProject_Limits_of_Wikidata#Apache_Rya