Background

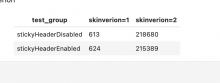

While analyzing the results of the sticky header A/B test (T298873: Analyze results of sticky header A/B test), we found that 809 sessions were assigned to both the control group and the test group. We would like to investigate why this happened so that we can prevent it in future tests.
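A minimal sketch of how this overlap could be counted is shown below. It assumes the logged data can be reduced to `(session_id, group)` pairs. The function name, record shape and group labels (`control`, `test`) are hypothetical and are not the actual event schema or query used in T298873.

```python
from collections import defaultdict
from typing import Dict, Iterable, Set, Tuple


def sessions_in_multiple_groups(events: Iterable[Tuple[str, str]]) -> Set[str]:
    """Return the session IDs that were logged under more than one A/B group.

    Hypothetical input: each event is a (session_id, group) pair.
    """
    # Collect every distinct group each session was seen in.
    groups_by_session: Dict[str, Set[str]] = defaultdict(set)
    for session_id, group in events:
        groups_by_session[session_id].add(group)
    # A correctly bucketed session should appear in exactly one group.
    return {sid for sid, groups in groups_by_session.items() if len(groups) > 1}


if __name__ == "__main__":
    # Toy data for illustration only; not results from the sticky header test.
    sample = [
        ("s1", "control"),
        ("s1", "control"),
        ("s2", "test"),
        ("s2", "control"),
        ("s3", "test"),
    ]
    print(sorted(sessions_in_multiple_groups(sample)))  # ['s2']
```

Repeated events for the same session and group (such as `s1` above) are not counted as duplicates; only sessions seen in two or more distinct groups are flagged.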

Acceptance criteria

- Determine reasons for duplicate A/B test bucketing