This task will track the racking, setup, and OS installation of stat1010.eqiad.wmnet

Hostname / Racking / Installation Details

Hostnames: stat1010.eqiad.wmnet

Racking Proposal: Replacing stat1005 - no restriction on location

Networking/Subnet/VLAN/IP: Single 10G network connection - analytics vlan please

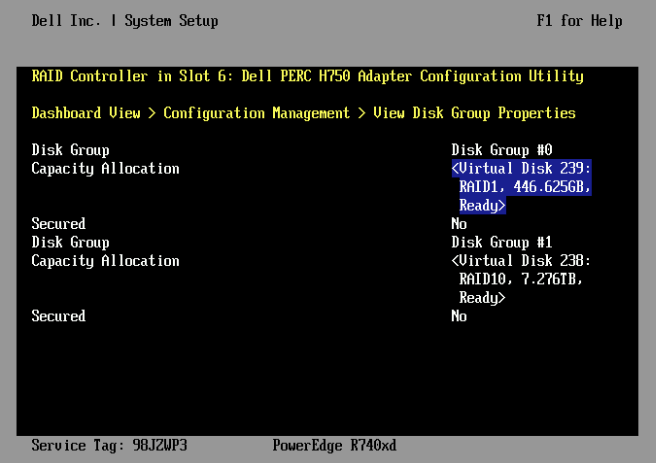

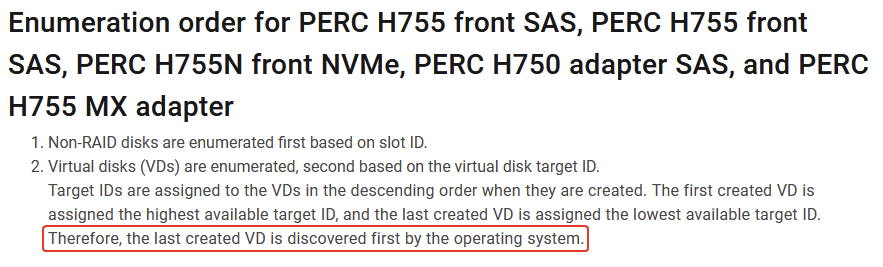

Partitioning/Raid: RAID 1 pair for O/S - RAID 10 on four disks for /srv - hardware RAID

OS Distro: Bullseye

Per host setup checklist

stat1010:

- - receive in system on procurement task T297736 & in coupa

- - rack system with proposed racking plan (see above) & update netbox (include all system info plus location, state of planned)

- - add mgmt dns (asset tag and hostname) and production dns entries in netbox, run cookbook sre.dns.netbox.

- - network port setup via netbox, run homer from an active cumin host to commit

- - bios/drac/serial setup/testing, see Lifecycle Steps & Automatic BIOS setup details

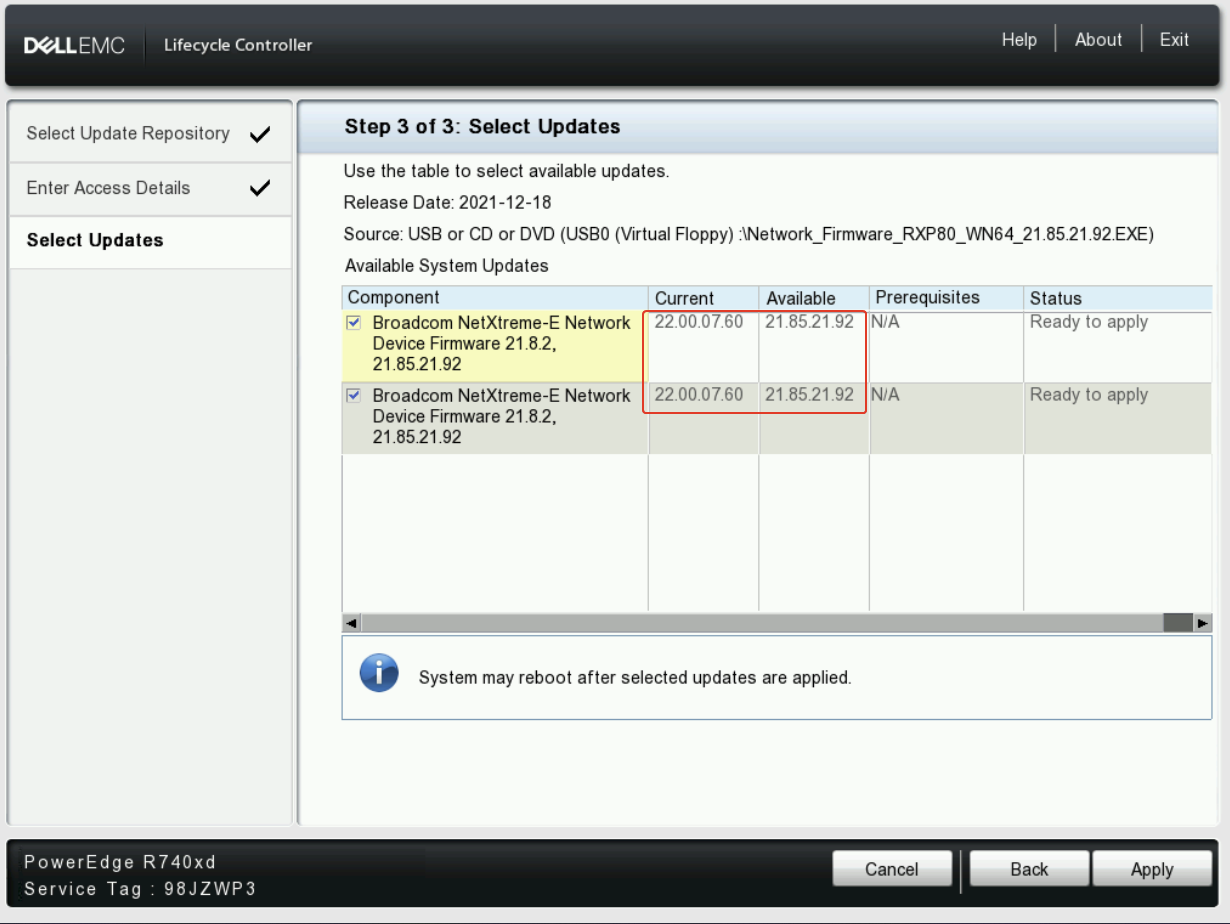

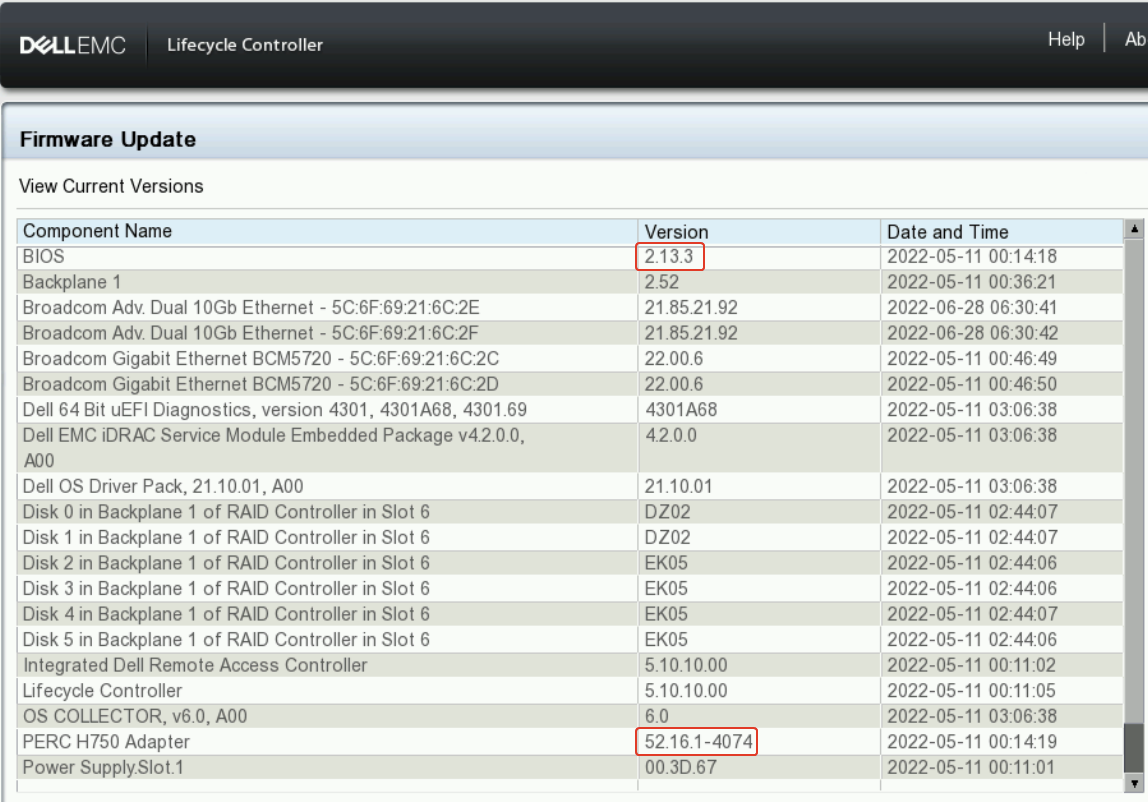

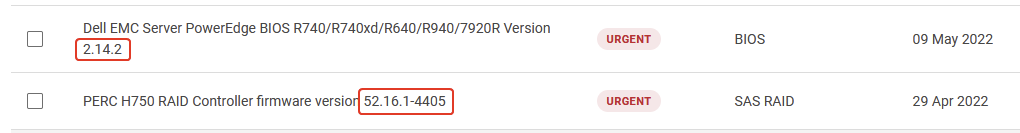

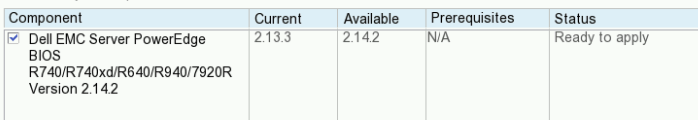

- - firmware update (idrac, bios, network, raid controller) **n.b. NIC firmware downgraded. See: T304483 for details. BIOS and RAID firmware updated. No iDRAC update available.

- - operations/puppet update - this should include updates to netboot.pp, and site.pp role(insetup) or cp systems use role(insetup::nofirm).

- - OS installation & initital puppet run via sre.hosts.reimage cookbook.