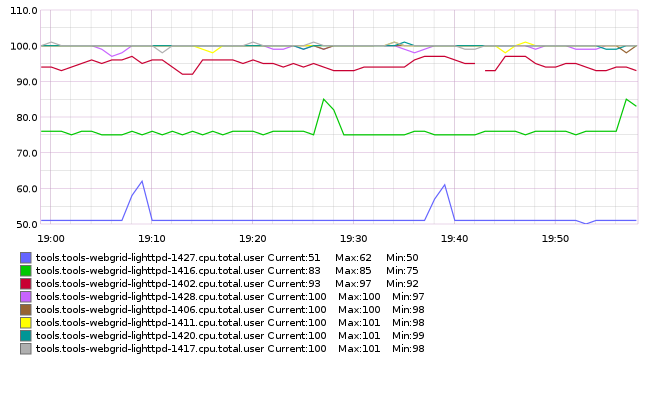

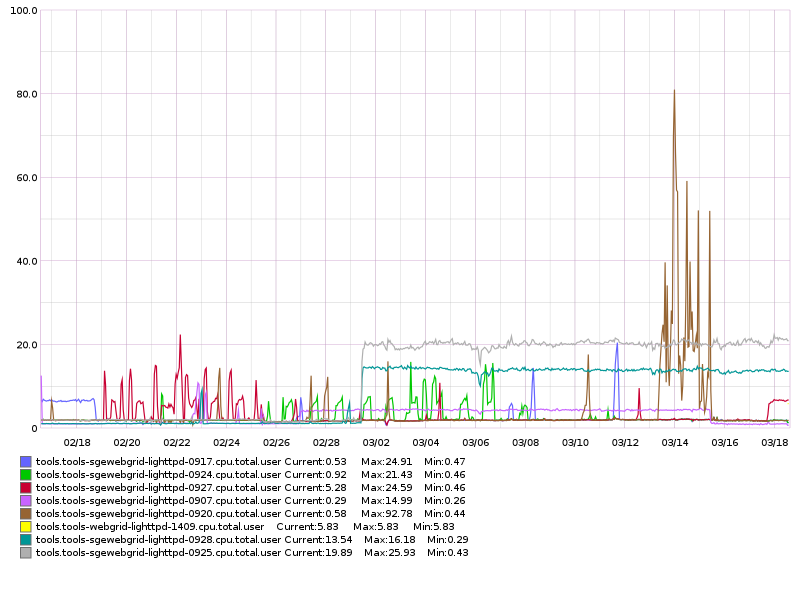

Graphite quick check: top 8 webgrid-lighttpd instances by average CPU over one hour

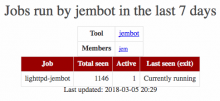

A recent snapshot of activity shows 1146 restarts of the webservice in the last 7 calendar days.

The error.log data seems to indicate that the main lighttpd process is being killed on a regular basis for exceeding its memory limit:

    2018-03-05 21:30:23: (log.c.166) server started
    2018-03-05 21:40:21: (server.c.1558) server stopped by UID = 0 PID = 25145
    2018-03-05 21:40:24: (log.c.166) server started
    2018-03-05 21:50:20: (server.c.1558) server stopped by UID = 0 PID = 30322
    2018-03-05 21:50:23: (log.c.166) server started
    2018-03-05 22:00:21: (server.c.1558) server stopped by UID = 0 PID = 7331
    2018-03-05 22:00:23: (log.c.166) server started
    2018-03-05 22:10:20: (server.c.1558) server stopped by UID = 0 PID = 19091
    2018-03-05 22:10:22: (log.c.166) server started
    2018-03-05 22:20:20: (server.c.1558) server stopped by UID = 0 PID = 7240
    2018-03-05 22:20:22: (log.c.166) server started
    2018-03-05 22:30:21: (server.c.1558) server stopped by UID = 0 PID = 10593
    2018-03-05 22:30:23: (log.c.166) server started
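To check how regular the restarts are, the error.log excerpt above can be reduced to one uptime per start/stop pair. The following is only a rough sketch: the names `EVENT_RE`, `parse_events`, `uptimes_seconds`, and `SAMPLE` are made up for illustration, and the pattern assumes the log uses exactly the two message formats quoted above. It measures the interval between restarts and says nothing about why the process was stopped.

```python
import re
from datetime import datetime

# Assumption: only the two message formats seen in the excerpt matter.
# finditer is used so the pattern also works if entries share one line.
EVENT_RE = re.compile(
    r"(\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}): \(\w+\.c\.\d+\) "
    r"(server started|server stopped)"
)


def parse_events(text):
    """Return (timestamp, kind) tuples in the order they appear in the log."""
    events = []
    for match in EVENT_RE.finditer(text):
        ts = datetime.strptime(match.group(1), "%Y-%m-%d %H:%M:%S")
        events.append((ts, match.group(2)))
    return events


def uptimes_seconds(events):
    """Seconds between each 'server started' and the following 'server stopped'."""
    uptimes = []
    started_at = None
    for ts, kind in events:
        if kind == "server started":
            started_at = ts
        elif started_at is not None:
            uptimes.append(int((ts - started_at).total_seconds()))
            started_at = None
    return uptimes


# First four entries of the excerpt, copied verbatim.
SAMPLE = """\
2018-03-05 21:30:23: (log.c.166) server started
2018-03-05 21:40:21: (server.c.1558) server stopped by UID = 0 PID = 25145
2018-03-05 21:40:24: (log.c.166) server started
2018-03-05 21:50:20: (server.c.1558) server stopped by UID = 0 PID = 30322
"""

print(uptimes_seconds(parse_events(SAMPLE)))  # [598, 596]
```

For these entries each run lasts just under ten minutes before root (UID = 0) stops the server, and the replacement starts two to three seconds later.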

See also:

- T132879: High load on some webgrid nodes

- T182070: tools-webgrid-lighttpd have ~ 90 procs stuck at 100% CPU time (mostly tools.jembot)

- T179378: some labvirt servers are at full CPU capacity

- T109362: continuous jobs killed during restart despite rescheduling

- T153281: webgrid-lighttpd queues kill OOM jobs with SIGKILL leaving php-cgi processes behind