TL;DR workaround

Here are the clush commands @bd808 has been using to first check for, and then kill, processes that have leaked out of Grid Engine because of a cleanup failure:

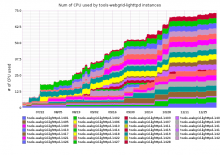

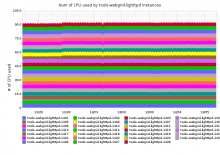
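The check command below keeps lines matching `grep -E " 1 "`, which matches a standalone ` 1 ` anywhere on the line, not only in the PPID column, and it excludes users by prefix (`^(...)`). A stricter variant is sketched here as an assumption, not as the command actually in use: it compares the PPID column exactly and matches user names exactly, reusing the same excluded accounts. `list_leaked_pids` and `EXCLUDED_USERS` are hypothetical names.

```shell
#!/usr/bin/env bash
# Hypothetical sketch: list PIDs of processes re-parented to PID 1 (likely
# leaked from Grid Engine), skipping system accounts and perl processes.
# ASSUMPTION: the excluded accounts mirror the clush command in this task.
EXCLUDED_USERS="root daemon diamond _lldpd messagebus nagios nslcd ntp prometheus statd syslog Debian-exim www-data sgeadmin"

# Reads "USER PPID PID CMD" lines on stdin (ps axwo user:20,ppid,pid,cmd)
# and prints the PID of every candidate orphan, one per line.
list_leaked_pids() {
    awk -v skip="$EXCLUDED_USERS ${USER:-}" '
        BEGIN {
            # Build a lookup table of excluded user names (exact match).
            n = split(skip, names, " ")
            for (i = 1; i <= n; i++) excluded[names[i]] = 1
        }
        # Skip the ps header line.
        NR == 1 && $1 == "USER" { next }
        # Skip system accounts and the current operator.
        ($1 in excluded) { next }
        # Same exclusion as "grep -v perl" in the original command.
        /perl/ { next }
        # Keep only processes whose parent is PID 1.
        $2 == 1 { print $3 }
    '
}
```

For a dry run on one host, pipe `ps axwo user:20,ppid,pid,cmd` into `list_leaked_pids` and review the PIDs; only then would one append `| xargs -r sudo kill -9`, as the original kill command does.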

```
# Check: list non-system processes whose line contains " 1 " (intended to match PPID 1, i.e. orphans)
$ clush -w @exec -w @webgrid -b 'ps axwo user:20,ppid,pid,cmd | grep -Ev "^($USER|root|daemon|diamond|_lldpd|messagebus|nagios|nslcd|ntp|prometheus|statd|syslog|Debian-exim|www-data|sgeadmin)"|grep -v perl|grep -E " 1 "'
# Kill: same pipeline, then extract the PID column and send SIGKILL
$ clush -w @exec -w @webgrid -b 'ps axwo user:20,ppid,pid,cmd | grep -Ev "^($USER|root|daemon|diamond|_lldpd|messagebus|nagios|nslcd|ntp|prometheus|statd|syslog|Debian-exim|www-data|sgeadmin)"|grep -v perl|grep -E " 1 "|awk "{print \$3}"|xargs sudo kill -9'
```

While looking at CPU usage of instances on Labs, I found that the tools-webgrid-lighttpd-* instances report high user CPU usage. That usage seems to leak (grow) over time, as shown over 5 months (CPU usage is expressed as the number of busy CPUs):

Over a week:

It seems we have the equivalent of 90 CPUs stuck at 100%.

View of CPU usage for tools-webgrid-lighttpd-* instances.

Based on:

```shell
# List processes by cputime, percent CPU, command and username
# Filter on cputime of 1 day or more and current CPU being at least 10%
# (the final sed turns whitespace runs into " | " to build table cells)
cmd="ps -e -o cputime,pcpu,cmd,user|grep -P '^\d+-.* \d\d\.'|sort -n|sed -e 's%\(^\|\s\+\)% | %g'"
# Run it on every webgrid-lighttpd host and prefix each row with the host number
for i in $(seq 1401 1428); do
    ssh "tools-webgrid-lighttpd-$i.tools.eqiad.wmflabs" "$cmd" | sed -e "s%^%| $i %"
done
```
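The `grep -P '^\d+-.* \d\d\.'` filter encodes both thresholds implicitly: a `DD-` prefix in TIME means at least one day of CPU time, and two digits before the decimal point means 10% to 99.9% CPU (so a process at 100% or more would not match). A minimal sketch that makes the thresholds explicit by parsing TIME (`[DD-]HH:MM:SS`) into seconds follows; `filter_long_busy`, `MIN_CPUTIME_DAYS` and `MIN_PCPU` are hypothetical names, not part of the original script.

```shell
#!/usr/bin/env bash
# Hypothetical sketch: explicit-threshold version of the grep -P filter.
MIN_CPUTIME_DAYS=1   # cumulative CPU time of at least one day
MIN_PCPU=10          # current CPU utilisation of at least 10%

# Reads "TIME %CPU CMD USER" lines on stdin (ps -e -o cputime,pcpu,cmd,user)
# and prints matching processes as markdown table rows.
# Only the first word of CMD is kept; USER is taken from the last column.
filter_long_busy() {
    awk -v min_secs="$((MIN_CPUTIME_DAYS * 86400))" -v min_pcpu="$MIN_PCPU" '
        # Skip the ps header line.
        NR == 1 && $1 == "TIME" { next }
        {
            t = $1; days = 0
            # Split off the optional "DD-" prefix.
            if (index(t, "-") > 0) { split(t, d, "-"); days = d[1]; t = d[2] }
            split(t, hms, ":")
            secs = days * 86400 + hms[1] * 3600 + hms[2] * 60 + hms[3]
            if (secs >= min_secs + 0 && $2 + 0 >= min_pcpu + 0)
                printf "| %s | %s | %s | %s |\n", $1, $2, $3, $NF
        }
    '
}
```

With this approach the filtering can stay local, for example `ssh "tools-webgrid-lighttpd-$i.tools.eqiad.wmflabs" 'ps -e -o cputime,pcpu,cmd,user' | filter_long_busy`, which avoids quoting a regex through two shells.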

The top offender seems to be tools.jembot. Results as of January 4th, 2018:

| Instance (tools-webgrid-lighttpd-N) | Cumulative CPU time (days-hh:mm:ss) | CPU (%) | Command | User |
|---|---|---|---|---|
| 1401 | 20-14:46:57 | 99.2 | /usr/bin/php-cgi | tools.jembot |
| 1401 | 178-13:37:34 | 95.5 | /usr/bin/php-cgi | tools.blockcalc |
| 1404 | 20-12:40:45 | 99.8 | /usr/bin/php-cgi | tools.jembot |
| 1404 | 20-18:45:12 | 99.8 | /usr/bin/php-cgi | tools.jembot |
| 1404 | 170-12:48:17 | 91.2 | /usr/bin/php-cgi | tools.wam |
| 1405 | 20-22:20:24 | 99.8 | /usr/bin/php-cgi | tools.jembot |
| 1405 | 174-15:13:09 | 93.4 | /usr/bin/php-cgi | tools.dupdet |
| 1407 | 20-11:46:07 | 99.7 | /usr/bin/php-cgi | tools.jembot |
| 1407 | 21-02:11:31 | 99.6 | /usr/bin/php-cgi | tools.jembot |
| 1407 | 157-10:17:17 | 99.4 | /usr/bin/php-cgi | tools.wsexport |
| 1408 | 65-23:01:42 | 90.2 | /usr/bin/php-cgi | tools.spellcheck |
| 1408 | 69-02:01:07 | 90.5 | /usr/bin/php-cgi | tools.spellcheck |
| 1408 | 77-08:11:17 | 98.3 | /usr/bin/php-cgi | tools.pinyin-wiki |
| 1408 | 79-03:28:27 | 96.5 | /usr/bin/php-cgi | tools.spellcheck |
| 1409 | 43-06:46:25 | 72.3 | /usr/bin/php-cgi | tools.jembot |
| 1409 | 43-06:47:33 | 72.3 | /usr/bin/php-cgi | tools.jembot |
| 1409 | 43-06:49:12 | 72.3 | /usr/bin/php-cgi | tools.jembot |
| 1409 | 43-06:50:14 | 72.3 | /usr/bin/php-cgi | tools.jembot |
| 1410 | 7-05:27:30 | 48.8 | /usr/bin/php-cgi | tools.jembot |
| 1410 | 7-05:27:32 | 48.8 | /usr/bin/php-cgi | tools.jembot |
| 1410 | 7-05:27:41 | 48.8 | /usr/bin/php-cgi | tools.jembot |
| 1410 | 7-05:27:59 | 48.8 | /usr/bin/php-cgi | tools.jembot |
| 1410 | 20-13:12:38 | 98.3 | /usr/bin/php-cgi | tools.jembot |
| 1410 | 185-05:23:07 | 99.1 | /usr/bin/php-cgi | tools.supercount |
| 1411 | 7-12:04:11 | 98.9 | /usr/bin/php-cgi | tools.dupdet |
| 1411 | 21-00:40:14 | 99.9 | /usr/bin/php-cgi | tools.jembot |
| 1411 | 39-01:19:03 | 98.1 | /usr/bin/php-cgi | tools.dupdet |
| 1412 | 117-15:40:47 | 79.5 | /usr/bin/php-cgi | tools.sowhy |
| 1412 | 117-16:36:43 | 79.5 | /usr/bin/php-cgi | tools.sowhy |
| 1413 | 20-11:19:09 | 99.4 | /usr/bin/php-cgi | tools.jembot |
| 1413 | 99-16:41:22 | 98.0 | /usr/bin/php-cgi | tools.croptool |
| 1413 | 101-22:23:08 | 98.6 | /usr/bin/php-cgi | tools.wsexport |
| 1414 | 6-01:45:25 | 49.1 | /usr/bin/php-cgi | tools.jembot |
| 1414 | 6-01:46:21 | 49.1 | /usr/bin/php-cgi | tools.jembot |
| 1414 | 6-01:47:22 | 49.1 | /usr/bin/php-cgi | tools.jembot |
| 1414 | 6-01:47:32 | 49.1 | /usr/bin/php-cgi | tools.jembot |
| 1414 | 20-14:59:49 | 98.9 | /usr/bin/php-cgi | tools.jembot |
| 1414 | 20-23:36:58 | 98.9 | /usr/bin/php-cgi | tools.jembot |
| 1415 | 12-06:45:37 | 99.8 | /usr/bin/php-cgi | tools.jembot |
| 1415 | 110-05:29:54 | 95.8 | /usr/bin/php-cgi | tools.dupdet |
| 1415 | 137-08:25:28 | 99.2 | /usr/bin/php-cgi | tools.wsexport |
| 1416 | 20-09:10:48 | 98.8 | /usr/bin/php-cgi | tools.jembot |
| 1416 | 20-09:12:58 | 98.8 | /usr/bin/php-cgi | tools.jembot |
| 1416 | 41-10:19:46 | 90.5 | /usr/bin/php-cgi | tools.wsexport |
| 1416 | 111-15:27:57 | 96.1 | /usr/bin/php-cgi | tools.jembot |
| 1417 | 10-14:06:20 | 71.9 | /usr/bin/php-cgi | tools.jembot |
| 1417 | 10-14:11:09 | 71.9 | /usr/bin/php-cgi | tools.jembot |
| 1417 | 10-14:25:11 | 72.0 | /usr/bin/php-cgi | tools.jembot |
| 1417 | 10-14:38:52 | 72.1 | /usr/bin/php-cgi | tools.jembot |
| 1417 | 104-16:14:40 | 96.0 | /usr/bin/php-cgi | tools.wsexport |
| 1418 | 26-22:28:14 | 98.4 | /usr/bin/php-cgi | tools.jembot |
| 1418 | 26-22:29:15 | 98.4 | /usr/bin/php-cgi | tools.jembot |
| 1418 | 26-22:30:01 | 98.4 | /usr/bin/php-cgi | tools.jembot |
| 1418 | 26-22:30:06 | 98.4 | /usr/bin/php-cgi | tools.jembot |
| 1419 | 20-14:39:46 | 99.8 | /usr/bin/php-cgi | tools.jembot |
| 1419 | 21-03:42:07 | 99.7 | /usr/bin/php-cgi | tools.jembot |
| 1420 | 20-14:47:52 | 99.8 | /usr/bin/php-cgi | tools.jembot |
| 1420 | 20-14:54:44 | 99.8 | /usr/bin/php-cgi | tools.jembot |
| 1420 | 21-03:49:59 | 99.8 | /usr/bin/php-cgi | tools.jembot |
| 1421 | 22-09:58:37 | 98.4 | /usr/bin/php-cgi | tools.jembot |
| 1421 | 22-10:00:35 | 98.4 | /usr/bin/php-cgi | tools.jembot |
| 1421 | 22-10:03:00 | 98.4 | /usr/bin/php-cgi | tools.jembot |
| 1421 | 22-10:03:32 | 98.4 | /usr/bin/php-cgi | tools.jembot |
| 1422 | 25-05:58:24 | 98.8 | /usr/bin/php-cgi | tools.jembot |
| 1422 | 25-06:00:02 | 98.8 | /usr/bin/php-cgi | tools.jembot |
| 1422 | 25-06:00:47 | 98.8 | /usr/bin/php-cgi | tools.jembot |
| 1422 | 25-06:02:09 | 98.8 | /usr/bin/php-cgi | tools.jembot |
| 1425 | 23-21:45:04 | 98.4 | /usr/bin/php-cgi | tools.jembot |
| 1425 | 23-21:46:35 | 98.4 | /usr/bin/php-cgi | tools.jembot |
| 1425 | 23-21:46:58 | 98.4 | /usr/bin/php-cgi | tools.jembot |
| 1425 | 23-21:48:50 | 98.4 | /usr/bin/php-cgi | tools.jembot |
| 1426 | 24-18:01:55 | 98.8 | /usr/bin/php-cgi | tools.jembot |
| 1426 | 24-18:02:38 | 98.8 | /usr/bin/php-cgi | tools.jembot |
| 1426 | 24-18:03:03 | 98.8 | /usr/bin/php-cgi | tools.jembot |
| 1426 | 24-18:03:22 | 98.8 | /usr/bin/php-cgi | tools.jembot |
| 1427 | 24-18:30:27 | 99.6 | /usr/bin/php-cgi | tools.jembot |
| 1427 | 24-18:31:11 | 99.6 | /usr/bin/php-cgi | tools.jembot |
| 1427 | 24-19:33:26 | 99.8 | /usr/bin/php-cgi | tools.jembot |
| 1428 | 35-13:33:16 | 93.3 | /usr/bin/php-cgi | tools.iabot |
| 1428 | 134-02:15:20 | 97.8 | /usr/bin/php-cgi | tools.wsexport |
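To relate the table to the "number of busy CPUs" unit used in the graphs, each row's %CPU can be divided by 100 and summed per tool (four processes at 98.4% are roughly 3.9 busy CPUs). A minimal sketch, assuming the rows are in the markdown form produced by the loop above; `cpu_equivalents_by_tool` is a hypothetical helper, and this table only covers processes that passed the 1 day / 10% filter, so its total need not match the graph.

```shell
#!/usr/bin/env bash
# Hypothetical sketch: summarise table rows per tool in CPU equivalents
# (100% = one fully busy CPU).
# Reads markdown rows "| instance | cputime | %CPU | command | user |" on stdin;
# header and separator rows are skipped because their %CPU cell is not numeric.
# Prints: tool, number of processes, CPU equivalents (largest first).
cpu_equivalents_by_tool() {
    awk -F '|' '
        # With "|" as separator field 1 is empty, so %CPU is $4 and user is $6.
        $4 ~ /^ *[0-9.]+ *$/ {
            user = $6
            gsub(/ /, "", user)
            cpus[user] += $4 / 100
            procs[user]++
        }
        END {
            for (u in cpus) printf "%s %d %.2f\n", u, procs[u], cpus[u]
        }
    ' | sort -k3,3 -rn
}
```

For example, saving the loop output to a file and running `cpu_equivalents_by_tool < rows.md` (hypothetical file name) ranks the tools by how many CPUs their stuck processes occupy.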