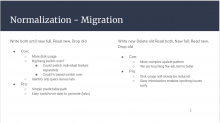

I) Preparation

- update the write logic so that, when enabled by configuration, it writes to both schemas (wb_terms and the normalized one) (Tasks T219297 and T219295)

- write a maintenance script for populating the normalized tables with property terms (Task T219894)

- write a maintenance script for populating the normalized tables with item terms (part of Checkpoint T219122)
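The configurable dual-write step can be sketched roughly as follows. This is only an illustration of the idea, not the actual Wikibase code: `TermStore`, `DualWriteTermWriter` and the `write_both_for` setting are hypothetical names, and the stores are in-memory stand-ins for the two schemas.

```python
from dataclasses import dataclass, field


@dataclass
class TermStore:
    """In-memory stand-in for one schema's storage (hypothetical)."""
    rows: dict = field(default_factory=dict)

    def save(self, entity_id, terms):
        self.rows[entity_id] = dict(terms)


class DualWriteTermWriter:
    """Always writes to the old schema; also writes to the new one when configured."""

    def __init__(self, legacy, normalized, write_both_for):
        self.legacy = legacy                       # stands in for wb_terms
        self.normalized = normalized               # stands in for the normalized tables
        self.write_both_for = set(write_both_for)  # assumed config, e.g. {"property"}

    def save_terms(self, entity_type, entity_id, terms):
        # The old schema keeps receiving every write during the migration.
        self.legacy.save(entity_id, terms)
        # The new schema is written only for entity types switched on in config.
        if entity_type in self.write_both_for:
            self.normalized.save(entity_id, terms)


legacy, normalized = TermStore(), TermStore()
writer = DualWriteTermWriter(legacy, normalized, write_both_for={"property"})
writer.save_terms("property", "P1", {"en": "example property label"})
writer.save_terms("item", "Q1", {"en": "example item label"})
```

With only properties switched on, `P1` lands in both stores while `Q1` is written to the old schema only. Emptying `write_both_for` stops all writes to the new schema without touching the old one.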

II) Property Terms migration (small footprint on time and size)

turn on configuration for writing to both schemas for properties & run the maintenance script for properties (part of Checkpoint T219301)

estimated increase in disk space usage =

ratio of properties/entities * current disk usage of wb_terms (incl. indexes) as of March 2019 * ratio of data size after/before normalization (according to test run and incl. indexes)

= 0.0001 * 846GB * 0.2 = ~17.5MB

So we need roughly 18MB extra for the property terms migration. This extra size is not expected to grow significantly before we reach the point where we can drop wb_terms entirely.
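The estimate can be reproduced with a few lines of arithmetic. This is a minimal sketch using only the rounded inputs quoted in this plan; the function name is made up for illustration. With decimal units (1GB = 1000MB) the product comes out at about 17MB, in line with the roughly 18MB budgeted.

```python
def estimated_extra_gb(entity_ratio, wb_terms_gb=846.0, normalization_ratio=0.2):
    """Extra disk space (GB) needed for the normalized copy of one entity type.

    entity_ratio:        share of all entities that are of this type
    wb_terms_gb:         current size of wb_terms incl. indexes (March 2019 figure)
    normalization_ratio: normalized size / current size, from the test run
    """
    return entity_ratio * wb_terms_gb * normalization_ratio


property_extra_gb = estimated_extra_gb(0.0001)
print(f"property terms: ~{property_extra_gb * 1000:.1f} MB extra")
```

The same function applies to items by passing their share of entities (0.9999), which gives about 169GB.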

Migration time is hard to estimate, but it is not expected to be long for properties. We will measure it and later use that measurement to estimate the item migration time.

III) Item Terms migration (big footprint on time and size)

turn on configuration for writing to both schemas for items & run the maintenance script for items (part of Checkpoint T219123)

estimated increase in disk space usage =

ratio of items/entities * current disk usage of wb_terms (incl. indexes) as of March 2019 * ratio of data size after/before normalization (according to test run and incl. indexes)

= 0.9999 * 846GB * 0.2 = ~170GB

So we need roughly 170GB extra for the item terms migration. This number is expected to grow, possibly significantly, while the migration is under way.

Migration time is to be estimated once the property terms migration is over and has been measured.
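One simple way to turn the measured property run into an item estimate is a linear extrapolation of throughput. The sketch below assumes that item terms migrate at the same rate as property terms, which may not hold in practice (different batch sizes, replication lag, load). The function name is hypothetical and the numbers in the example are placeholders, not measurements.

```python
def estimate_item_migration_hours(property_terms_migrated, property_hours,
                                  item_terms_to_migrate):
    """Extrapolate item migration time from the measured property migration.

    Assumes throughput (terms per hour) stays constant between the two runs.
    """
    if property_terms_migrated <= 0 or property_hours <= 0:
        raise ValueError("need a completed, measured property migration")
    terms_per_hour = property_terms_migrated / property_hours
    return item_terms_to_migrate / terms_per_hour


# Placeholder inputs only: 1,000 terms in 2 hours, 10,000 terms still to migrate.
hours = estimate_item_migration_hours(1_000, 2.0, 10_000)
print(f"estimated item migration time: {hours:.1f} hours")
```

The real inputs would be the term counts and wall-clock time recorded during the property terms migration.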

Rollback plan

Stopping the migration and rolling back is straightforward in this plan. We only need to stop the migration script and stop writing to the new schema; we can also drop the new schema tables if necessary.