Summary

In May-June 2019, the Wikidata development team will drop the wb_terms table from the database in favor of a new optimized schema. Over the years, this table has become too big, causing various issues. Because the table is used by many external tools, we are setting up a process to make sure the change can be made together with developers and maintainers, without breaking tools.

On this task, you can find a description of the changes and the process, and you can ask for more details or for help in the comments. On the wb_terms - Tool Builders Migration board you will find all the details about the migration, how to update your tool, and you can add your own tasks.

Context: what is the problem with wb_terms?

wb_terms is an important table in Wikidata’s internal structure. It supports vital features in Wikidata such as searching for entities and rendering the right labels of items and properties that are linked in statements. It is also used by several other extensions, such as PropertySuggester, which relies on the search feature mentioned above, and by many tool builders who query entity terms (labels, descriptions and aliases) for their needs.

In April 2018, the table took up 900 GB; in March 2019 it takes up 946 GB (a 46 GB increase, of which 30 GB happened in the last 3 months). We expect the table to grow even faster as adoption of Wikidata increases.

Currently, our master database host has less free disk space than the size of the table. This is a risky situation: any attempt to alter this table, or any other table in the database, becomes dangerous because of the shortage of disk space required for such operations.

wb_terms takes up a lot of disk space because its design leads to over 70% duplication, because it is too denormalized, and because it has 7 indexes. The indexes alone take up about 590 GB.

In order to tackle these potential risks, we are working on a solution:

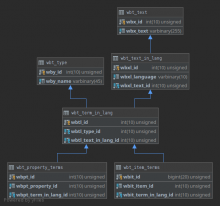

- redesign the table by normalizing it to avoid the high degree of duplication (see new schema diagram, new schema DDL)

- provide a clean abstraction on code level to access the new schema functionality

- migrate data gradually from the old wb_terms table into the new schema while we make sure we do not interrupt any usage of the table functionality

Overview of the new schema:
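To illustrate why normalizing reduces duplication, here is a minimal sketch using an in-memory SQLite database. The table names (`flat_terms`, `texts`, `terms`) and the sample rows are made up for illustration only; they are not the actual wb_terms layout or the new schema. The point is simply that a text shared by several terms is stored once and referenced by id.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Denormalized layout (hypothetical): the text is repeated on every row.
CREATE TABLE flat_terms (
    entity_id TEXT, term_type TEXT, language TEXT, text TEXT
);
-- Normalized layout (hypothetical): each distinct text is stored once.
CREATE TABLE texts (id INTEGER PRIMARY KEY, text TEXT UNIQUE);
CREATE TABLE terms (
    entity_id TEXT, term_type TEXT, language TEXT,
    text_id INTEGER REFERENCES texts(id)
);
""")

# Made-up sample rows: the same string appears in several languages/entities.
rows = [
    ("Q64", "label", "en", "Berlin"),
    ("Q64", "label", "de", "Berlin"),
    ("Q64", "label", "fr", "Berlin"),
    ("Q64", "alias", "en", "Berlin, Germany"),
    ("Q-other", "label", "en", "Berlin"),
]
conn.executemany("INSERT INTO flat_terms VALUES (?, ?, ?, ?)", rows)

for entity_id, term_type, language, text in rows:
    # Store the text only if it is not known yet, then reference it by id.
    conn.execute("INSERT OR IGNORE INTO texts (text) VALUES (?)", (text,))
    (text_id,) = conn.execute(
        "SELECT id FROM texts WHERE text = ?", (text,)
    ).fetchone()
    conn.execute(
        "INSERT INTO terms VALUES (?, ?, ?, ?)",
        (entity_id, term_type, language, text_id),
    )

stored_flat = conn.execute("SELECT COUNT(text) FROM flat_terms").fetchone()[0]
stored_normalized = conn.execute("SELECT COUNT(*) FROM texts").fetchone()[0]
print(f"text copies stored: flat={stored_flat}, normalized={stored_normalized}")
```

In this toy data set, five copies of text in the flat table shrink to two distinct strings in the normalized one; the cost is an extra join when reading.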

How are existing tools building on top of Wikidata affected?

Everyone querying wb_terms directly (on Cloud Services Wiki Replicas through a database connection) will be affected. These tools will need a code update to continue working. Tool builders and maintainers will need to adapt their code to the new schema before the migration starts, and switch to the new code when the migration starts.

➡️ You can find more details about how to prepare your code in this task.
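As a rough idea of what such a code update could look like, the sketch below compares a label lookup against wb_terms with an equivalent lookup against normalized tables. It runs on an in-memory SQLite database with one made-up row, not on the Wiki Replicas. The `wbt_*` table and column names are an assumption for illustration and are not spelled out in this announcement; please check them against the new schema DDL before relying on them.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Simplified stand-in for the old table (only the columns used here).
CREATE TABLE wb_terms (
    term_full_entity_id TEXT, term_entity_type TEXT,
    term_language TEXT, term_type TEXT, term_text TEXT
);
-- Assumed names for the normalized tables; verify against the schema DDL.
CREATE TABLE wbt_type (wby_id INTEGER PRIMARY KEY, wby_name TEXT);
CREATE TABLE wbt_text (wbx_id INTEGER PRIMARY KEY, wbx_text TEXT);
CREATE TABLE wbt_text_in_lang (
    wbxl_id INTEGER PRIMARY KEY, wbxl_language TEXT, wbxl_text_id INTEGER
);
CREATE TABLE wbt_term_in_lang (
    wbtl_id INTEGER PRIMARY KEY, wbtl_type_id INTEGER,
    wbtl_text_in_lang_id INTEGER
);
CREATE TABLE wbt_item_terms (
    wbit_id INTEGER PRIMARY KEY, wbit_item_id INTEGER,
    wbit_term_in_lang_id INTEGER
);

-- One made-up sample term, stored in both layouts.
INSERT INTO wb_terms VALUES ('Q64', 'item', 'en', 'label', 'Berlin');
INSERT INTO wbt_type VALUES (1, 'label');
INSERT INTO wbt_text VALUES (1, 'Berlin');
INSERT INTO wbt_text_in_lang VALUES (1, 'en', 1);
INSERT INTO wbt_term_in_lang VALUES (1, 1, 1);
INSERT INTO wbt_item_terms VALUES (1, 64, 1);
""")

# Old style: everything lives in one wide table.
OLD_QUERY = """
SELECT term_text FROM wb_terms
WHERE term_full_entity_id = ? AND term_type = 'label' AND term_language = ?
"""

# New style: follow the references from the item down to the text.
NEW_QUERY = """
SELECT wbx_text
FROM wbt_item_terms
JOIN wbt_term_in_lang ON wbit_term_in_lang_id = wbtl_id
JOIN wbt_type ON wbtl_type_id = wby_id
JOIN wbt_text_in_lang ON wbtl_text_in_lang_id = wbxl_id
JOIN wbt_text ON wbxl_text_id = wbx_id
WHERE wbit_item_id = ? AND wby_name = 'label' AND wbxl_language = ?
"""

old_label = conn.execute(OLD_QUERY, ("Q64", "en")).fetchone()[0]
new_label = conn.execute(NEW_QUERY, (64, "en")).fetchone()[0]
print(old_label, new_label)
```

Note that in this sketch the item is looked up by its numeric id (64) rather than the full id string ('Q64'); this, too, is part of the assumed layout.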

Other tools, for example those getting data through the API, pywikibot, gadgets, etc. will not be affected and will work as before with no action required from the maintainers.

Tool builders are encouraged to visit the dedicated workboard created for migrating their tools, where we will organize detailed information, answer questions, and help fix issues to the best of our capacity. Feel free to add your own tasks to this board and to collaborate with each other.

Timeline

- 24th of April: Breaking change announcement

- 29th of May: Test environment for tool builders will be ready

- 24th of June: Property Terms migration starts

- 26th of June: Read property terms from new schema on Wikidata

- 3rd of July: Item terms migration begins

- 10th of July: Read item terms from one of the two schemas

➡️ You can find more details about the migration plan in this task.

If unavoidable circumstances lead to a change in this timeline, this task will be updated accordingly and an announcement will be made.

I’m running one or several tools using wb_terms, what should I do?

You may first want to check whether this alternative, possibly easier solution suits your needs.

- You need to adapt the code of your tool(s) to the new schema. Here's the documentation that will help you:

- The migration will take place on May 29th, but don’t wait until the last minute to start working on your code :) A test system will be available from May 15th so you can try your code against the new database structure.

- If you need help or advice, we offer you several options:

  - You can ask for details or help in a comment under this task or create a task with the tag wb_terms - Tool Builders Migration. Wikidata developers will answer you as soon as possible.

  - Two sessions of the Technical Advice IRC Meeting (TAIM) will be dedicated to Wikidata’s wb_terms redesign: you can join and discuss your issues with Wikidata developers. These meetings will take place on May 15th and May 22nd, at 15:00 UTC, on the #wikimedia-tech channel.

  - A dedicated session will be organized at the Wikimedia hackathon in Prague. It will take place on Friday, May 17th at 15:00 at the Wikidata table (more details).

Is this going to affect other Wikibase instances outside of Wikidata/Cloud Services?

Not yet. The new schema and migration will be deployed on Wikidata first. A separate announcement and release for other Wikibase instances will follow, with more details.