TASK AUTO-GENERATED by Nagios/Icinga RAID event handler

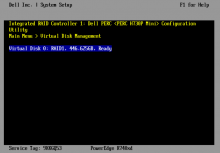

A degraded RAID (megacli) was detected on host an-worker1132. An automatic snapshot of the current RAID status is attached below.

Please sync with the service owner to find the appropriate time window before actually replacing any failed hardware.

CRITICAL: 6 failed LD(s) (Offline, Offline, Offline, Offline, Offline, Offline)

$ sudo /usr/local/lib/nagios/plugins/get-raid-status-megacli
Failed to execute '['/usr/lib/nagios/plugins/check_nrpe', '-4', '-H', 'an-worker1132', '-c', 'get_raid_status_megacli']': RETCODE: 3
STDOUT: NRPE: Unable to read output
STDERR: None