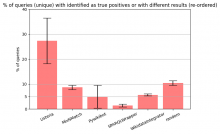

By using a tool that compares the results of the same SPARQL query run against two graphs, we should evaluate how many queries might "break" when run against the Wikidata main graph instead of the full graph.

The comparison will use T351819 and will be based on the sets of SPARQL queries extracted in T349512.
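The tool from T351819 may work differently. As a minimal sketch, assuming both endpoints return SELECT results in the standard SPARQL 1.1 JSON results format, two results of the same query can be compared as multisets of rows. This way, row order (which is undefined without ORDER BY) is not counted as a difference, but duplicate rows still are. The names `compare_results` and `_row_key` are hypothetical, and ASK/CONSTRUCT results are not handled.

```python
from collections import Counter
from typing import Any


def _row_key(binding: dict[str, dict[str, str]], variables: list[str]) -> tuple:
    """Turn one solution into a hashable key; unbound variables become None."""
    key = []
    for var in variables:
        term = binding.get(var)
        if term is None:
            key.append(None)
        else:
            # type, value and optional datatype/lang together identify an RDF term
            key.append((term.get("type"), term.get("value"),
                        term.get("datatype"), term.get("xml:lang")))
    return tuple(key)


def compare_results(full: dict[str, Any], main: dict[str, Any]) -> dict[str, Any]:
    """Compare two SPARQL JSON SELECT results as multisets, ignoring row order."""
    variables = sorted(set(full["head"]["vars"]) | set(main["head"]["vars"]))
    full_rows = Counter(_row_key(b, variables) for b in full["results"]["bindings"])
    main_rows = Counter(_row_key(b, variables) for b in main["results"]["bindings"])
    only_full = full_rows - main_rows  # rows lost on the main graph
    only_main = main_rows - full_rows  # rows that appear only on the main graph
    return {
        "identical": not only_full and not only_main,
        "only_in_full": sum(only_full.values()),
        "only_in_main": sum(only_main.values()),
    }
```

A query whose results lose rows on the main graph shows up with a non-zero `only_in_full` count. That count alone does not tell whether the split is the cause, which is why the differences still need to be classified.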

We should try to identify the reasons for the differences and whether each one is related or unrelated to the split:

- query features that depend on the internal ordering of the Blazegraph B-trees (LIMIT X OFFSET Y, bd:slice)

- use of external datasets (federation, mwapi)

- Unicode collation issues (T233204)

- ... (add more as they are discovered)
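A first pass over the differing queries could tag the features listed above with plain text heuristics. The sketch below is hypothetical: the patterns and the `suspect_features` name are assumptions, not part of T351819. It treats LIMIT/OFFSET as order-dependent only when no ORDER BY is present, and uses ORDER BY merely as a weak hint of possible collation sensitivity (T233204).

```python
import re

# Hypothetical heuristics for features that may change results independently of the split.
FEATURE_PATTERNS = {
    # bd:slice exposes the B-tree order directly
    "bd_slice": re.compile(r"\bbd:slice\b", re.IGNORECASE),
    # federation: a SERVICE clause pointing at a remote endpoint IRI
    "federation": re.compile(r"\bSERVICE\s+(?:SILENT\s+)?<https?://", re.IGNORECASE),
    # MediaWiki API service
    "mwapi": re.compile(r"\bwikibase:mwapi\b", re.IGNORECASE),
    # weak hint only: ordering on strings may be affected by collation
    "order_by": re.compile(r"\bORDER\s+BY\b", re.IGNORECASE),
}
LIMIT_OFFSET = re.compile(r"\b(?:LIMIT|OFFSET)\s+\d+", re.IGNORECASE)


def suspect_features(query: str) -> set[str]:
    """Return the names of features that could explain a difference unrelated to the split."""
    features = {name for name, pattern in FEATURE_PATTERNS.items() if pattern.search(query)}
    # Without ORDER BY, LIMIT/OFFSET pick rows according to internal storage order
    if LIMIT_OFFSET.search(query) and "order_by" not in features:
        features.add("limit_offset_unordered")
    return features
```

Because these are regular expressions over raw query text, matches inside comments or string literals produce false positives. A query with no matching feature is therefore only a candidate for a split-related difference and still deserves a manual look.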

For queries whose results vary because of the split, we should try to evaluate whether targeting scholarly articles is intentional or not (e.g. statistical queries with GROUP BY counts) and, where possible, identify the tools and their maintainers so we can contact them and gather feedback on the project.
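One way to prioritize the follow-up is to flag likely statistical queries (GROUP BY together with COUNT) and group the affected queries per client. This is a hypothetical sketch: it assumes each extracted query can be associated with some client identifier, called `user_agent` here, which may not be available in the T349512 sets. The names `AffectedQuery`, `is_statistical` and `group_by_client` are illustrative only.

```python
import re
from collections import defaultdict
from dataclasses import dataclass

GROUP_BY = re.compile(r"\bGROUP\s+BY\b", re.IGNORECASE)
COUNT = re.compile(r"\bCOUNT\s*\(", re.IGNORECASE)


@dataclass
class AffectedQuery:
    query: str
    user_agent: str  # hypothetical: assumes the logs keep some client identifier


def is_statistical(query: str) -> bool:
    """Heuristic: a GROUP BY with a COUNT aggregate suggests a statistical query."""
    return bool(GROUP_BY.search(query) and COUNT.search(query))


def group_by_client(affected: list[AffectedQuery]) -> dict[str, dict[str, int]]:
    """Count affected and statistical queries per client, to find tools worth contacting."""
    summary: dict[str, dict[str, int]] = defaultdict(lambda: {"total": 0, "statistical": 0})
    for item in affected:
        entry = summary[item.user_agent]
        entry["total"] += 1
        if is_statistical(item.query):
            entry["statistical"] += 1
    return dict(summary)
```

Clients with many statistical queries are the ones most likely to count scholarly articles without meaning to, so they may be good first candidates for feedback.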

AC:

- a report is available showing how the current split will affect queries when they are run on the Wikidata main subgraph

- a list of affected tools/scripts (when identifiable) whose maintainers could be contacted
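The report in the first criterion could start from a simple breakdown of comparison outcomes. The following is a hypothetical sketch: `QueryOutcome`, `categorize` and `build_report` are illustrative names, and the category labels are assumptions. Each compared query is placed in one of three buckets: identical, differing with a known non-split explanation, or differing with no known explanation. The buckets are then rendered as a pipe table.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class QueryOutcome:
    query_id: str
    identical: bool
    # names of features that could explain a difference unrelated to the split
    features: set[str] = field(default_factory=set)


def categorize(outcome: QueryOutcome) -> str:
    if outcome.identical:
        return "identical"
    if outcome.features:
        return "differs: possibly unrelated to split"
    return "differs: no known non-split cause"


def build_report(outcomes: list[QueryOutcome]) -> str:
    """Render category counts and shares as a pipe table."""
    if not outcomes:
        return "No queries compared."
    counts = Counter(categorize(o) for o in outcomes)
    total = len(outcomes)
    lines = ["| Category | Queries | Share |", "|---|---|---|"]
    for category, n in counts.most_common():
        lines.append(f"| {category} | {n} | {n / total:.1%} |")
    return "\n".join(lines)
```

The "no known non-split cause" bucket is the one to inspect by hand, since it is where genuinely split-related breakage would most likely be found.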