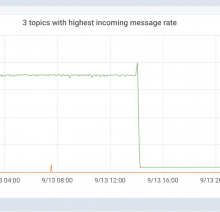

Starting 2019-04-05, traffic (messages and bytes) to __consumer_offsets on logstash1010 appears to have increased steadily and then plateaued at ~15k msg/s. Restarting the logstash frontends seems to reset the traffic (this happened on April 15th and again on April 16th), although I don't see the traffic increasing now (see screenshot). Link to dashboard (note that it will take a while to load, since the dashboard is heavy on Prometheus at the moment)

I'm filing this for reference / tracking, as I don't know whether it causes any problems or what the root cause is. cc @elukey @Ottomata @herron @colewhite