During the SRE summit, someone asked whether webrequest_sampled_128 data could be kept in Turnilo/Druid for more than a week (ideally for as long as possible, in accordance with our 3-month retention policy), since it would be useful for various use cases.


| Subject | Repo | Branch | Lines +/- |
|---|---|---|---|
| Keeping webrequest_sampled data for 30 days | operations/puppet | production | +1 -1 |

Event Timeline

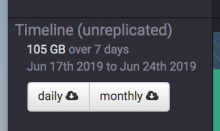

This data set's size in Druid is 100GB per week.

We can increase it to a month with our current capacity.

Would that be OK?

Yes, definitely. A month would help Brandon and the Traffic team a lot (they need to look at week-over-week trends), and surely Faidon as well.

Wait, is this data being kept for 60 days? https://github.com/wikimedia/puppet/blob/production/modules/profile/manifests/analytics/refinery/job/data_purge.pp#L102

I see, there was a rule in the Druid UI dropping this data after 7 days. I think we should move away from that and let our puppet config be the one that dictates how much data we keep. cc @JAllemandou in case there is any issue

Change 518769 had a related patch set uploaded (by Nuria; owner: Nuria):

[operations/puppet@production] Keeping webrequest_sampled data for 30 days

@Nuria: We set it up this way on purpose, to make it easy to load data into Druid if needed (data is present in deep storage for 60 days) while still saving space in Druid.

Now that we have agreed to keep 1 month of data in Druid, I still recommend using rules to unload data after 1 month and keeping 60 days in deep storage, since 2 months would mean 2 TB per server in Druid, which is probably too much.

The thing is that in 2 years of having this data available we have never needed to load data from deep storage. Also, since there was no documentation about this, I do not think any of us realized that loading from deep storage was possible.

I would prefer to keep 1 month of data; if we think we need more, we can make the case for more hardware without having to do any "special loading", and simply have the data available to everyone. Do you see any problems with this? There might be something I am missing regarding deep storage, so please let me know.

A better, more precise explanation:

- In order for data to be dropped from deep storage, it first needs to be unloaded from the historical nodes. This can be done in 2 ways: disabling a full datasource, or disabling segments using rules.

- Once segments are disabled, you can run the kill task to drop them.
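A minimal sketch of the second step, assuming Druid's native `kill` task spec submitted to the Overlord at `/druid/indexer/v1/task`. The Overlord address and the interval are hypothetical placeholders; the script only builds the request and does not send it.

```python
import json
import urllib.request

# Hypothetical Overlord address (assumption); replace with the real one.
OVERLORD_URL = "http://druid-overlord.example:8090"


def build_kill_task(datasource: str, interval: str) -> dict:
    """Return a Druid `kill` task spec.

    A kill task permanently deletes *unused* (already disabled) segments of
    `datasource` within `interval` (ISO 8601 "start/end") from the metadata
    store and deep storage. Segments still in use are not touched.
    """
    return {"type": "kill", "dataSource": datasource, "interval": interval}


def build_submit_request(task: dict) -> urllib.request.Request:
    """Build (but do not send) a POST of the task spec to the Overlord."""
    return urllib.request.Request(
        f"{OVERLORD_URL}/druid/indexer/v1/task",
        data=json.dumps(task).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    # Hypothetical interval covering segments that were already disabled.
    task = build_kill_task("webrequest_sampled_128", "2019-01-01/2019-04-01")
    req = build_submit_request(task)
    print(req.get_method(), req.full_url)
    print(req.data.decode("utf-8"))
    # To actually submit: urllib.request.urlopen(req)
```

Since the kill task only acts on unused segments, running it before the segments are disabled would leave the data in place.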

Given the need to use rules to disable segments on the historical nodes, I'd rather keep as much data as possible in Hadoop (no storage issues so far).

So, let me make sure I understand: the https://github.com/wikimedia/analytics-refinery/blob/master/bin/refinery-drop-druid-deep-storage-data script requires that we disable segments using rules before we can drop data?

Correct (see https://druid.apache.org/docs/latest/tutorials/tutorial-delete-data.html, section "How to permanently delete data"). We can also use API calls to mark segments as unused if we prefer not to use rules.
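For the API route, a minimal sketch, assuming a Druid version that exposes the Coordinator endpoint `POST /druid/coordinator/v1/datasources/{dataSource}/markUnused` with an `{"interval": ...}` body (the exact endpoint has varied across Druid versions). The Coordinator address and the interval are hypothetical; the request is built but not sent.

```python
import json
import urllib.parse
import urllib.request

# Hypothetical Coordinator address (assumption).
COORDINATOR_URL = "http://druid-coordinator.example:8081"


def build_mark_unused_request(datasource: str, interval: str) -> urllib.request.Request:
    """Build (but do not send) a request marking segments in `interval` unused.

    Once marked unused, the segments are unloaded from the historicals and
    become eligible for deletion from deep storage by a kill task.
    """
    path = f"/druid/coordinator/v1/datasources/{urllib.parse.quote(datasource)}/markUnused"
    return urllib.request.Request(
        COORDINATOR_URL + path,
        data=json.dumps({"interval": interval}).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    # Hypothetical interval (assumption).
    req = build_mark_unused_request("webrequest_sampled_128", "2019-01-01/2019-04-01")
    print(req.get_method(), req.full_url)
    print(req.data.decode("utf-8"))
```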

Change 518769 abandoned by Nuria:

Keeping webrequest_sampled data for 30 days

Reason:

...

OK, I have edited the UI rules to have 30-day retention. Deep storage will keep 60 days of data, so no changes are needed there.
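For reference, a 30-day retention expressed as Druid Coordinator rules might look like the sketch below. It assumes the `loadByPeriod` and `dropForever` rule types and the Coordinator endpoint `POST /druid/coordinator/v1/rules/{dataSource}`. The tier name `_default_tier`, the replica count of 2 and the Coordinator address are assumptions, not the actual cluster settings.

```python
import json
import urllib.parse
import urllib.request

# Hypothetical Coordinator address (assumption).
COORDINATOR_URL = "http://druid-coordinator.example:8081"


def retention_rules(days: int, replicants: int = 2) -> list:
    """Return an ordered Druid rule chain keeping the last `days` of data loaded.

    The Coordinator applies the first rule that matches each segment: recent
    segments match the load rule, older ones fall through to the drop rule
    and are unloaded from the historicals (they stay in deep storage).
    """
    return [
        {
            "type": "loadByPeriod",
            "period": f"P{days}D",
            # Assumed tier name and replica count.
            "tieredReplicants": {"_default_tier": replicants},
        },
        {"type": "dropForever"},
    ]


def build_set_rules_request(datasource: str, rules: list) -> urllib.request.Request:
    """Build (but do not send) a request replacing the datasource's rules."""
    path = f"/druid/coordinator/v1/rules/{urllib.parse.quote(datasource)}"
    return urllib.request.Request(
        COORDINATOR_URL + path,
        data=json.dumps(rules).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    req = build_set_rules_request("webrequest_sampled_128", retention_rules(30))
    print(req.get_method(), req.full_url)
    print(req.data.decode("utf-8"))
```

With this split, the Druid rules control what is queryable in Turnilo (30 days), while the puppet purge job controls how long segments remain in deep storage (60 days).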