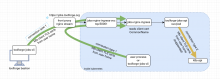

My initial idea was to deploy this API in Toolforge k8s itself, at something like https://jobs.toolforge.org/api/. However, the new Toolforge Jobs Framework requires client TLS certificates in order to call the k8s API on behalf of the original user.

This combination presents some challenges for the TLS flow:

- For Toolforge webservices, server-side TLS is terminated at the nginx front proxy, so the original client TLS certificate never reaches the backend nginx or the pod running in k8s.

- The nginx front proxy calls the k8s ingress without TLS or, more specifically, without passing along the original client TLS certificate.

- The acme-chief certificate for *.toolforge.org is currently not available to arbitrary pods running in k8s.
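Roughly, the hop where client certificates get lost can be pictured as the simplified front proxy config below. This is a hypothetical sketch, not the actual Toolforge config: the certificate paths and the ingress address are made up. The point is only that TLS ends here and a new plain-HTTP request is issued to the ingress.

```nginx
# Hypothetical, simplified view of the shared front proxy.
server {
    listen 443 ssl;
    server_name *.toolforge.org;

    # Wildcard server certificate, only present on the front proxy
    # (hypothetical paths).
    ssl_certificate     /etc/ssl/toolforge.crt;
    ssl_certificate_key /etc/ssl/toolforge.key;

    # No ssl_client_certificate / ssl_verify_client here, so nginx never
    # asks the client for a certificate.

    location / {
        # A new plain-HTTP request to the k8s ingress: whatever happened in
        # the client's TLS handshake does not survive this hop.
        proxy_pass http://k8s-ingress.example.internal;
    }
}
```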

Some options to handle this:

option 1

Introduce a special case for jobs.toolforge.org in the front proxy that uses the nginx stream module to proxy at the TCP level instead of HTTP. Client certificate TLS termination for jobs.toolforge.org is then left to the jobs.toolforge.org pod itself or to the k8s ingress controller.

Docs: https://docs.nginx.com/nginx/admin-guide/load-balancer/tcp-udp-load-balancer/
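A minimal sketch of what option 1 could look like, assuming the front proxy's nginx is built with ngx_stream_ssl_preread_module (it is not built by default). A top-level `stream` block reads the SNI hostname from the TLS ClientHello without decrypting anything, passes jobs.toolforge.org connections through untouched, and hands everything else to the existing HTTP front proxy. The upstream names, addresses and the local port 8443 are hypothetical.

```nginx
# Hypothetical sketch: this block sits at the top level of nginx.conf,
# next to (not inside) the existing "http" block.
stream {
    # Choose a backend from the SNI hostname, without terminating TLS.
    map $ssl_preread_server_name $toolforge_backend {
        jobs.toolforge.org  jobs_api_passthrough;
        default             http_front_proxy;
    }

    # Hypothetical k8s ingress (or jobs pod) that terminates TLS itself
    # and therefore sees the original client certificate.
    upstream jobs_api_passthrough {
        server k8s-ingress.example.internal:443;
    }

    # The existing HTTP front proxy, moved to a local port so that this
    # stream listener can own port 443.
    upstream http_front_proxy {
        server 127.0.0.1:8443;
    }

    server {
        listen 443;
        ssl_preread on;   # populates $ssl_preread_server_name
        proxy_pass $toolforge_backend;
    }
}
```

The existing `http` server blocks would have to move off port 443 (for example to `listen 127.0.0.1:8443 ssl;`). Two side effects: every backend, including the existing HTTP front proxy, would see connections coming from the stream proxy rather than from the real client unless PROXY protocol is enabled (`proxy_protocol on;` in the stream `server`, with the receivers configured to accept it), and whatever terminates TLS for jobs.toolforge.org needs its own server certificate, which runs into the acme-chief limitation listed above.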

option 2

Introduce general config options in the nginx front proxy to account for client TLS certificates and forward them to the k8s ingress backend. The front proxy would need to be aware of the k8s CA in order to validate client TLS certificates. This might be the most 'elegant' solution, aside from the inconvenience of having to extract the k8s CA and make it available to the front proxy.

The nginx front proxy config would end up looking like this:

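```nginx
server {
    [...]

    # Validate client certificates against the k8s cluster CA.
    ssl_client_certificate /etc/kubernetes-ca.crt;

    # Request a client certificate without requiring one, so requests
    # without a certificate (e.g. ordinary browser traffic) still work.
    ssl_verify_client optional;

    [...]

    # Forward the subject DN of the verified client certificate to the
    # k8s ingress backend (the header is omitted when no cert was presented).
    proxy_set_header X-Client-Dn $ssl_client_s_dn;
}
```

option 3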

Move general TLS termination out of the front proxy and into the k8s ingress nginx server. Since we may still need to support webservices running on the grid, this option is unlikely to be feasible.

option 4

Introduce a dedicated frontend for the jobs API alongside the common Toolforge front proxy. This would cost one VM and one floating IP.
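Assuming the dedicated frontend terminates TLS itself (one possible design; it could equally pass TCP through as in option 1), its nginx config could be as small as the sketch below. Because this server only handles the jobs API, it can require a client certificate with `ssl_verify_client on` instead of the `optional` mode needed on a shared front proxy. Certificate paths and the backend address are hypothetical; `/etc/kubernetes-ca.crt` is the same k8s CA file as in option 2.

```nginx
# Hypothetical sketch of the dedicated jobs API frontend.
server {
    listen 443 ssl;
    server_name jobs.toolforge.org;

    # Server certificate for jobs.toolforge.org (hypothetical paths).
    ssl_certificate     /etc/ssl/jobs.toolforge.org.crt;
    ssl_certificate_key /etc/ssl/jobs.toolforge.org.key;

    # Only the jobs API lives here, so a client certificate signed by the
    # k8s CA can be mandatory: the handshake fails without one.
    ssl_client_certificate /etc/kubernetes-ca.crt;
    ssl_verify_client on;

    location / {
        # Redefines (rather than appends to) any X-Client-Dn header sent
        # by the client, so the backend only sees the verified DN.
        proxy_set_header X-Client-Dn $ssl_client_s_dn;
        proxy_pass http://k8s-ingress.example.internal;  # hypothetical
    }
}
```

This leaves the shared front proxy untouched, at the price of the extra VM and floating IP plus a server certificate for jobs.toolforge.org on the new host.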