Backups in the codfw -> eqiad direction complete rather quickly.

305897 Full 5,561 3.078 T OK 08-Feb-21 17:19 backup2002.codfw.wmnet-Monthly-1st-Wed-EsRwEqiad-mysql-srv-backups-dumps-latest

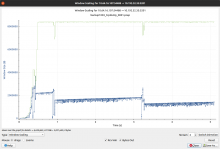

For some reason, eqiad -> codfw backups take 4x-7x longer :-( See: https://grafana.wikimedia.org/d/000000377/host-overview?viewPanel=8&orgId=1&from=1612780931730&to=1612809026255&var-server=backup1002&var-datasource=thanos&var-cluster=misc

We need to debug this: while the current speed is "enough" (although undesirable) to perform regular backups, it could be a major blocker in case of an emergency recovery.

We need to first discover at which layer this is happening, and then debug it further:

- Software limitation (e.g. bacula)
- Hardware limitation (e.g. hw raid/disks issue)
- Network limitation (e.g. link instability or bottleneck)
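
As a possible starting point for the hardware layer, here is a minimal sketch (not an existing tool of ours) that times a raw sequential read of a file on the storage volume, bypassing bacula and the network entirely. The function name, block size and byte cap are illustrative assumptions. Note that the OS page cache can inflate the result, so it should be pointed at a large file that has not been read recently.

```python
import sys
import time


def read_throughput_mib_s(path, block_size=4 * 1024 * 1024, max_bytes=1024 ** 3):
    """Sequentially read up to max_bytes from path and return the rate in MiB/s.

    Hypothetical helper: block_size and max_bytes are arbitrary defaults,
    not values tuned for any particular backup host.
    """
    total = 0
    start = time.perf_counter()
    # buffering=0 avoids Python-level buffering; the OS page cache still applies.
    with open(path, "rb", buffering=0) as f:
        while total < max_bytes:
            chunk = f.read(min(block_size, max_bytes - total))
            if not chunk:  # end of file
                break
            total += len(chunk)
    elapsed = time.perf_counter() - start
    return (total / (1024 ** 2)) / elapsed if elapsed > 0 else float("inf")


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python3 read_rate.py /path/to/large/file
    print(f"{read_throughput_mib_s(sys.argv[1]):.1f} MiB/s")
```

If the local read rate on the slow side is far above the rate seen during the backup, the disks are unlikely to be the bottleneck, and the network and software layers become the next candidates. A similar write test on the receiving storage would be needed to rule out the other end.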